1. Preface¶

1.1. Who Should Use This Guide¶

The Installation and Configuration Guide is intended for system engineers and administrators who want to build, operate, and maintain a cluster system. Instructions for designing, installing, and configuring a cluster system with EXPRESSCLUSTER are covered in this guide.

1.2. How This Guide is Organized¶

2. Determining a system configuration: Provides instructions for how to verify system requirements and determine the system configuration.

3. Configuring a cluster system: Helps you understand how to configure a cluster system.

4. Installing EXPRESSCLUSTER: Provides instructions for how to install EXPRESSCLUSTER.

5. Registering the license: Provides instructions for how to register the license.

6. Creating the cluster configuration data: Provides instructions for how to create the cluster configuration data with the Cluster WebUI.

7. Verifying a cluster system: Verify that the cluster system you have configured operates successfully.

8. Verifying operation: Run the dummy-failure test and adjust the parameters.

9. Preparing to operate a cluster system: Provides information on what you need to consider before starting to operate EXPRESSCLUSTER.

10. Uninstalling and reinstalling EXPRESSCLUSTER: Provides instructions for how to uninstall and reinstall EXPRESSCLUSTER.

1.3. EXPRESSCLUSTER X Documentation Set¶

The EXPRESSCLUSTER X manuals consist of the following six guides. The title and purpose of each guide are described below:

This guide is intended for all users. The guide covers topics such as product overview, system requirements, and known problems.

Installation and Configuration Guide

This guide is intended for system engineers and administrators who want to build, operate, and maintain a cluster system. Instructions for designing, installing, and configuring a cluster system with EXPRESSCLUSTER are covered in this guide.

This guide is intended for system administrators. The guide covers topics such as how to operate EXPRESSCLUSTER, the function of each module, and troubleshooting. The guide is a supplement to the Installation and Configuration Guide.

This guide is intended for administrators and for system administrators who want to build, operate, and maintain EXPRESSCLUSTER-based cluster systems. The guide describes maintenance-related topics for EXPRESSCLUSTER.

This guide is intended for administrators and for system engineers who want to build EXPRESSCLUSTER-based cluster systems. The guide describes features to work with specific hardware, serving as a supplement to the Installation and Configuration Guide.

This guide is intended for administrators and for system engineers who want to build EXPRESSCLUSTER-based cluster systems. The guide describes EXPRESSCLUSTER X 4.0 WebManager, Builder, and EXPRESSCLUSTER Ver 8.0 compatible commands.

1.4. Conventions¶

In this guide, Note, Important, and See also are used as follows:

Note

Used when the information given is important, but not related to data loss or damage to the system or machine.

Important

Used when the information given is necessary to avoid data loss or damage to the system or machine.

See also

Used to indicate where related information can be found.

The following conventions are used in this guide.

| Convention | Usage | Example |
|---|---|---|
| Bold | Indicates graphical objects, such as fields, list boxes, menu selections, buttons, labels, icons, etc. | In User Name, type your name. On the File menu, click Open Database. |
| Angled bracket within the command line | Indicates that the value specified inside of the angled bracket can be omitted. | |
| Monospace | Indicates path names, commands, system output (message, prompt, etc.), directory, file names, functions and parameters. | |
| bold | Indicates the value that a user actually enters from a command line. | Enter the following: clpcl -s -a |
| italic | Indicates that users should replace italicized part with values that they are actually working with. | |

In the figures of this guide, this icon represents EXPRESSCLUSTER.


1.5. Contacting NEC¶

For the latest product information, visit our website below:

2. Determining a system configuration¶

This chapter provides instructions for determining the cluster system configuration that uses EXPRESSCLUSTER.

This chapter covers:

2.1. Steps from configuring a cluster system to installing EXPRESSCLUSTER

2.4. Checking system requirements for each EXPRESSCLUSTER module

2.1. Steps from configuring a cluster system to installing EXPRESSCLUSTER¶

Before you set up a cluster system that uses EXPRESSCLUSTER, you should carefully plan the cluster system with due consideration for factors such as hardware requirements, software to be used, and the way the system is used. When you have built the cluster, check to see if the cluster system is successfully set up before you start its operation.

This guide explains how to create a cluster system with EXPRESSCLUSTER through step-by-step instructions. Read each chapter while actually carrying out the procedures to install the cluster system. The following are the steps you take from designing the cluster system to operating EXPRESSCLUSTER:

See also

Refer to the "Reference Guide" as needed when operating EXPRESSCLUSTER by following the procedures introduced in this guide. See the "Getting Started Guide" for the latest information including system requirements and release information.

Before installing EXPRESSCLUSTER, create the hardware configuration, the cluster system configuration and the information on the cluster system configuration.

2. Determining a system configuration

Review the overview of EXPRESSCLUSTER and determine the configurations of the hardware, network and software of the cluster system.

3. Configuring a cluster system

Plan a failover group that is to be the unit of a failover, and determine the information required to install the cluster system.

Install EXPRESSCLUSTER and apply the license registration and the cluster configuration data to it.

4. Installing EXPRESSCLUSTER

Install EXPRESSCLUSTER on the servers that constitute a cluster.

5. Registering the license

Register the license required to operate EXPRESSCLUSTER.

6. Creating the cluster configuration data

Based on the failover group information determined in step 2 ("3. Configuring a cluster system"), create the cluster configuration data by using the Cluster WebUI, and then configure a cluster.

7. Verifying a cluster system

Check if the cluster system has been created successfully.

Conduct a dummy test, parameter tuning and operational simulation required to be done before operating the cluster system. The procedures to uninstall and reinstall are also explained in this section.

8. Verifying operation

Check the operation and perform parameter tuning by a dummy-failure.

9. Preparing to operate a cluster system

Check the task simulation, backup and/or restoration and the procedure to handle an error, which are required to operate a cluster system.

10. Uninstalling and reinstalling EXPRESSCLUSTER

This chapter explains how to uninstall and reinstall EXPRESSCLUSTER.

2.2. What is EXPRESSCLUSTER?¶

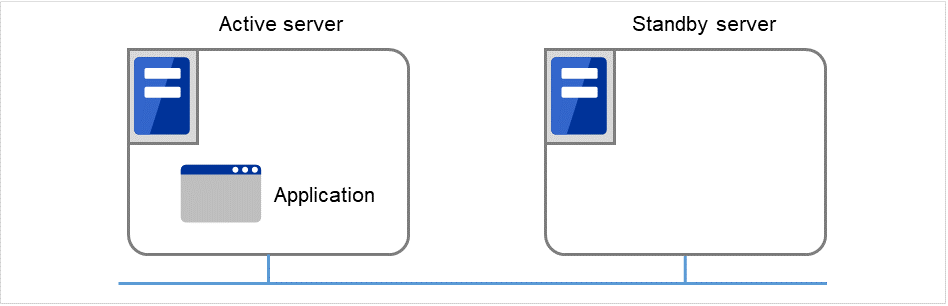

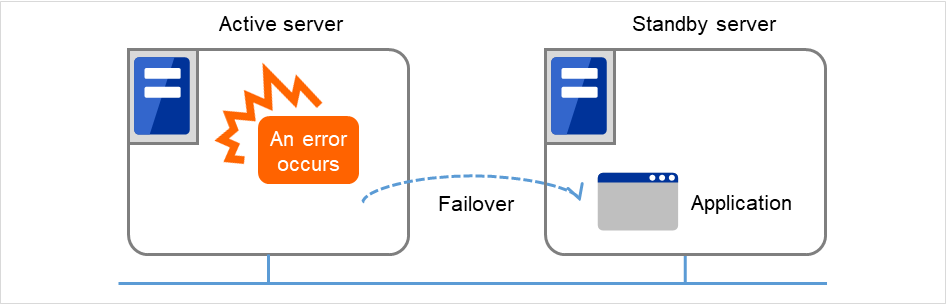

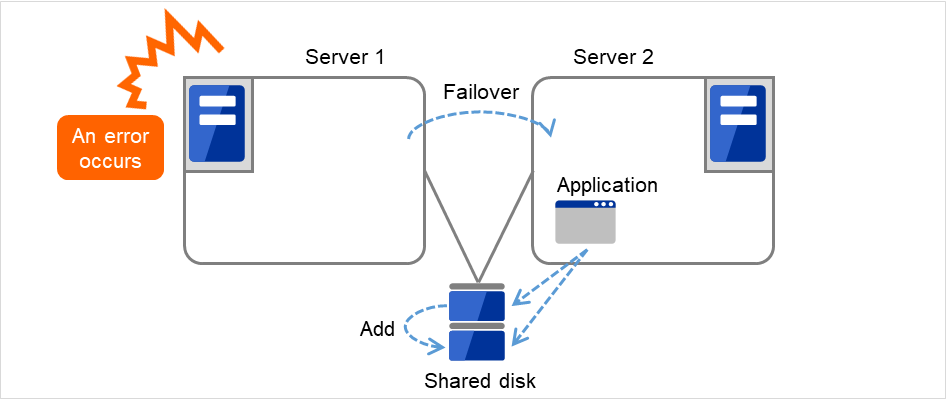

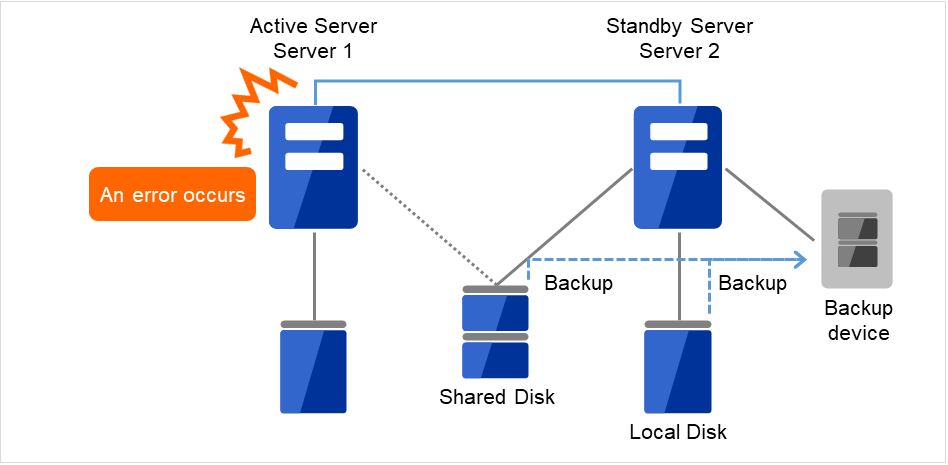

EXPRESSCLUSTER is software that enhances availability and expandability of systems by a redundant (clustered) system configuration. The application services running on the active server are automatically taken over by the standby server when an error occurs on the active server.

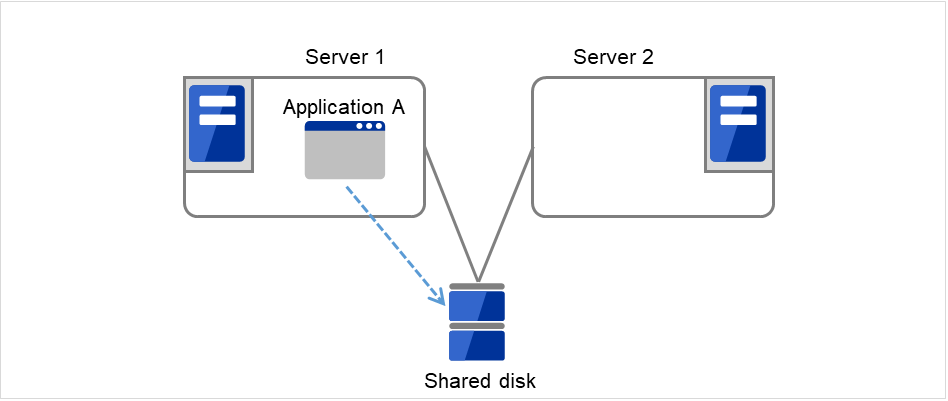

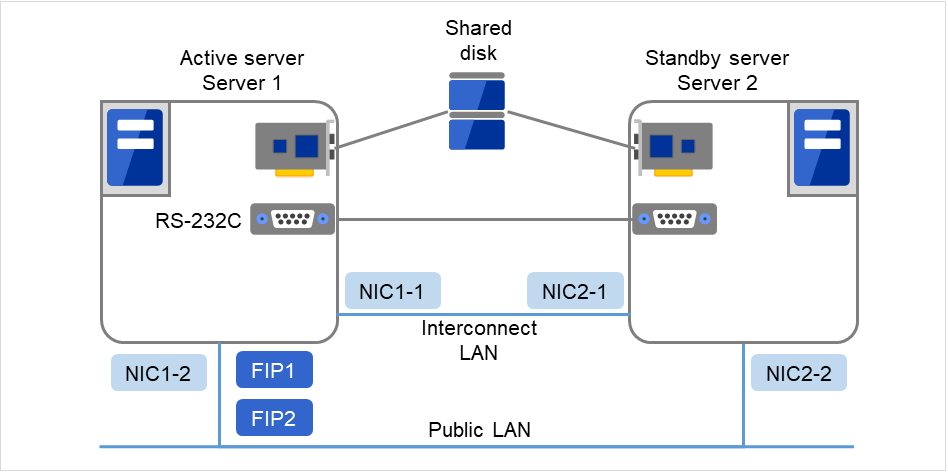

Fig. 2.1 Cluster system (in normal operation)¶

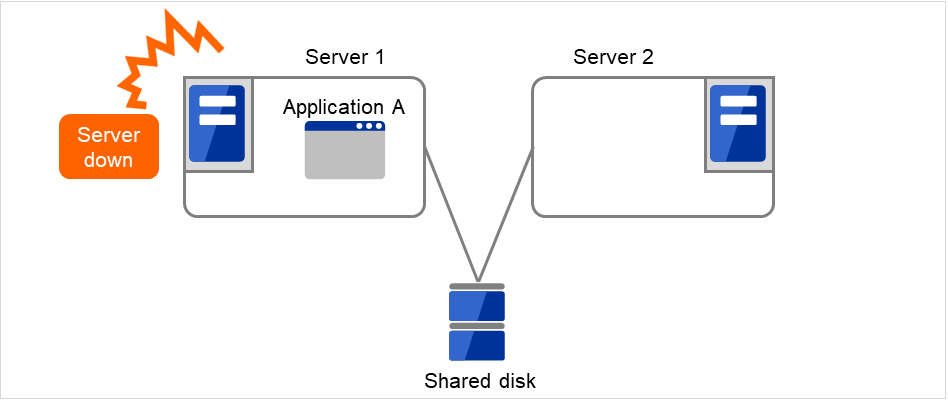

Fig. 2.2 Cluster system (when an error occurs)¶

The following can be achieved by installing a cluster system that uses EXPRESSCLUSTER.

- High availability: The downtime is minimized by automatically failing over the applications and services to a "healthy" server when one of the servers which configure a cluster stops.

- High expandability: Both Windows and Linux support large scale cluster configurations having up to 32 servers.

See also

For details on EXPRESSCLUSTER, refer to "Using EXPRESSCLUSTER" in the "Getting Started Guide".

2.2.1. EXPRESSCLUSTER modules¶

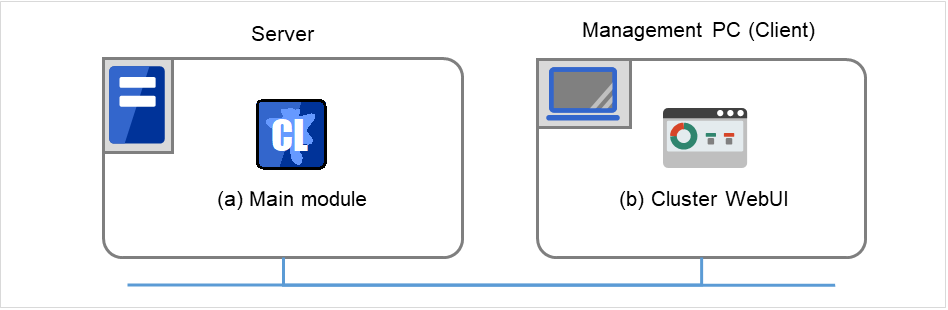

EXPRESSCLUSTER X consists of the following two modules:

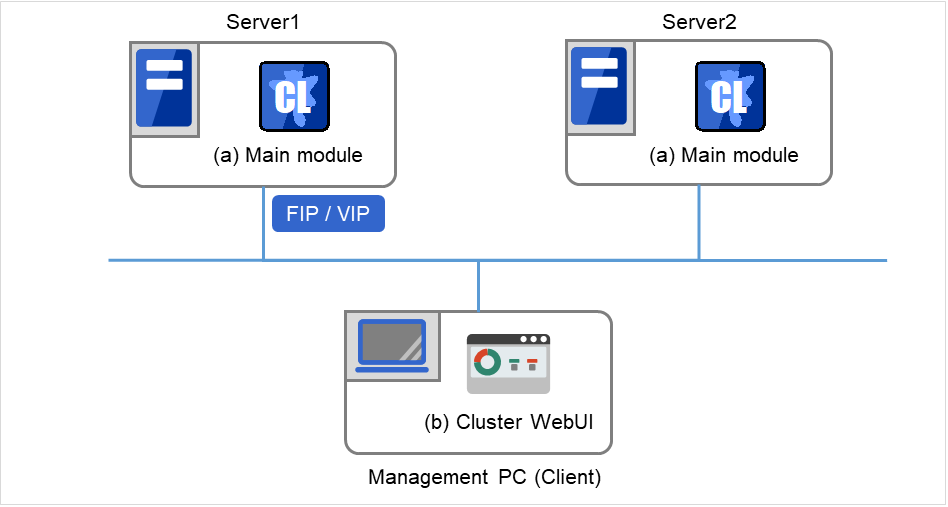

- EXPRESSCLUSTER Server: The main module of EXPRESSCLUSTER, which has all the high availability functions of the server. Install this module on each server constituting the cluster.

- Cluster WebUI: This is a tool to create the configuration data of EXPRESSCLUSTER and to manage EXPRESSCLUSTER operations. The Cluster WebUI is installed in EXPRESSCLUSTER Server, but it is distinguished from the EXPRESSCLUSTER Server because the Cluster WebUI is operated through a Web browser on the management PC.

Fig. 2.3 Modules constituting EXPRESSCLUSTER¶

2.3. Planning system configuration¶

You need to determine an appropriate hardware configuration to install a cluster system that uses EXPRESSCLUSTER. The configuration examples of EXPRESSCLUSTER are shown below.

See also

For latest information on system requirements, refer to "Installation requirements for EXPRESSCLUSTER" and "Latest version information" in the "Getting Started Guide".

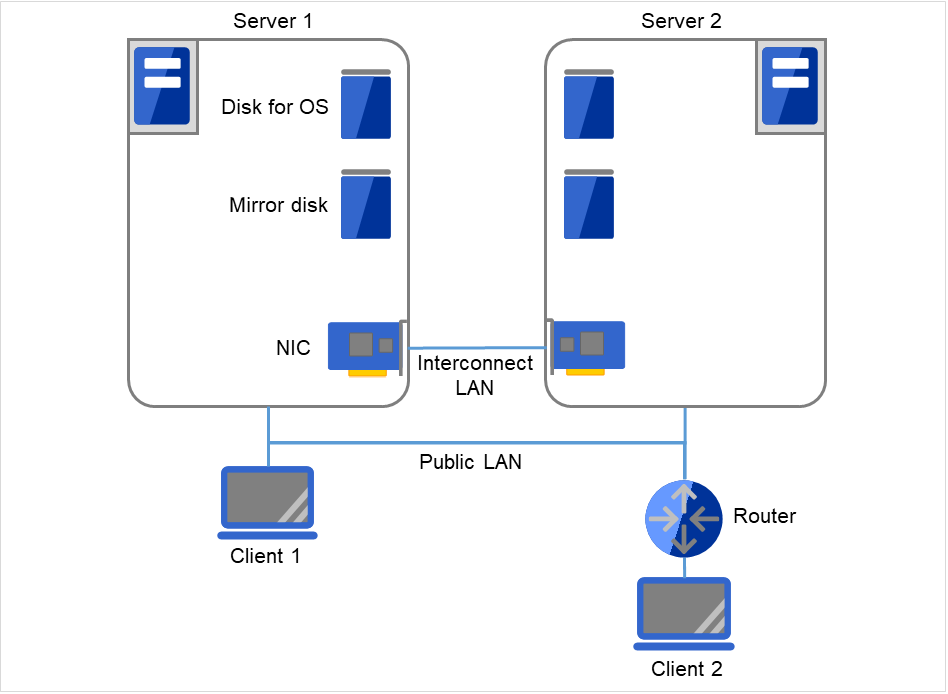

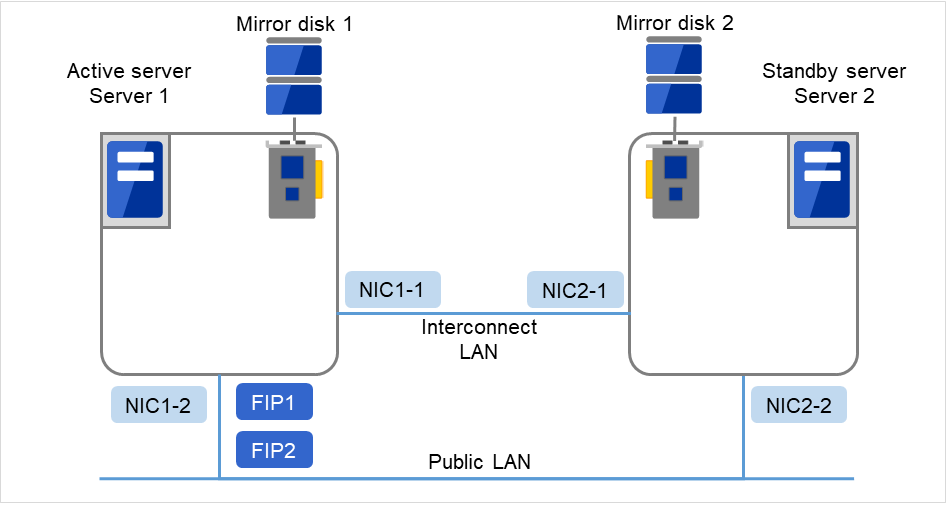

2.3.3. Example 2: Configuration using mirror disks with 2 nodes¶

Different models can be used for servers. However, the mirror disks should have the same drive letter on both servers.

Connecting the servers to each other directly with a cable is recommended for the interconnect. A hub can also be used.

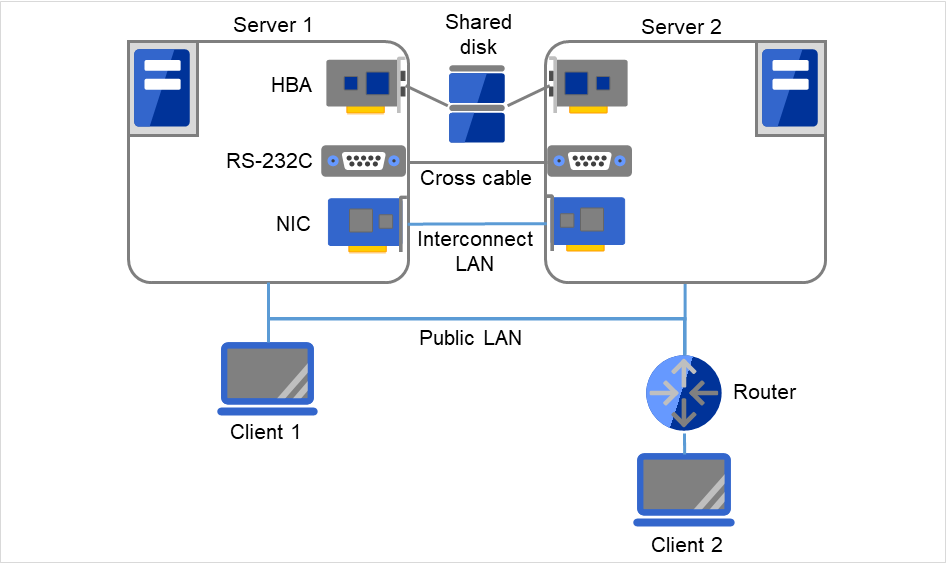

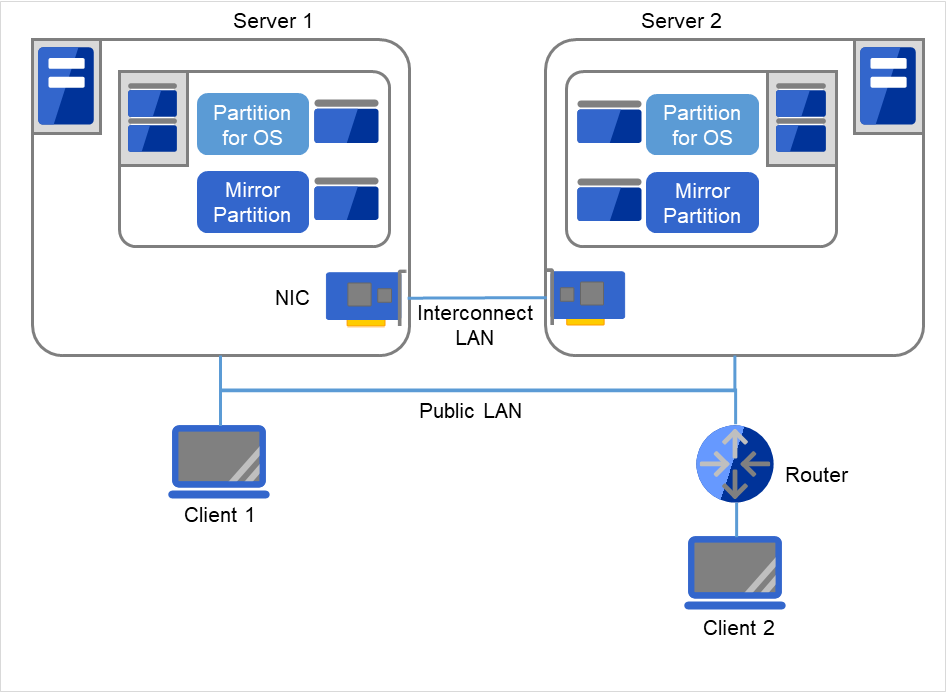

On cluster servers (Servers 1 and 2), the same drive letter needs to be specified. For this configuration, different models can be used. However, their partitions for mirroring must be set at exactly the same size in bytes. This may be impossible if there is a difference in the disk geometry. For connecting the interconnect cable, direct connection between the servers is recommended, but connection via a hub is also fine. Client 1, which exists on the same LAN as that of the cluster servers, can access them through a floating IP address. Client 2, which exists on a remote LAN, can also access the cluster servers through a floating IP address. Using floating IP addresses does not require the router to be configured for them.

Fig. 2.5 Example of a configuration using mirror disks with two nodes¶

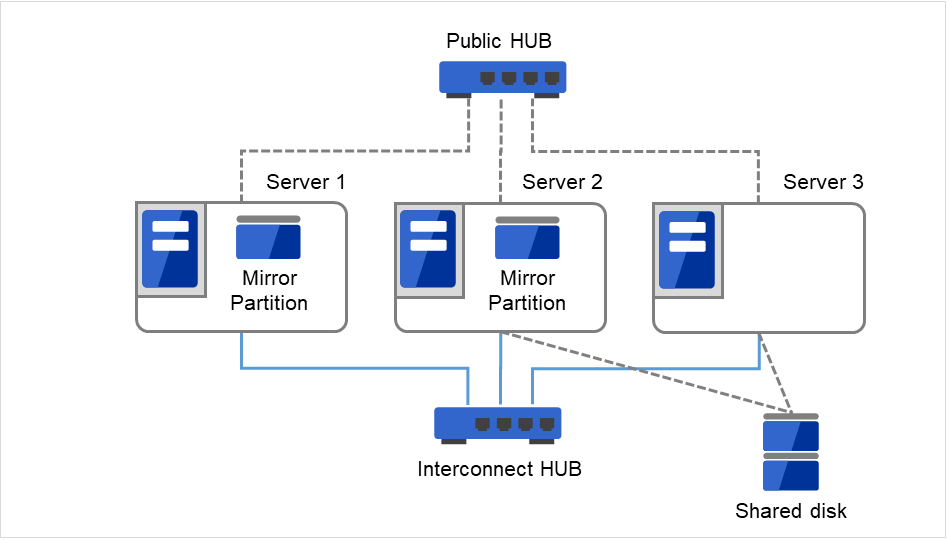

2.3.4. Example 3: Configuration using mirror partitions on the disks for OS with 2 nodes¶

A mirroring partition can be created on the disk used for the OS.

On Servers 1 and 2, the same drive letter needs to be specified. For this configuration, different models can be used. However, their partitions for mirroring must be set at exactly the same size in bytes. This may be impossible if there is a difference in the disk geometry. The partition for mirroring can be created on the same disk as that for the OS on each of the servers. Client 1, which exists on the same LAN as that of the cluster servers, can access them through a floating IP address. Client 2, which exists on a remote LAN, can also access the cluster servers through a floating IP address. Using floating IP addresses does not require the router to be configured for them.

Fig. 2.6 Example of a configuration with two nodes, making the mirroring area coexist with the OS area¶

See also

For mirror partition settings, refer to "Group resource details" and "Understanding mirror disk resources" in the "Reference Guide".

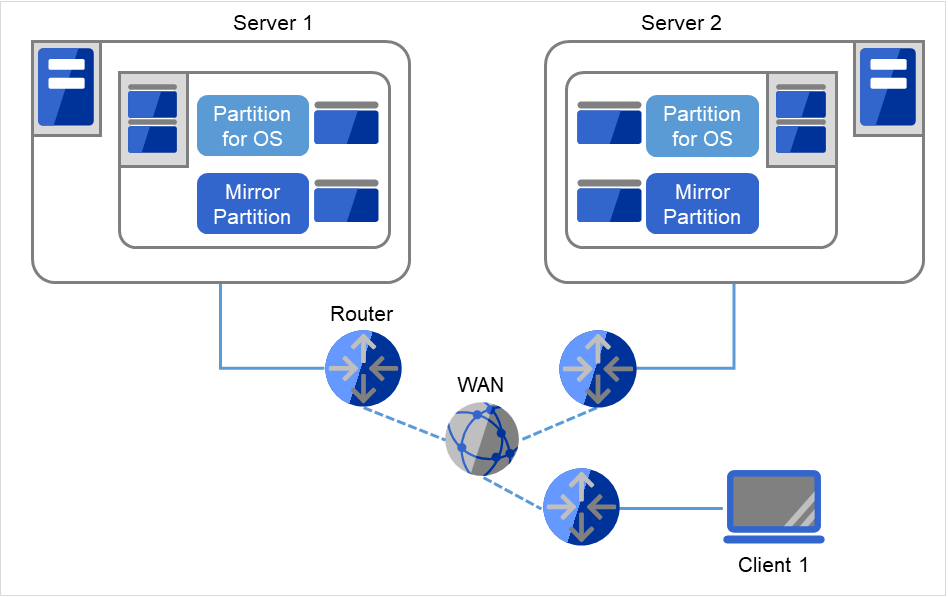

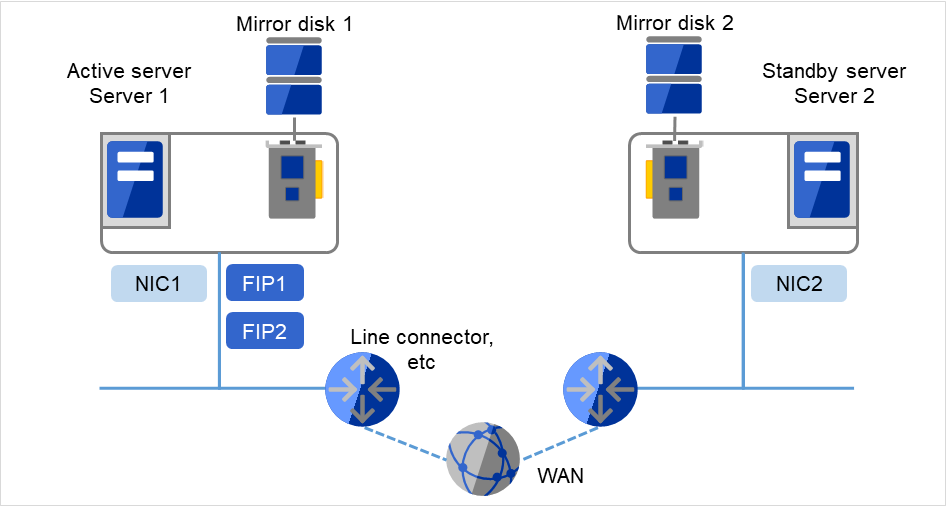

2.3.5. Example 4: Configuring a remote cluster by using asynchronous mirror disks with 2 nodes¶

On Servers 1 and 2, the same drive letter needs to be specified. For this configuration, different models can be used. However, their partitions for mirroring must be set at exactly the same size in bytes. This may be impossible if there is a difference in the disk geometry. A client can access the cluster servers through a virtual IP (VIP) address. Using a VIP address requires a router to communicate the RIP host route.

Configuring a cluster between servers in remote sites by using WAN, as shown below, is a solution for disaster control.

Using asynchronous mirror disks can curb a decrease in disk performance due to the network delay. There is still a chance that the information updated immediately before a failover gets lost.

It is necessary to secure enough communication bandwidth for the traffic amount of updated information on mirror disks. Insufficient bandwidth can cause delay of communication with a business operation client or interruption of mirroring.

Use Dynamic DNS resource or Virtual IP resource to switch the connected server.

Fig. 2.7 Example of configuring a remote cluster by using asynchronous mirror disks with two nodes¶

See also

For information on resolving network partition and the VIP settings, see "Understanding virtual IP resources" in "Group resource details" and "Details on network partition resolution resources" in the "Reference Guide".

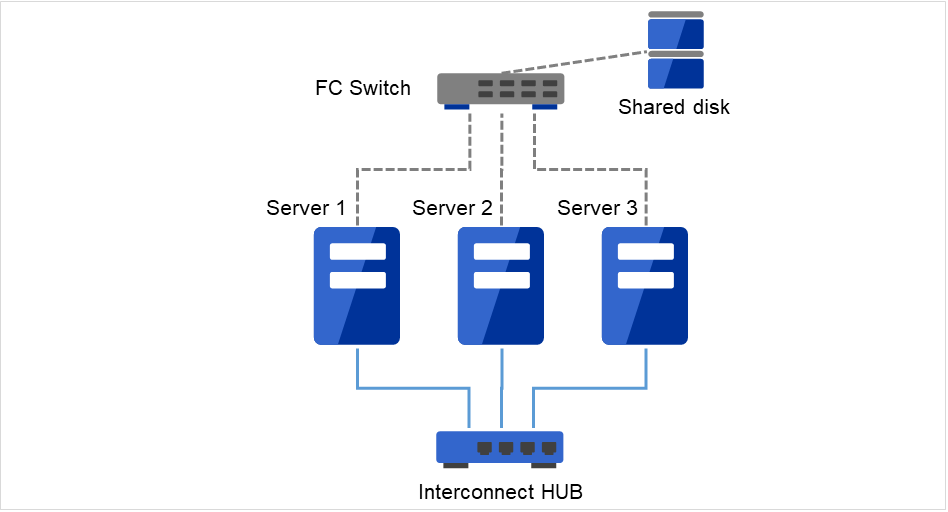

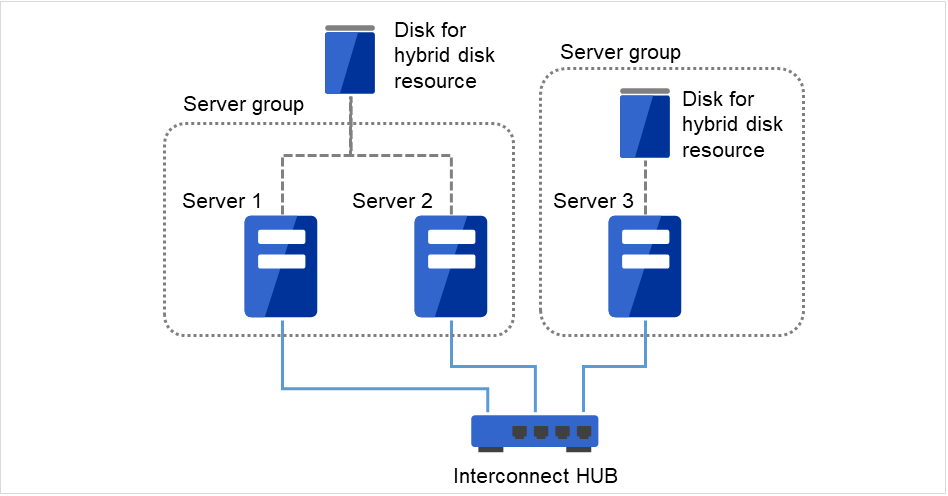

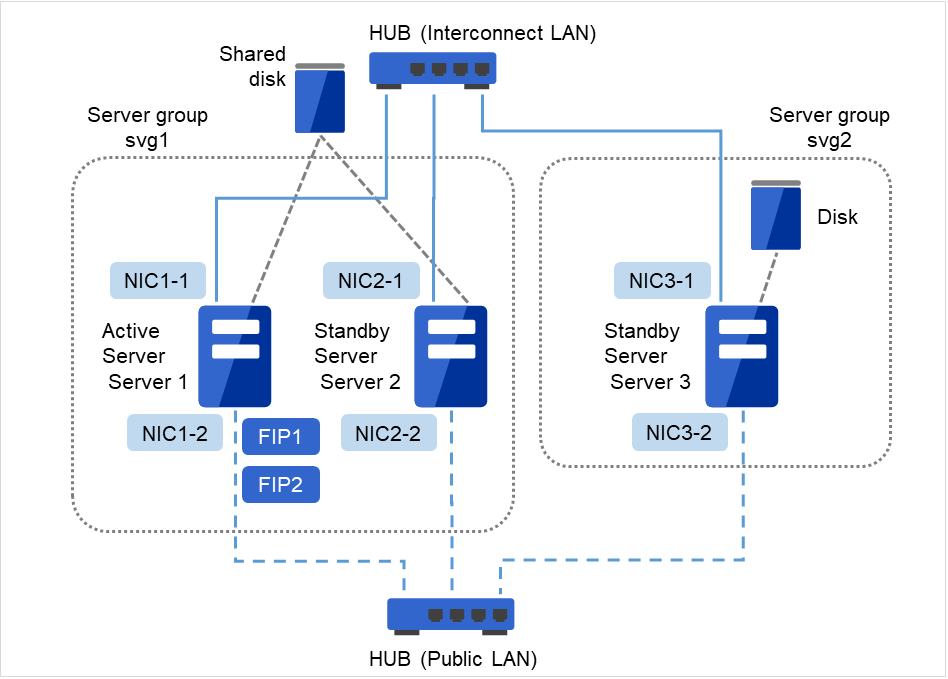

2.3.8. Example 7: Configuration using the hybrid type with 3 nodes¶

This is a configuration with three nodes that consists of two nodes connected to the shared disk and one node having a disk to be mirrored.

The servers do not necessarily have to be the same model.

Install a dedicated hub for the interconnect and for the mirror disk connect LAN.

Use a hub that is as fast as possible.

Fig. 2.10 Example of a configuration of the hybrid type with three nodes¶

Interconnect LAN cables are connected to the interconnect hub, which is not connected to any other server or client.

2.4. Checking system requirements for each EXPRESSCLUSTER module¶

EXPRESSCLUSTER X consists of two modules: EXPRESSCLUSTER Server (main module) and Cluster WebUI. Check configuration and operation requirements of each machine where these modules will be used. For details about the operating environments, see "Installation requirements for EXPRESSCLUSTER" in the "Getting Started Guide".

2.5. Determining a hardware configuration¶

Determine a hardware configuration considering an application to be duplicated on a cluster system and how a cluster system is configured. Read "3. Configuring a cluster system" before you determine a hardware configuration.

See also

Refer to "3. Configuring a cluster system."

2.6. Settings after configuring hardware¶

After you have determined the hardware configuration and installed the hardware, verify the following:

2.6.2. Mirror partition settings (Required for mirror disks)

2.6.3. Adjustment of the operating system startup time (Required)

2.6.8. Setup of SNMP service (Required if ESMPRO Server is to be used in cooperation with EXPRESSCLUSTER)

2.6.9. Setup of BMC and ipmiutil (Required for using the forced stop function of a physical machine and chassis ID lamp association)

2.6.10. Setup of a function equivalent to rsh provided by the network warning light vendor (Required)

2.6.2. Mirror partition settings (Required for mirror disks)¶

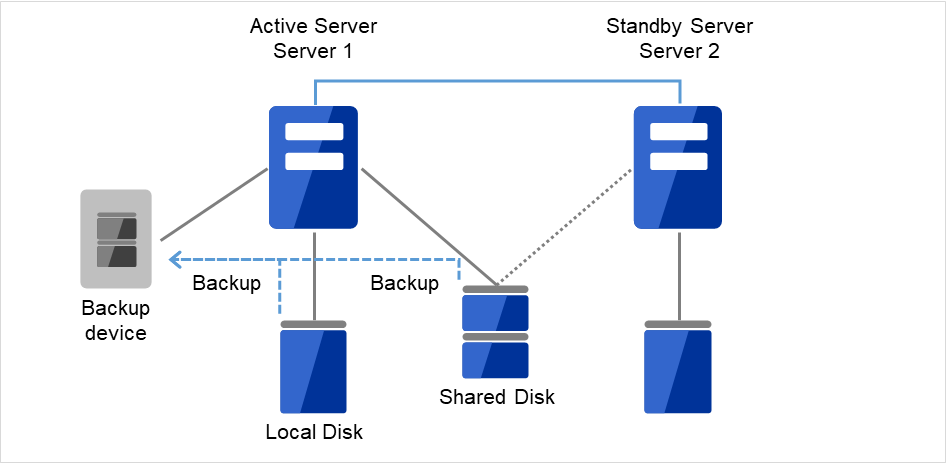

Set up partitions for mirror disk resources by following the steps below. This is required for a local disk (a disk connected to only one of the servers) to be mirrored with the shared disk in the hybrid configuration.

Note

When you cluster a single server and continue using data on the existing partitions, do not re-create the partitions. If you re-create partitions, data on the shared disks will be deleted.

Note

The partition to be allocated as described below cannot be used by mounting it on an NTFS folder.

- Allocate cluster partitions: Create the partitions to be used by the mirror disk resources/hybrid disk resources. These partitions are used for managing the status of mirror disk resources/hybrid disk resources. Create one on every server in the cluster that uses mirror resources. Create the partitions by using the "Disk Management" function of the OS, leave them as raw partitions without formatting, and configure a drive letter for them.

Note

The cluster partition should be 1GB (1,073,741,824 bytes) or larger. Leave the cluster partition as a RAW partition without formatting it.

- Allocate data partitions: Create the data partitions to be mirrored by mirror disk resources/hybrid disk resources. For mirror disk resources, create the data partitions on the two servers on which disk mirroring is performed. Format the partitions with NTFS from the "Disk Management" function of the OS and configure a drive letter.

Note

When partitions (drives) to be mirrored already exist (for example, when reinstalling EXPRESSCLUSTER), you do not need to create the partitions again. When data that should be mirrored already exists on the partitions, re-creating or formatting the partitions deletes that data.

A drive that contains the system drive and/or page file, or a drive where EXPRESSCLUSTER is installed, cannot be used as a partition for mirror disk resources.

The data partitions on both servers must be exactly the same size in bytes. If the disk geometries differ between the servers, it may not be possible to create partitions of exactly the same size. Check the partition sizes with the clpvolsz command and adjust them.

The same drive letter must be configured on the partitions on the servers.
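The size rules in the notes above can be checked mechanically once the partition sizes are known. The following is a minimal Python sketch, not an EXPRESSCLUSTER tool: the function name, the server names, and the sample byte counts are hypothetical, and the sizes are assumed to have been read beforehand (for example with the clpvolsz command, whose output format is not reproduced here).

```python
MIN_CLUSTER_PARTITION_BYTES = 1_073_741_824  # 1GB minimum for a cluster partition


def check_mirror_partitions(cluster_bytes_by_server, data_bytes_by_server):
    """Return a list of problems; an empty list means the sizes look valid.

    Both arguments map a (hypothetical) server name to a partition size in bytes.
    """
    problems = []
    for server, size in cluster_bytes_by_server.items():
        if size < MIN_CLUSTER_PARTITION_BYTES:
            problems.append(f"{server}: cluster partition is smaller than 1GB ({size} bytes)")
    # Data partitions must be exactly the same size in bytes on every server.
    if len(set(data_bytes_by_server.values())) > 1:
        problems.append(f"data partition sizes differ: {data_bytes_by_server}")
    return problems


# Hypothetical sample values for two servers.
cluster_sizes = {"server1": 1_073_741_824, "server2": 1_073_741_824}
data_sizes = {"server1": 10_737_418_240, "server2": 10_737_418_240}
print(check_mirror_partitions(cluster_sizes, data_sizes))
```

An empty list is printed when every cluster partition is at least 1GB and all data partitions match byte for byte.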

2.6.3. Adjustment of the operating system startup time (Required)¶

It is necessary to configure the time from power-on of each node in the cluster to the server operating system startup to be longer than the following:

The time from power-on of the shared disk to the point they become available.

Heartbeat timeout time (30 seconds by default.)

Adjustment of the startup time is necessary to prevent the following problems:

If the cluster system is started by powering on the shared disk and the servers, the shared disk may not finish starting before the OS starts. The OS then starts without recognizing the shared disk, and activation of disk resources fails.

A failover fails if the server whose data you want to fail over is rebooted and comes back up within the heartbeat timeout. This is because the remote server assumes that the heartbeat has continued without interruption.

Consider the durations above and adjust the operating system startup time by using the bcdedit command of Windows.
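As a rough illustration of the rule above, the following Python sketch picks a startup delay that is longer than both durations and builds a matching command line. The function names and the 45-second disk time are hypothetical. The sketch also assumes that the value being adjusted is the boot menu timeout set with `bcdedit /timeout`; the guide itself only names the bcdedit command, so confirm the appropriate setting for your environment.

```python
def minimum_startup_delay(shared_disk_ready_s, heartbeat_timeout_s=30):
    """Smallest whole number of seconds that is longer than both durations."""
    return max(shared_disk_ready_s, heartbeat_timeout_s) + 1


def bcdedit_timeout_command(delay_s):
    """Build a bcdedit command line that sets the boot menu timeout in seconds."""
    return f"bcdedit /timeout {delay_s}"


# Hypothetical example: the shared disk needs 45 seconds to become available.
delay = minimum_startup_delay(shared_disk_ready_s=45)
print(bcdedit_timeout_command(delay))  # prints "bcdedit /timeout 46"
```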

Note

2.6.4. Verification of the network settings (Required)¶

On all servers in the cluster, verify the status of the following network resources using the ipconfig or ping command.

Public LAN (used for communication with all the other machines)

LAN dedicated to interconnect (used for communication between EXPRESSCLUSTER Servers)

Host name

Note

It is not necessary to specify the IP addresses of the floating IP resources and virtual IP resources used in the cluster in the operating system.
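The ping check described in this section can be repeated for several addresses with a small script. This is a minimal sketch under stated assumptions: the function names are hypothetical, the addresses in the usage comment are placeholders, and the exit code of ping is only a coarse signal, so review the command output as well when a result looks doubtful.

```python
import subprocess


def build_ping_command(address, count=1):
    """Build a Windows ping command line (-n sets the number of echo requests)."""
    return ["ping", "-n", str(count), address]


def check_reachable(addresses, run=subprocess.run):
    """Ping each address and return a mapping of address -> True/False.

    The run parameter exists so the function can be tried without a network.
    """
    results = {}
    for address in addresses:
        completed = run(build_ping_command(address), capture_output=True)
        results[address] = completed.returncode == 0
    return results


# Example call with placeholder addresses (run this on a cluster server):
# print(check_reachable(["10.0.0.2", "192.168.0.2"]))
```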

2.6.5. Verification of the firewall settings (Required)¶

EXPRESSCLUSTER uses several port numbers for communication between the modules. For details about the port numbers to be used, see "Before installing EXPRESSCLUSTER" of "Notes and Restrictions" in the "Getting Started Guide".

2.6.6. Server clock synchronization (Recommended)¶

It is recommended to regularly synchronize the clocks of all the servers in the cluster. Configure the servers to synchronize their clocks through a protocol such as NTP on a daily basis.

Note

When the clocks of the servers are not synchronized, the system time on the server from a client's point of view may change at a failover or group move, which can cause the application used in this system to fail. The log timestamps also differ between servers, which delays failure analysis when an error occurs.

Note

If the date or time setting on the OS is changed while a System monitor resource or a Process resource monitor resource is operating, the System monitor resource or the Process resource monitor resource may not operate normally.

2.6.7. Power saving function - OFF (Required)¶

In EXPRESSCLUSTER, power saving function (for example, standby or hibernation) with OnNow, ACPI, and/or APM functions cannot be used. Make sure to turn off the power saving function.

2.6.8. Setup of SNMP service (Required if ESMPRO Server is to be used in cooperation with EXPRESSCLUSTER)¶

SNMP service is required if ESMPRO Server is to be used in cooperation with EXPRESSCLUSTER. Set up SNMP service before installing EXPRESSCLUSTER.

2.6.9. Setup of BMC and ipmiutil (Required for using the forced stop function of a physical machine and chassis ID lamp association)¶

For using the forced stop function of a physical machine and Chassis ID lamp association, configure the Baseboard Management Controller (BMC) of the servers to enable the communication between IP addresses of LAN ports for managing BMC and IP addresses used by the OS. These functions are not available when BMC is not installed on the server or when the network for managing BMC is disabled. For information on how to configure the BMC, refer to the manuals of your server.

Use ipmiutil version 2.0.0 to 3.0.8.
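A version string can be compared against this range with a few lines of Python. The sketch below is illustrative only: the function names are hypothetical, it assumes a three-part dotted version string, it treats both ends of the range as inclusive, and it does not show how to obtain the installed version.

```python
SUPPORTED_MIN = (2, 0, 0)
SUPPORTED_MAX = (3, 0, 8)


def parse_version(text):
    """Convert a dotted version string such as '3.0.8' to a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def is_supported_ipmiutil(version_text):
    """True if the version lies in the inclusive range 2.0.0 to 3.0.8."""
    return SUPPORTED_MIN <= parse_version(version_text) <= SUPPORTED_MAX


print(is_supported_ipmiutil("3.0.8"))  # True
print(is_supported_ipmiutil("3.1.0"))  # False
```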

EXPRESSCLUSTER uses the hwreset command or ireset command, and the alarms command or ialarms command of ipmiutil. To execute these commands without specifying a path, include the path of the ipmiutil executable file in the system environment variable PATH, or copy the executable file to a folder that is already included in PATH (for example, the bin folder in the folder where EXPRESSCLUSTER is installed).

Because EXPRESSCLUSTER does not use the function that requires the IPMI driver, it is not necessary to install the IPMI driver.

To control BMC via LAN with the above commands, an IPMI account with Administrator privilege is required in the BMC of each server. When you use an NEC Express5800/100 series server, use User ID 4 or later to add or change the account, because User IDs 3 and earlier are reserved by other tools. Use a tool that complies with the IPMI standards, such as IPMITool, to check and change the account configuration.

2.6.10. Setup of a function equivalent to rsh provided by the network warning light vendor (Required)¶

For using the network warning light, set up a command equivalent to rsh supported by the warning light vendor.

3. Configuring a cluster system¶

This chapter provides information required to configure a cluster including requirements of applications to be duplicated, cluster topology, and explanation on resources constituting a cluster.

This chapter covers:

3.1. Configuring a cluster system¶

This chapter provides information necessary to configure a cluster system, including the following topics:

Determining a cluster system topology

Determining applications to be duplicated

Creating the cluster configuration data

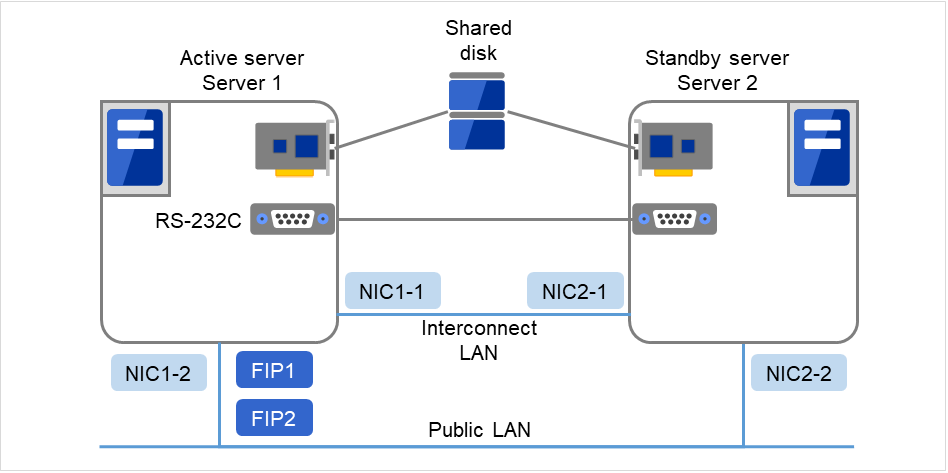

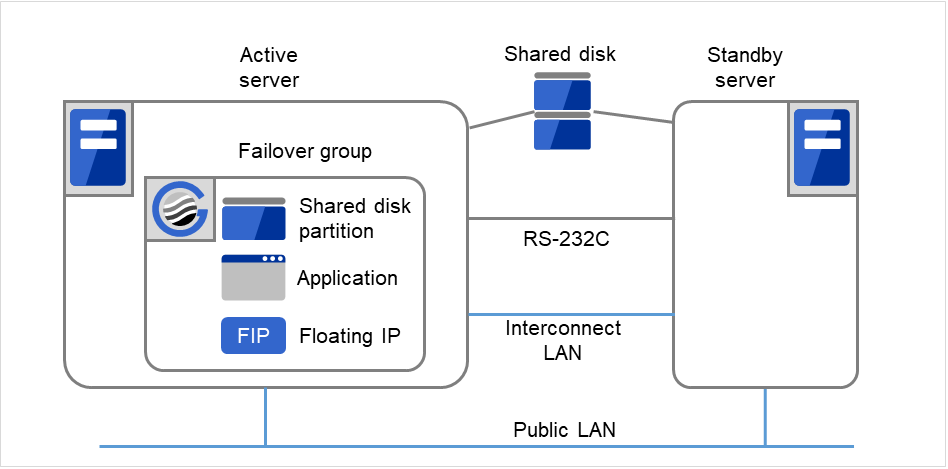

The following is a typical example of cluster environment with 2 nodes where standby is uni-directional.

Fig. 3.1 Example of a 2-node and uni-directional standby cluster environment¶

| Item | Value |
|---|---|
| FIP1 | 10.0.0.11 (to be accessed by Cluster WebUI clients) |
| FIP2 | 10.0.0.12 (to be accessed by operation clients) |
| NIC1-1 | 192.168.0.1 |
| NIC1-2 | 10.0.0.1 |
| NIC2-1 | 192.168.0.2 |
| NIC2-2 | 10.0.0.2 |
| Serial port | COM1 |

Shared disk

| Item | Value |
|---|---|
| Drive letter of the disk heartbeat | Q |
| File system | RAW |
| Drive letter of the switchable partition for resources | R |
| File system | NTFS |
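When planning addresses like those in this example, it can help to confirm that each address falls in the intended network and that none is assigned twice. The Python sketch below uses the addresses from the example. The /24 prefix lengths, the labels "public" and "interconnect" for the two ranges, and the function names are assumptions made for illustration; they are not stated in this guide.

```python
import ipaddress

# Prefix lengths and the public/interconnect labels are assumptions.
PUBLIC_LAN = ipaddress.ip_network("10.0.0.0/24")
INTERCONNECT_LAN = ipaddress.ip_network("192.168.0.0/24")

# Addresses copied from the example configuration.
ADDRESSES = {
    "FIP1": "10.0.0.11",
    "FIP2": "10.0.0.12",
    "NIC1-1": "192.168.0.1",
    "NIC1-2": "10.0.0.1",
    "NIC2-1": "192.168.0.2",
    "NIC2-2": "10.0.0.2",
}


def network_of(name):
    """Return 'public', 'interconnect', or 'unknown' for a named address."""
    address = ipaddress.ip_address(ADDRESSES[name])
    if address in PUBLIC_LAN:
        return "public"
    if address in INTERCONNECT_LAN:
        return "interconnect"
    return "unknown"


def duplicated_addresses():
    """Return the addresses that are assigned to more than one name."""
    seen, duplicates = set(), set()
    for value in ADDRESSES.values():
        (duplicates if value in seen else seen).add(value)
    return duplicates


for name in ADDRESSES:
    print(name, network_of(name))
print("duplicates:", duplicated_addresses())
```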

3.2. Determining a cluster topology¶

EXPRESSCLUSTER supports multiple cluster topologies. In a uni-directional standby cluster system, one server acts as the active server and the other as the standby server. In a multi-directional standby cluster system, both servers act as active and standby servers for different operations.

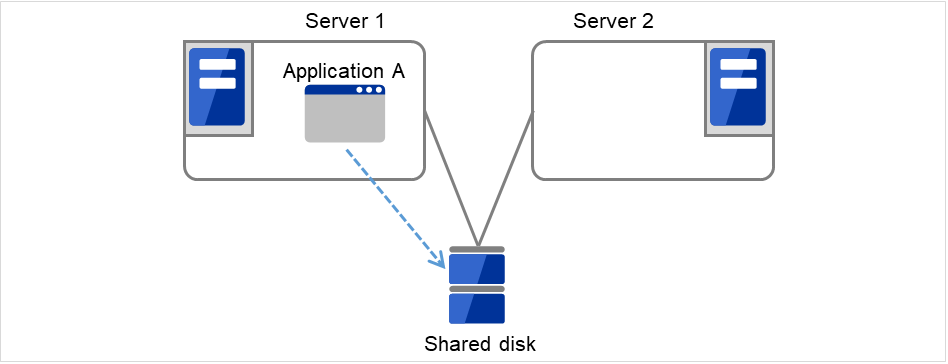

- Uni-directional standby cluster system: In this operation, only one application runs on an entire cluster system. There is no performance deterioration even when a failover occurs. However, resources in a standby server will be wasted.

Fig. 3.2 Uni-directional standby cluster system¶

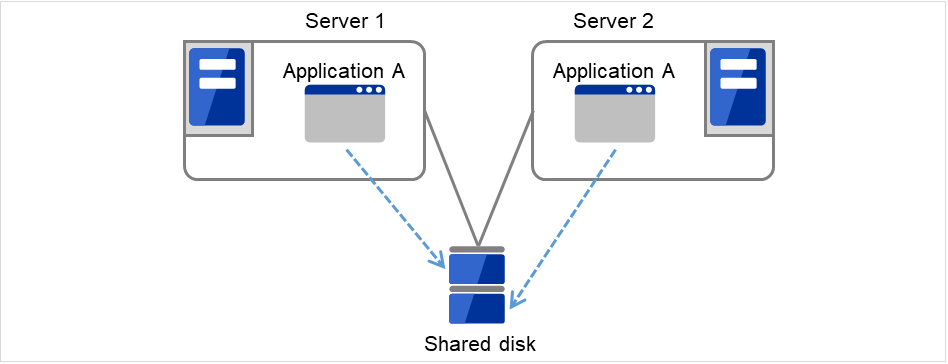

- Multi-directional standby cluster system with the same application: In this operation, the same application runs on more than one server simultaneously in a cluster system. Applications used in this system must support multi-directional standby operations.

Fig. 3.3 Multi-directional standby cluster system with the same application¶

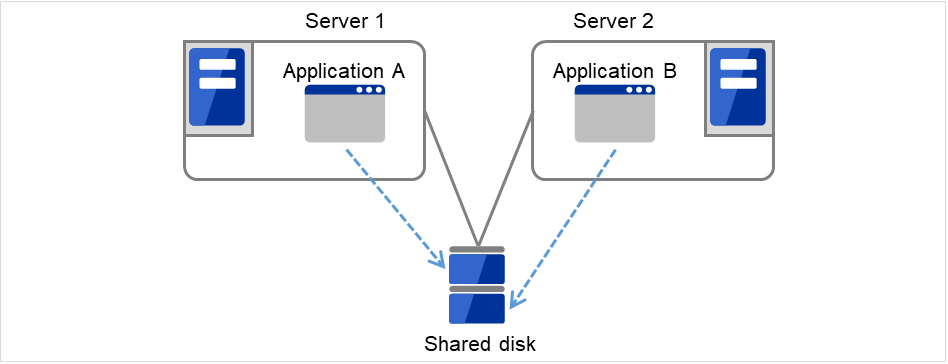

- Multi-directional standby cluster system with different applications: In this operation, different applications run on different servers and stand by for each other. Resources will not be wasted during normal operation; however, two applications run on one server after failing over and system performance deteriorates.

Fig. 3.4 Multi-directional standby cluster system with different applications¶

3.2.1. Failover in uni-directional standby cluster¶

On a uni-directional standby cluster system, the number of groups for an operation service is limited to one as described in the diagrams below:

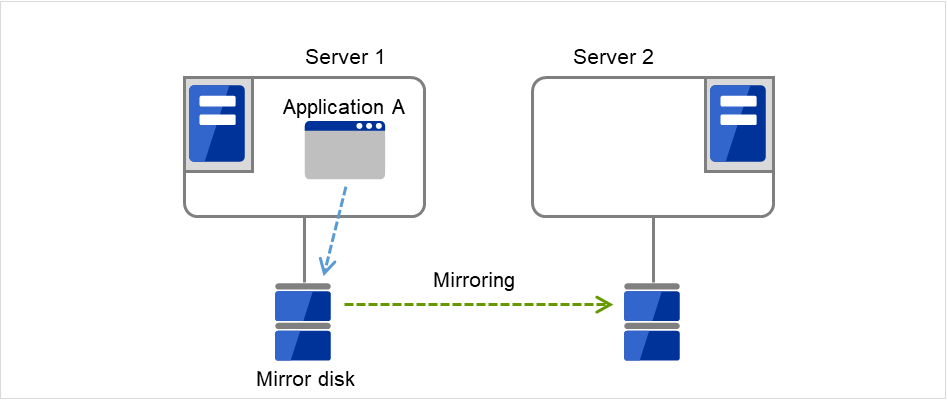

3.2.1.2. When mirror disks are used¶

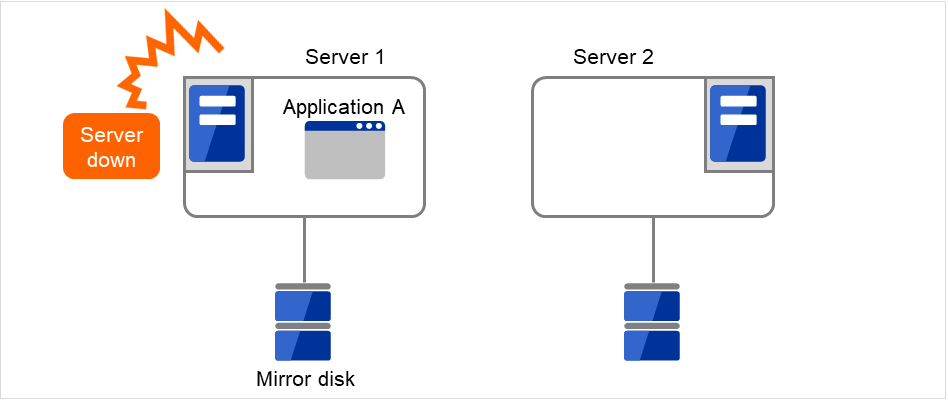

1. Server 1 runs Application A. Application A can be run on only one server in the same cluster.

Fig. 3.9 Uni-directional standby cluster with mirror disks (1): in normal operation¶

Server 1 crashes due to some error.

Fig. 3.10 Uni-directional standby cluster with mirror disks (2): when the server crashes¶

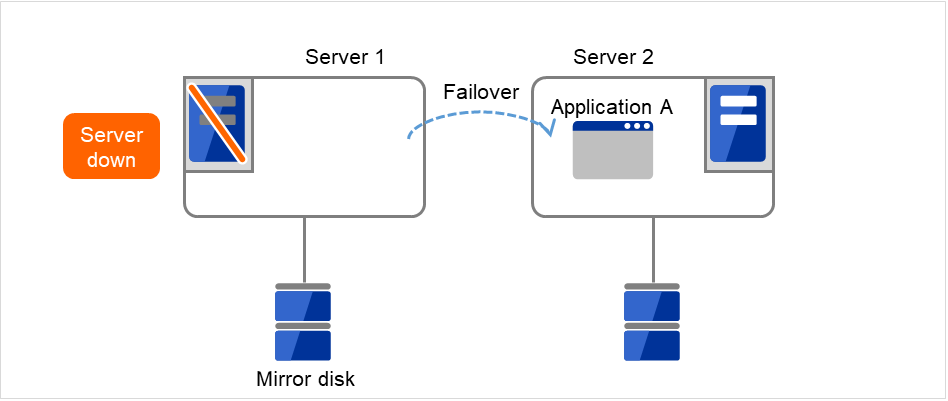

The application is failed over from Server 1 to Server 2.

Fig. 3.11 Uni-directional standby cluster with mirror disks (3): during a failover¶

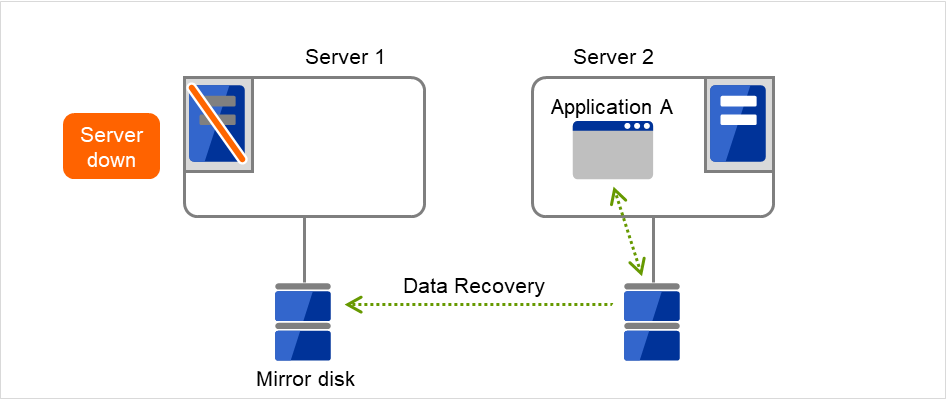

To resume the application, data is recovered from Server 2's mirror disk.

Fig. 3.12 Uni-directional standby cluster with mirror disks (4): during data recovery¶

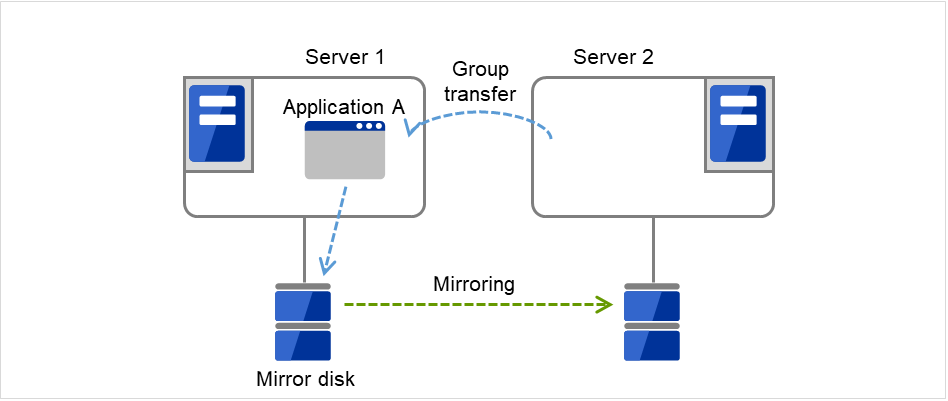

After Server 1 is restored, a group transfer can be made for Application A to be returned from Server 2 to Server 1.

Fig. 3.13 Uni-directional standby cluster with mirror disks (5): after the server is restored¶

3.2.2. Failover in multi-directional standby cluster¶

On a multi-directional standby cluster system, different applications run on the servers. If a failover occurs on one server, multiple applications start to run on the other server. As a result, the failover destination server is more heavily loaded than during normal operation and performance decreases.

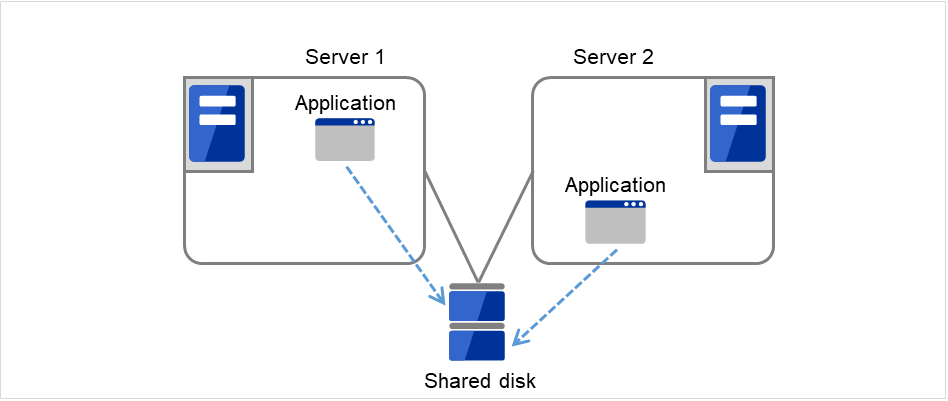

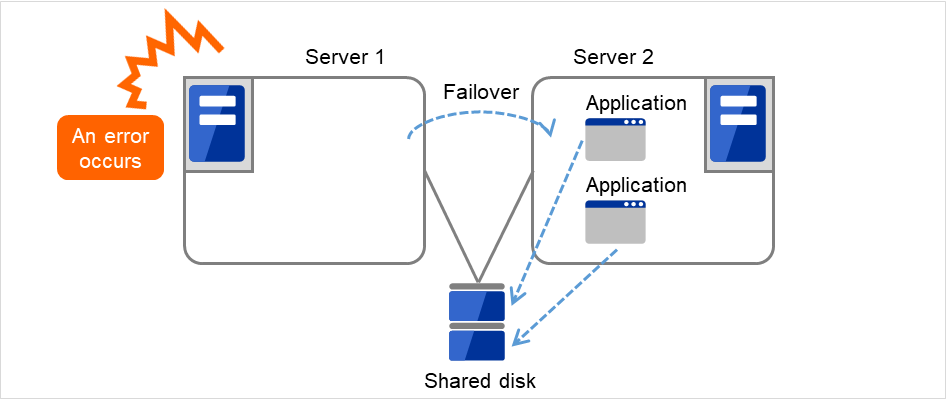

3.2.2.1. When a shared disk is used¶

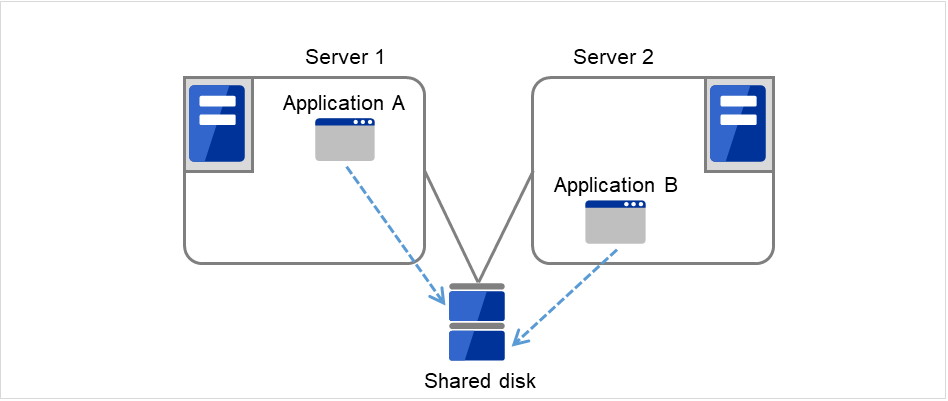

Server 1 runs Application A while Server 2 runs Application B.

Fig. 3.14 Multi-directional standby cluster with a shared disk (1): in normal operation¶

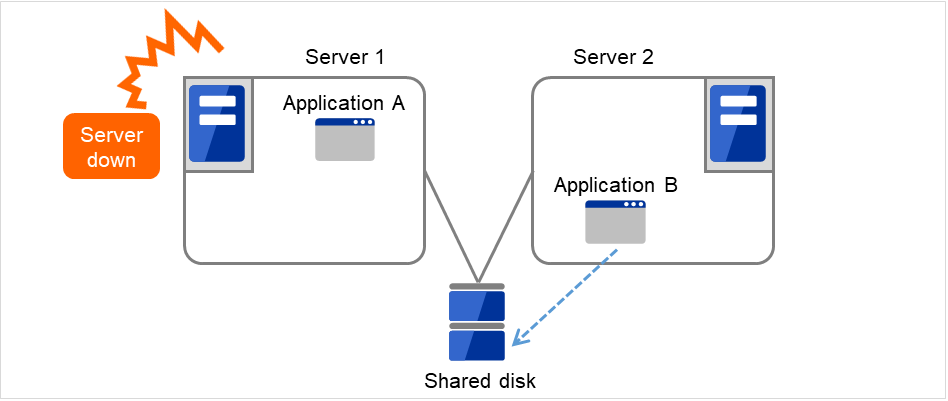

Server 1 crashes due to some error.

Fig. 3.15 Multi-directional standby cluster with a shared disk (2): when the server crashes¶

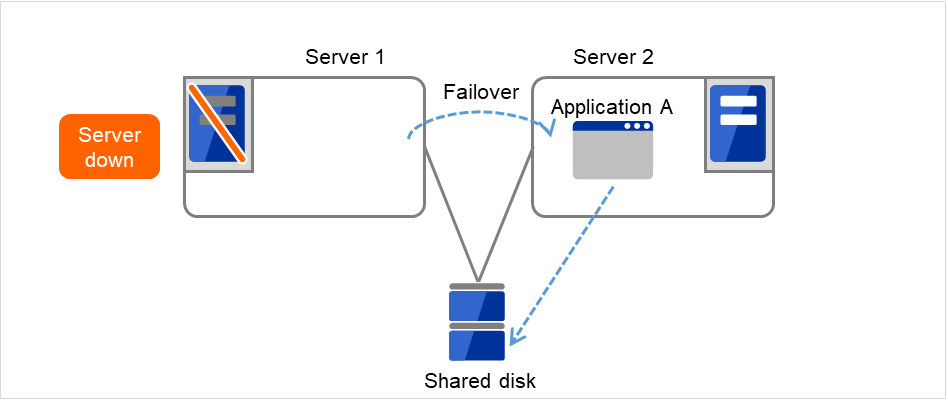

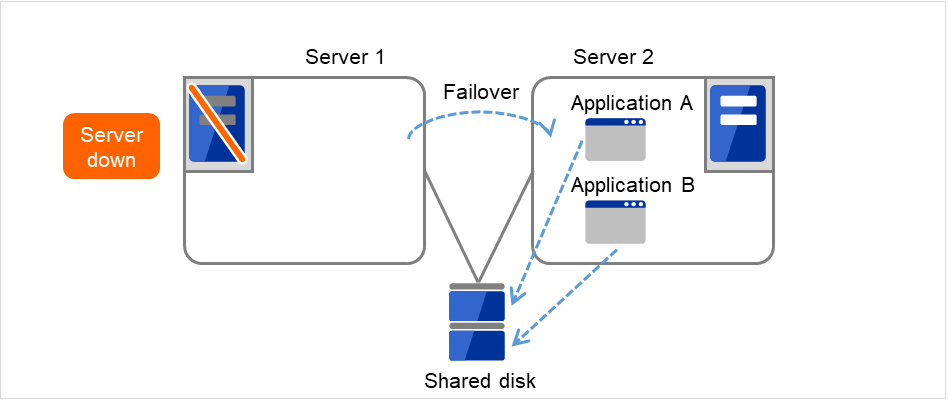

Application A is failed over from Server 1 to Server 2.

Fig. 3.16 Multi-directional standby cluster with a shared disk (3): during a failover¶

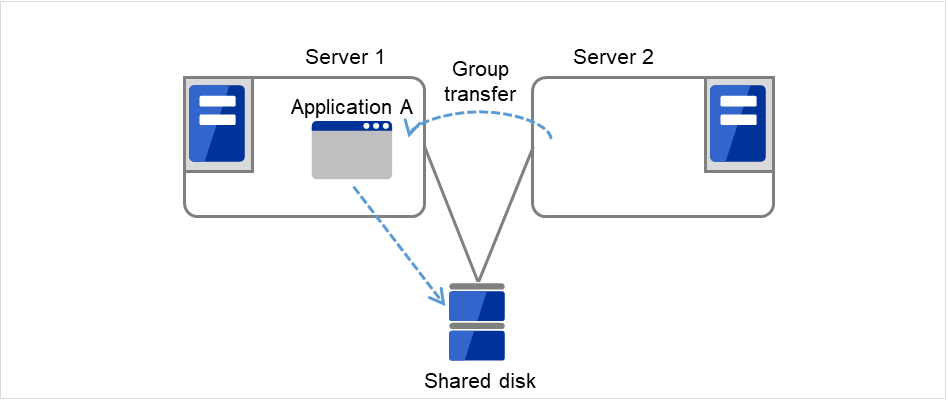

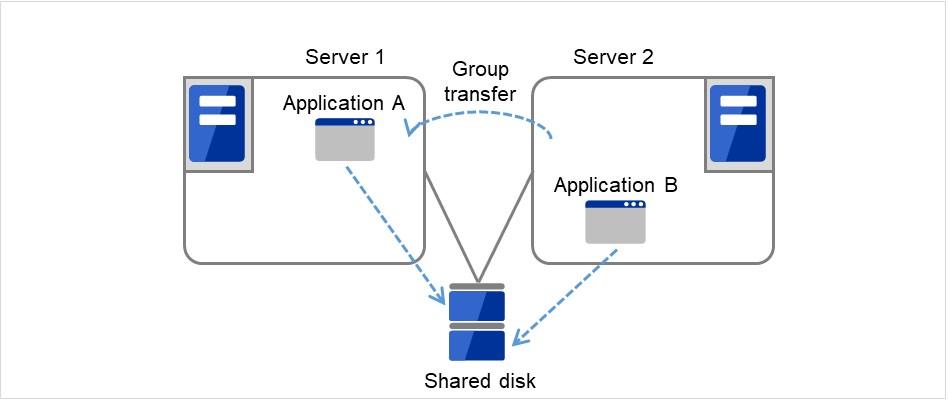

After Server 1 is restored, a group transfer can be made for Application A to be returned from Server 2 to Server 1.

Fig. 3.17 Multi-directional standby cluster with a shared disk (4): after the server is restored¶

3.2.2.2. When mirror disks are used¶

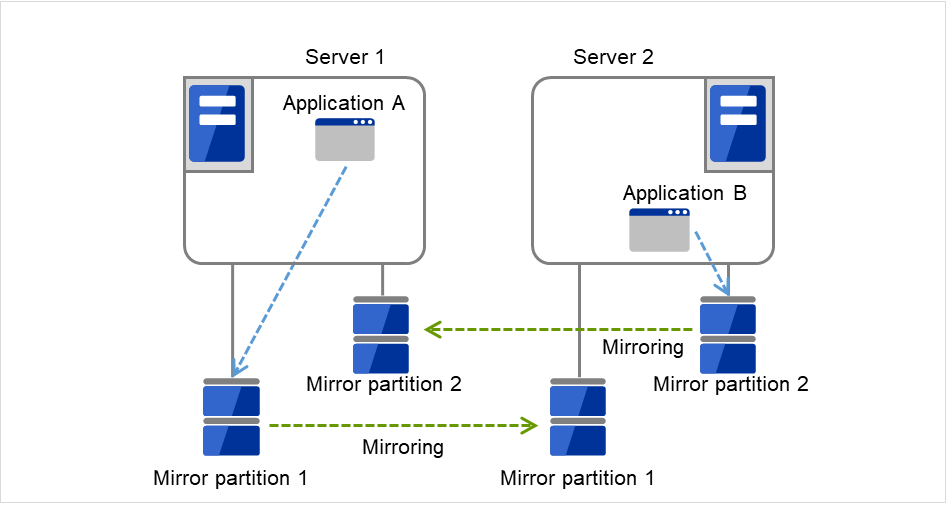

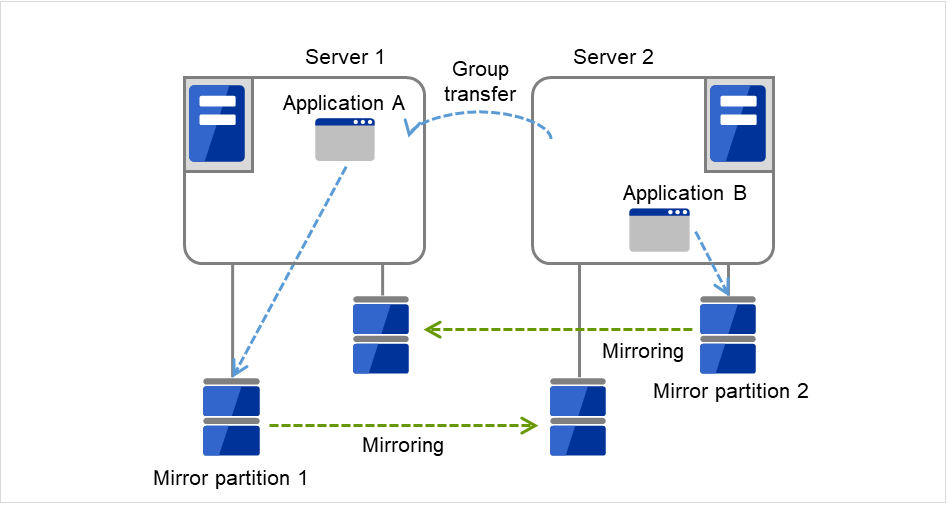

Server 1 runs Application A while Server 2 runs Application B.

Fig. 3.18 Multi-directional standby cluster with mirror disks (1): in normal operation¶

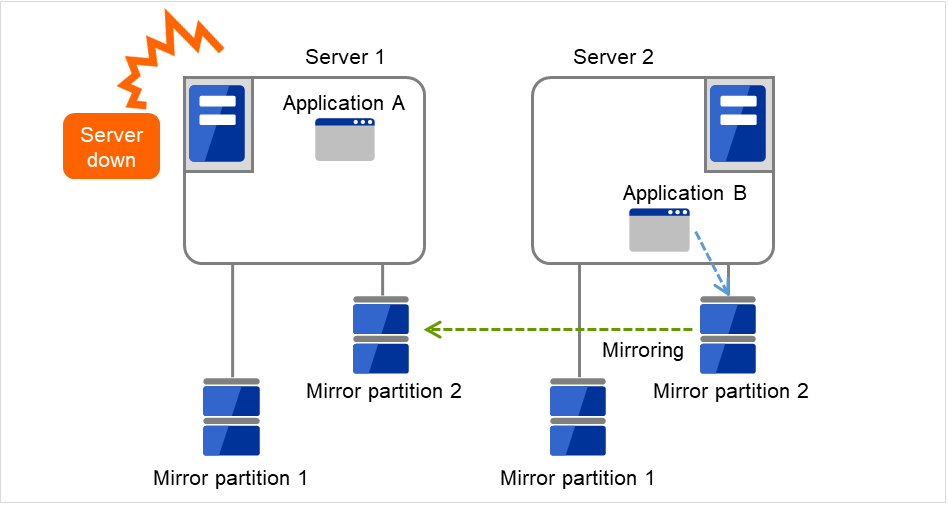

Server 1 crashes due to some error.

Fig. 3.19 Multi-directional standby cluster with mirror disks (2): when the server crashes¶

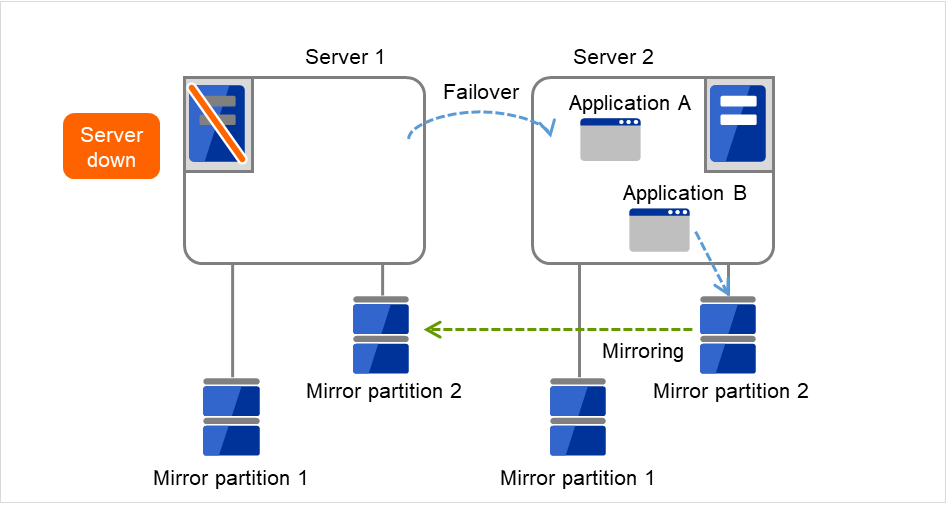

Application A is failed over from Server 1 to Server 2.

Fig. 3.20 Multi-directional standby cluster with mirror disks (3): during a failover¶

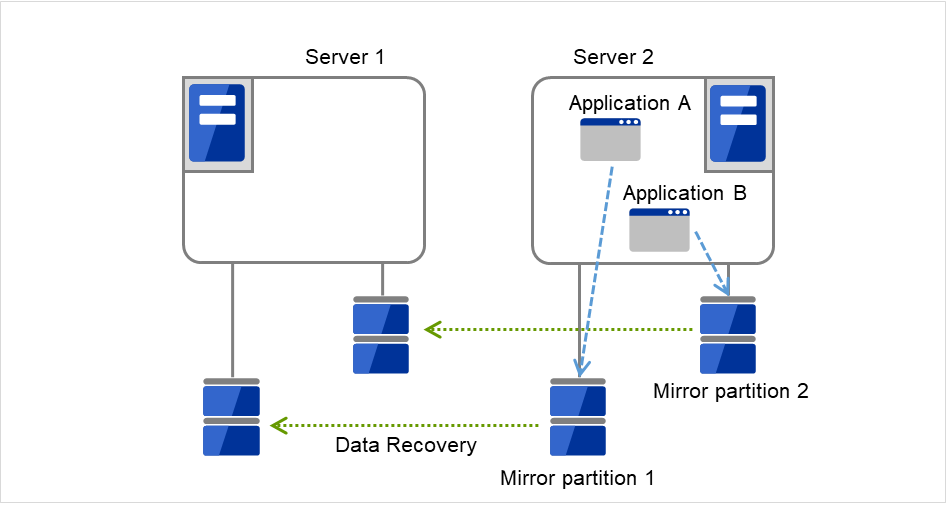

To resume Application A, data is recovered from Server 2's Mirror partition 1.

Fig. 3.21 Multi-directional standby cluster with mirror disks (4): during data recovery¶

After Server 1 is restored, a group transfer can be made for Application A to be returned from Server 2 to Server 1.

Fig. 3.22 Multi-directional standby cluster with mirror disks (5): after the server is restored¶

3.3. Determining applications to be duplicated¶

When you determine applications to be duplicated, study candidate applications taking what is described below into account to see whether or not they should be clustered in your EXPRESSCLUSTER cluster system.

3.3.1. Server applications¶

3.3.1.1. Note 1: Data recovery after an error¶

If an application was updating a file when an error occurred, the file update may be incomplete when the standby server accesses that file after the failover.

The same problem can happen on a non-clustered server (single server) if it goes down and then is rebooted. In principle, applications should be ready to handle this kind of error. A cluster system should allow recovery from this kind of error without human intervention (from a script).
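One common way for an application to tolerate an interrupted update is to write the new contents to a temporary file and then rename it over the original, so that a reader sees either the old file or the new one. The Python sketch below illustrates that general technique; it is not an EXPRESSCLUSTER feature. The function names and the ".tmp" suffix are hypothetical, and whether the rename is atomic depends on the operating system and file system in use.

```python
import os


def atomic_write(path, data):
    """Write data to a temporary file, then rename it over path in one step."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as handle:
        handle.write(data)
        handle.flush()
        os.fsync(handle.fileno())  # push the bytes to disk before the rename
    os.replace(tmp_path, path)  # swap the complete file into place


def recover(path):
    """Run at application start (for example after a failover).

    Removes a leftover temporary file from an interrupted update and
    returns True if one was found; the last complete file is kept as-is.
    """
    tmp_path = path + ".tmp"
    if os.path.exists(tmp_path):
        os.remove(tmp_path)
        return True
    return False
```

A start script on the standby server could call `recover` for each data file before starting the application.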

3.3.1.2. Note 2: Application termination¶

When EXPRESSCLUSTER stops or transfers (performs online failback of) a group for an application, it unmounts the file system used by the application group. Therefore, you have to issue an exit command to the applications so that they stop accessing all files on the shared disk or mirror disk.

Typically, you give an exit command to applications in their stop scripts; however, you have to pay attention if an exit command completes asynchronously with termination of the application.
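When the exit command returns before the application has actually terminated, the stop script can wait until the application is really gone. The following Python sketch shows one way to poll with a timeout. The `is_running` callable is hypothetical: it stands for whatever check fits your application (for example, testing whether its process still exists), and the default timeout and interval are placeholder values.

```python
import time


def wait_until_stopped(is_running, timeout_s=60.0, interval_s=1.0):
    """Poll is_running() until it returns False or the timeout expires.

    Returns True if the application stopped in time, False otherwise.
    """
    deadline = time.monotonic() + timeout_s
    while is_running():
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
    return True
```

A stop script would issue the exit command first, call `wait_until_stopped`, and treat a False result as a failure to stop.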

3.3.1.3. Note 3: Location to store the data¶

EXPRESSCLUSTER can pass the following types of data between servers:

Data in the switchable partition on the disk resource, or data in the data partition on the mirror disk resource/hybrid disk resource.

| Data type | Example | Where to store |
|---|---|---|
| Data to be shared among servers | User data, etc. | Switching partition of the disk resource or data partition of the mirror disk resource/hybrid disk resource |
| Data specific to a server | Programs, configuration data | On server's local disks |

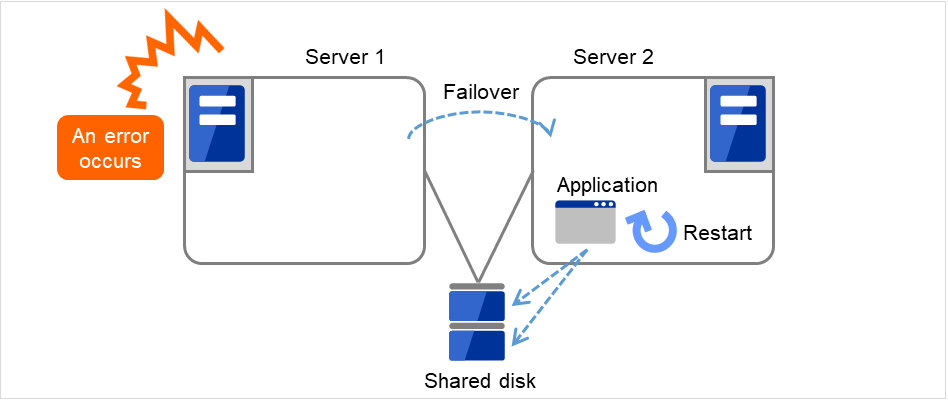

3.3.1.4. Note 4: Multiple application service groups¶

When you run the same application service in the multi-directional standby operation, you have to assume (in case of degeneration due to a failure) that multiple application groups are run by the same application on a server.

Applications should be able to take over the passed resources by one of the methods described in the diagrams below. A single server is responsible for running multiple application groups.

The figures below apply equally to configurations with a shared disk and configurations with mirror disks.

Fig. 3.23 Application running normally on each server in a multi-directional standby cluster¶

Fig. 3.24 Starting up multiple instances¶

Fig. 3.25 Restarting the application¶

Fig. 3.26 Adding resources dynamically¶

3.3.1.5. Note 5: Mutual interference and compatibility with applications¶

Sometimes mutual interference between applications and EXPRESSCLUSTER functions or the operating system functions required to use EXPRESSCLUSTER functions prevents applications or EXPRESSCLUSTER from working properly.

Generally, you can assume that when EXPRESSCLUSTER starts an application, the switchable partition or data partition that the application needs to access is already accessible.

3.3.2. Configuration relevant to the notes¶

What you need to consider differs depending on which standby cluster system is selected for an application. Following is the notes for each cluster system. The numbers corresponds to the numbers of notes (1 through 5) described below:

Note for uni-directional standby [Active-Standby]: 1, 2, 3, and 5

Note for multi-directional standby [Active-Active]: 1, 2, 3, 4, and 5

Note for co-existing behaviors: 5 (Applications co-exist and run. The cluster system does not fail over the applications.)

3.3.3. Solutions to the problems relevant to the notes¶

| Problems | Solution | Note to refer |
|---|---|---|
| When an error occurs while updating a data file, the application does not work properly on the standby server. | Modify the program, or add/modify script source to run a process that recovers the data being updated during failover. | Note 1: Data recovery after an error |
| The application keeps accessing shared disk or mirror disk for a certain period of time even after it is stopped. | Execute the sleep command during stop script execution. | Note 2: Application termination |
| The same application cannot be started more than once on one server. | In multi-directional operation, reboot the application at failover and pass the shared data. | Note 3: Location to store the data |

3.3.4. How to determine a cluster topology¶

Carefully read this chapter and determine the cluster topology that suits your needs:

When to start which application

Actions that are required at startup and failover

Data to be placed in switchable partitions or data partitions

3.4. Planning a failover group¶

A failover group (hereafter referred to as a group) is a set of resources required to perform an independent operation service in a cluster system. Failover takes place in units of groups. Each group has its own group name and the attributes of its group resources.

Fig. 3.27 Failover group and group resources¶

Resources in each group are handled in units of the group. If a failover occurs in group1, which has Disk resource1 and Floating IP resource1, Disk resource1 and Floating IP resource1 fail over together. (Disk resource1 never fails over alone.) Likewise, a resource never belongs to more than one group.
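The group behavior described above can be sketched as a small data model. This is only an illustration: the class `FailoverGroup`, the helper `find_shared_resources`, and the server names are hypothetical and are not part of EXPRESSCLUSTER.

```python
from dataclasses import dataclass, field


@dataclass
class FailoverGroup:
    """Hypothetical model of a failover group: its resources always move together."""
    name: str
    current_server: str
    resources: list[str] = field(default_factory=list)

    def fail_over(self, target_server: str) -> dict[str, str]:
        """Move the whole group and return the server each resource ends up on."""
        self.current_server = target_server
        return {resource: self.current_server for resource in self.resources}


def find_shared_resources(groups: list[FailoverGroup]) -> set[str]:
    """Return resources listed more than once, which the text above rules out."""
    seen: set[str] = set()
    duplicates: set[str] = set()
    for group in groups:
        for resource in group.resources:
            if resource in seen:
                duplicates.add(resource)
            seen.add(resource)
    return duplicates


group1 = FailoverGroup("group1", "server1", ["Disk resource1", "Floating IP resource1"])
placement = group1.fail_over("server2")
```

After the call, every resource in `placement` maps to the same server, which mirrors the rule that a disk resource never fails over alone.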

3.5. Considering group resources¶

For a failover to occur in a cluster system, a group that works as a unit of failover must be created. A group consists of group resources. In order to create an optimal cluster, you must understand which group resources to add to the group you create, and have a clear vision of your operation.

See also

For details on each resource, refer to "Group resource details" in the "Reference Guide".

The following are currently supported group resources:

| Group Resource Name | Abbreviation |
|---|---|
| Application resource | appli |
| CIFS resource | cifs |
| Dynamic DNS resource | ddns |
| Floating IP resource | fip |
| Hybrid disk resource | hd |
| Mirror disk resource | md |
| NAS resource | nas |
| Registry synchronization resource | regsync |
| Script resource | script |
| Disk resource | sd |
| Service resource | service |
| Print spooler resource | spool |
| Virtual computer name resource | vcom |
| Virtual IP resource | vip |
| VM resource | vm |
| AWS Elastic IP resource | awseip |
| AWS Virtual IP resource | awsvip |
| AWS DNS resource | awsdns |
| Azure probe port resource | azurepp |
| Azure DNS resource | azuredns |
| Google Cloud virtual IP resource | gcvip |
| Google Cloud DNS resource | gcdns |
| Oracle Cloud virtual IP resource | ocvip |

3.6. Understanding monitor resources¶

- Always monitors

Monitoring is performed from when the cluster is started up until it is shut down.

- Monitors while activated

Monitoring is performed from when a group is activated until it is deactivated.
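The two monitoring timings can be expressed as a simple predicate. The following is a minimal sketch; the names `MonitorTiming` and `is_monitoring` are hypothetical, and the sketch assumes that a group can be active only while the cluster is running.

```python
from enum import Enum


class MonitorTiming(Enum):
    ALWAYS = "Always monitors"
    WHILE_ACTIVATED = "Monitors while activated"


def is_monitoring(timing: MonitorTiming, cluster_running: bool, group_active: bool) -> bool:
    """Return True if a monitor resource with the given timing is currently monitoring."""
    if not cluster_running:
        # Neither timing monitors before cluster startup or after shutdown.
        return False
    if timing is MonitorTiming.ALWAYS:
        return True
    # WHILE_ACTIVATED: only between group activation and deactivation.
    return group_active
```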

See also

For the details of each resource, see "Monitor resource details" in the "Reference Guide".

The following are currently supported monitor resources:

Monitor Resource Name |

Abbreviation |

Always monitors |

Monitors While activated |

|---|---|---|---|

Application monitor resource |

appliw |

✓ |

|

CIFS monitor resource |

cifsw |

✓ |

|

DB2 monitor resource |

db2w |

✓ |

|

Dynamic DNS monitor resource |

ddnsw |

✓ |

|

Disk RW monitor resource |

diskw |

✓ |

|

Floating IP monitor resource |

fipw |

✓ |

|

FTP monitor resource |

ftpw |

✓ |

|

Custom monitor resource |

genw |

✓ |

|

Hybrid disk monitor resource |

hdw |

✓ |

|

Hybrid disk TUR monitor resource |

hdtw |

✓ |

|

HTTP monitor resource |

httpw |

✓ |

|

IMAP4 monitor resource |

imap4w |

✓ |

|

IP monitor resource |

ipw |

✓ |

✓ |

Mirror disk monitor resource |

mdw |

✓ |

|

Mirror connect monitor resource |

mdnw |

✓ |

|

NIC Link UP/Down monitor resource |

miiw |

✓ |

✓ |

Multi target monitor resource |

mtw |

✓ |

|

NAS monitor resource |

nasw |

✓ |

|

ODBC monitor resource |

odbcw |

✓ |

|

Oracle monitor resource |

oraclew |

✓ |

|

WebOTX monitor resource |

otxw |

✓ |

|

POP3 monitor resource |

pop3w |

✓ |

|

PostgreSQL monitor resource |

psqlw |

✓ |

|

Registry synchronization monitor resource |

regsyncw |

✓ |

|

Disk TUR monitor resource |

sdw |

✓ |

|

Service monitor resource |

servicew |

✓ |

|

SMTP monitor resource |

smtpw |

✓ |

|

Print spooler monitor resource |

spoolw |

✓ |

|

SQL Server monitor resource |

sqlserverw |

✓ |

|

Tuxedo monitor resource |

tuxw |

✓ |

|

Virtual computer name monitor resource |

vcomw |

✓ |

|

Virtual IP monitor resource |

vipw |

✓ |

|

WebSphere monitor resource |

wasw |

✓ |

|

WebLogic monitor resource |

wlsw |

✓ |

|

VM monitor resource |

vmw |

✓ |

|

Message receive monitor resource |

mrw |

✓ |

|

JVM monitor resource |

jraw |

✓ |

✓ |

System monitor resource |

sraw |

✓ |

|

Process resource monitor resource |

psrw |

✓ |

|

Process name monitor resource |

psw |

✓ |

✓ |

User mode monitor resource |

userw |

✓ |

|

AWS Elastic IP monitor resource |

awseipw |

✓ |

|

AWS Virtual IP monitor resource |

awsvipw |

✓ |

|

AWS AZ monitor resource |

awsazw |

✓ |

|

AWS DNS monitor resource |

awsdnsw |

✓ |

|

Azure probe port monitor resource |

azureppw |

✓ |

|

Azure load balance monitor resource |

azurelbw |

✓ |

|

Azure DNS monitor resource |

azurednsw |

✓ |

|

Google Cloud virtual IP monitor resource |

gcvipw |

✓ |

|

Google Cloud load balance monitor resource |

gclbw |

✓ |

|

Google Cloud DNS monitor resource |

gcdnsw |

✓ |

|

Oracle Cloud virtual IP monitor resource |

ocvipw |

✓ |

|

Oracle Cloud load balance monitor resource |

oclbw |

✓ |

3.7. Understanding heartbeat resources¶

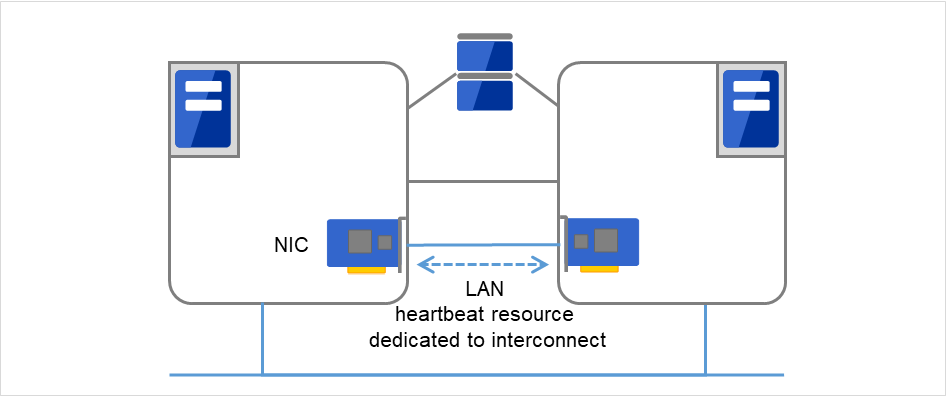

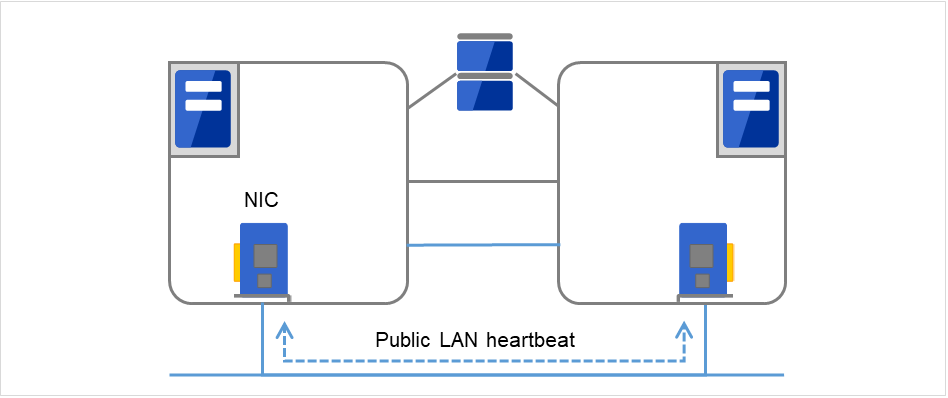

Servers in a cluster system monitor whether or not other servers in the cluster are active.

Kernel mode LAN heartbeat (primary interconnect)

Fig. 3.28 Kernel mode LAN heartbeat (primary interconnect)¶

Kernel mode LAN heartbeat (secondary interconnect)

Fig. 3.29 Kernel mode LAN heartbeat (secondary interconnect)¶

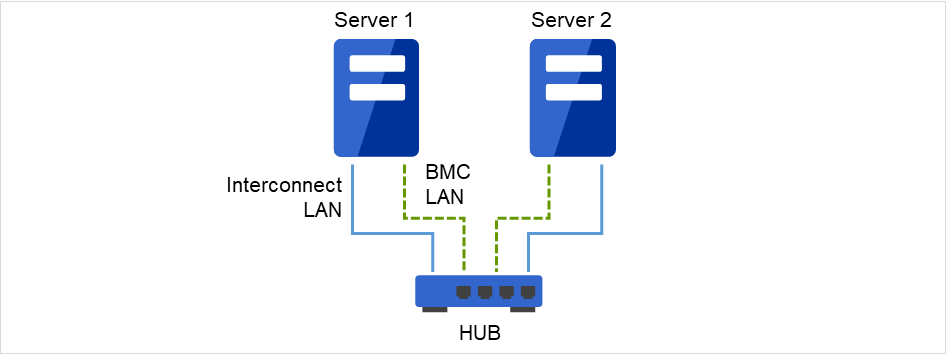

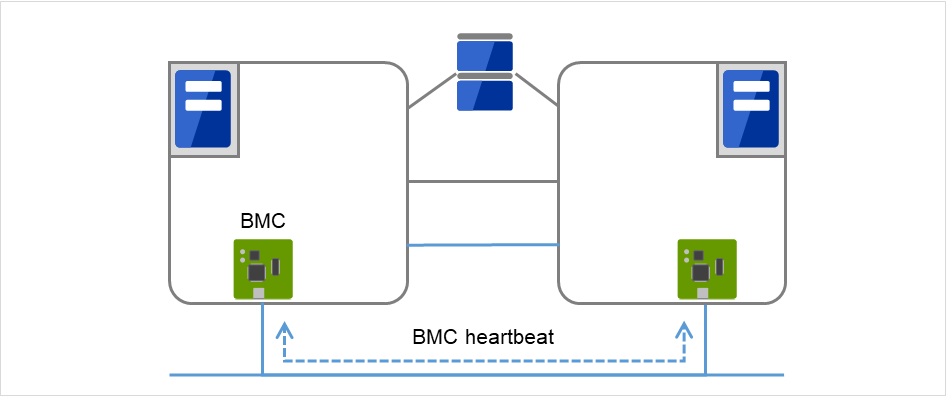

BMC heartbeat

Fig. 3.30 BMC heartbeat¶

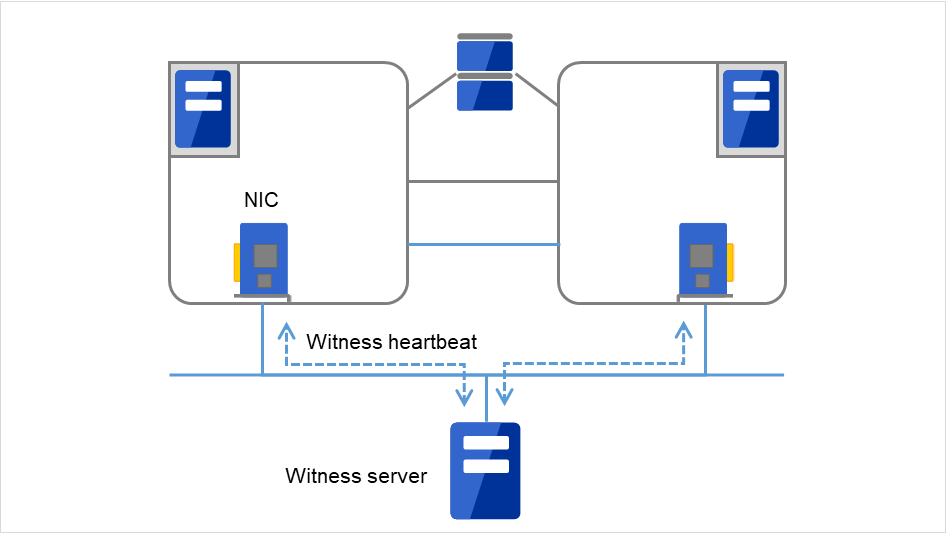

Witness heartbeat

Fig. 3.31 Witness heartbeat¶

| Type of Heartbeat Resource | Abbreviation | Functional Overview |
|---|---|---|
| Kernel mode LAN heartbeat resource (1), (2) | lankhb | A kernel mode module uses a LAN to monitor whether or not servers are active. |
| BMC heartbeat (3) | bmchb | A module uses BMC to monitor whether or not servers are active. |
| Witness heartbeat resource (4) | witnesshb | A module uses the Witness server to monitor whether or not servers are active. |

At least one kernel mode LAN heartbeat resource needs to be set. Setting up more than two is recommended.

Set up one or more kernel mode LAN heartbeat resources to be used among all the servers.

3.8. Understanding network partition resolution resources¶

Network partitioning refers to the status where all communication channels have problems and the network between servers is partitioned.

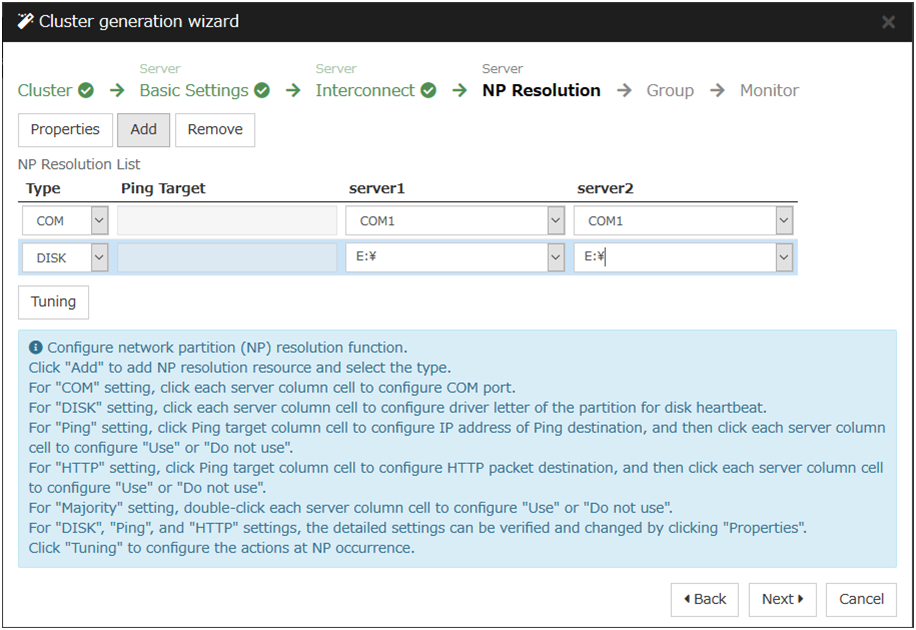

In a cluster system that is not equipped with solutions for network partitioning, a failure on a communication channel cannot be distinguished from an error on a server. This can cause data corruption brought by access from multiple servers to the same resource. EXPRESSCLUSTER, on the other hand, distinguishes a failure on a server from network partitioning when the heartbeat from a server is lost. If the lack of heartbeat is determined to be caused by the server failure, the system performs a failover by activating each resource and rebooting applications on a server running normally. When the lack of heartbeat is determined to be caused by network partitioning, emergency shutdown is executed because protecting data has higher priority over continuity of the operation. Network partitions can be resolved by the following methods:

COM method

Available in a two-node cluster

Cross cables are needed.

The COM channel is used to check if the other server is active and then to determine whether or not the problem is caused by network partitioning.

If a server failure occurs while there is a failure in the COM channel (such as the COM port or the serial cross cable), resolving the network partition fails, so a failover does not take place. Emergency shutdown takes place on all servers, including the server that is running normally.

If a failure occurs on all network channels while the COM channel is working properly, it is regarded as a network partition. In this case, emergency shutdown takes place on all servers except the master server.

If a failure occurs on all network channels while there is a problem in the COM channel (such as the COM port or the serial cross cable), emergency shutdown takes place on all servers except the master server.

If failures occur simultaneously on all network channels between the cluster servers and on the COM channel, both the active and standby servers fail over. This can cause data corruption due to access to the same resource from multiple servers.

PING method

A device that is always active to receive and respond to the ping command (hereafter described as ping device) is required.

More than one ping device can be specified.

When the heartbeat from the other server is lost, but the ping device is responding to the ping command, it is determined that the server without heartbeat has failed and a failover takes place. If there is no response to the ping command, it is determined that the local server is isolated from the network due to network partitioning, and emergency shutdown takes place. This allows a server that can communicate with clients to continue operation even if network partitioning occurs.

If the ping device fails and no server receives a response to the ping command before the heartbeat is lost, the network partition cannot be resolved. If the heartbeat is lost in this state, a failover takes place on all servers. Because of this, using this method in a cluster with a shared disk can cause data corruption due to access to a resource from multiple servers.
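The PING method decision described above can be summarized as a small function evaluated on each server. This is a sketch of the described behavior only, not product code; the names `Action` and `ping_method_action` and the parameter names are hypothetical.

```python
from enum import Enum


class Action(Enum):
    NO_ACTION = "no action"
    FAIL_OVER = "fail over"
    EMERGENCY_SHUTDOWN = "emergency shutdown"


def ping_method_action(
    heartbeat_lost: bool,
    ping_device_responds: bool,
    ping_failed_before_heartbeat_loss: bool = False,
) -> Action:
    """Decide what the local server does under the PING method."""
    if not heartbeat_lost:
        return Action.NO_ACTION
    if ping_failed_before_heartbeat_loss:
        # The ping device was already failing on all servers, so the partition
        # cannot be resolved and a failover takes place on all servers.
        return Action.FAIL_OVER
    if ping_device_responds:
        # The other server is judged to have failed.
        return Action.FAIL_OVER
    # The local server is judged to be isolated from the network.
    return Action.EMERGENCY_SHUTDOWN
```

The `ping_failed_before_heartbeat_loss` branch is the case that makes this method risky with a shared disk, because every server fails over.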

HTTP method

A Web server that is always active is required.

When the heartbeat from the other server is lost, but there is a response to an HTTP HEAD request, it is determined that the server without heartbeat has failed and a failover takes place. If there is no response to an HTTP HEAD request, it is determined that the local server is isolated from the network due to network partitioning, and an emergency shutdown takes place. This will allow a server that can communicate with clients to continue operation even if network partitioning occurs.

If the Web server fails and HTTP HEAD requests receive no response before the heartbeat is lost, the network partition cannot be resolved. If the heartbeat is lost in this state, emergency shutdown takes place on all servers.

DISK method

Available to a cluster that uses a shared disk.

A dedicated disk partition (disk heartbeat partition) is required on the shared disk.

Network partitioning is determined by writing data periodically on a shared disk and calculating the last existing time of the other server.

If the heartbeat from the other server is lost while there is a failure in the shared disk or in the channel to the shared disk (such as the SCSI bus), resolving the network partition fails, which means that failover does not take place. In this case, emergency shutdown takes place on the servers that are working properly.

If failures occur on all network channels while the shared disk is working properly, a network partition is detected. Failover then takes place on the master server and on any server that can communicate with the master server. Emergency shutdown takes place on the remaining servers.

Compared to the other methods, the DISK method needs more time to resolve a network partition because the delay of disk I/O must be taken into account. The time is about twice as long as the heartbeat timeout and the disk I/O wait time.

If the I/O time to the shared disk is longer than the disk I/O wait time, network partition resolution may time out, and failover may not take place.

Note

The DISK method cannot be used if VERITAS Storage Foundation is used.

COM + DISK method

This is a method that combines the COM method and the DISK method. This method is available in a cluster that uses a shared disk with two nodes.

This method requires serial cross cables. A dedicated disk partition (disk heartbeat partition) must be allocated on the shared disk.

When the COM channel (such as a COM port and serial cross cable) is working properly, this method works in the same way as the COM method. When an error occurs on the COM channel, this method switches to the DISK method. This mechanism offers higher availability than the COM method. The method also resolves network partitions faster than the DISK method.

Even if failures occur on all network channels between cluster servers and the COM channel simultaneously, emergency shutdown takes place at least on one of the servers. This will prevent data corruption.

PING + DISK method

This method combines the PING method and the DISK method.

This method requires a device (a ping device) that can always receive the ping command and return response. You can specify more than one ping device. This method also requires the dedicated disk partition (disk heartbeat partition) on the shared disk.

This method usually works in the same way as the PING method. However, if the ping device fails and no server receives a response to the ping command before the heartbeat is lost, the method switches to the DISK method. If the servers using the NP resolution resources of the PING method and those using the NP resolution resources of the DISK method do not match (for example, when the PING method resources are used by all servers, but the DISK method resources are used only by some servers connected to a shared disk), the two types of resources work independently. Therefore, the DISK method works as well, regardless of the state of the ping device.

If the heartbeat from the other server is lost while there is a failure in the shared disk and/or a path to the shared disk, emergency shutdown takes place even if there is response to the ping command.

Majority method

This method can be used in a cluster with three or more nodes.

This method prevents data corruption caused by the Split Brain syndrome by shutting down a server that can no longer communicate with the majority of the servers in the entire cluster because of network failure. When communication with exactly half of the servers in the entire cluster is failing, emergency shutdown takes place in a server that cannot communicate with the master server.

When more than half of the servers are down, the remaining servers that are running properly also go down.

If all servers are isolated due to a hub error, all servers go down.
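The Majority method rules above can be sketched as a survival check run on each server. The function name `survives_majority_check` and its parameters are hypothetical, and the sketch assumes that `reachable_servers` counts the local server itself.

```python
def survives_majority_check(
    total_servers: int, reachable_servers: int, master_reachable: bool
) -> bool:
    """Return True if the local server keeps running, False if it shuts down.

    reachable_servers includes the local server (an assumption of this sketch).
    """
    if total_servers < 3:
        raise ValueError("the Majority method requires three or more nodes")
    if not 1 <= reachable_servers <= total_servers:
        raise ValueError("reachable_servers must be between 1 and total_servers")
    if 2 * reachable_servers > total_servers:
        # The server can still communicate with the majority of the cluster.
        return True
    if 2 * reachable_servers == total_servers:
        # Exactly half: only the side that can reach the master server survives.
        return master_reachable
    # Fewer than half reachable, including the case where every server is isolated.
    return False
```

For example, in a four-node cluster split two and two, only the pair that contains the master server keeps running.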

Not solving the network partition

This method can be selected in a cluster that does not use any disk resource (a shared disk).

If a failure occurs on all network channels between servers in a cluster, all servers fail over.

The following are the recommended methods to resolve the network partition:

The PING + DISK method is recommended for a cluster that uses a shared disk with three or more nodes. When using the hybrid type, use the PING + DISK method for the servers connected to the shared disk, and use only the PING method for the servers not connected to the shared disk.

The PING method is recommended for a cluster with three or more nodes but without a shared disk.

The COM + DISK method or the PING + DISK method is recommended for a cluster that uses a shared disk with two nodes.

The COM method or the PING method is recommended for a cluster with two nodes but without a shared disk.

The HTTP method is recommended for a cluster that uses the Witness heartbeat resource but does not use a shared disk.
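The recommendations above can be collected into a lookup function. This is a sketch only: the function name `recommended_np_methods` is hypothetical, and giving the Witness heartbeat rule precedence over the node-count rules is an assumption, because the text does not state an order.

```python
def recommended_np_methods(
    node_count: int, uses_shared_disk: bool, uses_witness_heartbeat: bool = False
) -> list[str]:
    """Return the recommended network partition resolution methods."""
    if node_count < 2:
        raise ValueError("a cluster needs at least two nodes")
    if uses_witness_heartbeat and not uses_shared_disk:
        # Assumption: this rule is checked first.
        return ["HTTP"]
    if node_count >= 3:
        return ["PING + DISK"] if uses_shared_disk else ["PING"]
    # Two-node clusters.
    if uses_shared_disk:
        return ["COM + DISK", "PING + DISK"]
    return ["COM", "PING"]
```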

| Method to resolve a network partition | Number of nodes | Required hardware | Circumstance where failover cannot be performed | When all network channels are disconnected | Circumstance where both servers fail over | Time required to resolve network partition |
|---|---|---|---|---|---|---|
| COM | 2 | Serial cable | COM error | The master server survives | COM error and network disconnection occur simultaneously | 0 |
| DISK | No limit | Shared disk | Disk error | The master server survives | None | Time calculated from the heartbeat timeout and disk I/O wait time is needed |
| PING | No limit | Device to receive the ping command and return a response | None | Server that responds to the ping command survives | All networks are disconnected after the ping command times out the specified number of times consecutively | 0 |
| HTTP | No limit | Web server | Web server failure | A server that can communicate with the Web server survives | None | 0 |
| COM + DISK | 2 | Serial cable, shared disk | COM error and disk error | The master server survives | None | 0 |
| PING + DISK | No limit | Device to receive the ping command and return a response, shared disk | None | Server responding to the ping command survives | None | 0 |
| Majority | 3 or more | None | Majority of servers go down | A server that can communicate with the majority of servers survives | None | 0 |
| None | No limit | None | None | All servers fail over | All networks are disconnected | 0 |

4. Installing EXPRESSCLUSTER¶

This chapter provides instructions for installing EXPRESSCLUSTER.

4.1. Steps from Installing EXPRESSCLUSTER to creating a cluster¶

The following describes the steps from installing EXPRESSCLUSTER, license registration, cluster system creation, to verifying the cluster system status.

Before proceeding to the following steps, make sure to read "2. Determining a system configuration" and "3. Configuring a cluster system" and check system requirements and the configuration of a cluster.

Install the EXPRESSCLUSTER Server

Install the EXPRESSCLUSTER Server, which is the core EXPRESSCLUSTER module, to each server that constitutes a cluster. When installing the Server, a license registration is performed as well. (See "4. Installing EXPRESSCLUSTER.")

Reboot the server

Create the cluster configuration data using Cluster WebUI

Create the cluster configuration data by using the Cluster WebUI. (See "6. Creating the cluster configuration data.")

Create a cluster

Create a cluster by applying the cluster configuration data created with the Cluster WebUI. (See "6. Creating the cluster configuration data.")

Verify the cluster status using the Cluster WebUI

Verify the status of a cluster that you have created using the Cluster WebUI. (See "7. Verifying a cluster system.")

See also

Refer to the "Reference Guide" as needed while following the steps in this guide. For the latest information on the system requirements and release information, refer to "Installation requirements for EXPRESSCLUSTER" and "Latest version information" in the "Getting Started Guide".

4.2. Installing the EXPRESSCLUSTER Server¶

The EXPRESSCLUSTER Server consists of the following system services:

| Service Display Name | Service Name | Description | Startup Type | Service Status (usual) |
|---|---|---|---|---|
| EXPRESSCLUSTER | clpstartup | EXPRESSCLUSTER | Automatic | Running |
| EXPRESSCLUSTER API | clprstd | Control of the EXPRESSCLUSTER RESTful API | Automatic | Stopped |
| EXPRESSCLUSTER Disk Agent | clpdiskagent | Shared disk, mirror disk, hybrid disk control | Manual | Running |
| EXPRESSCLUSTER Event | clpevent | Event log output | Automatic | Running |
| EXPRESSCLUSTER Information Base | clpibsv | Cluster information management | Automatic | Running |
| EXPRESSCLUSTER Java Resource Agent | clpjra | Java Resource Agent | Manual | Stopped |
| EXPRESSCLUSTER Manager | clpwebmgr | WebManager Server | Automatic | Running |
| EXPRESSCLUSTER Old API Support | clpoldapi | Compatible API process | Automatic | Running |
| EXPRESSCLUSTER Server | clppm | EXPRESSCLUSTER Server | Automatic | Running |
| EXPRESSCLUSTER System Resource Agent | clpsra | System Resource Agent | Manual | Stopped |
| EXPRESSCLUSTER Transaction | clptrnsv | Communication process | Automatic | Running |
| EXPRESSCLUSTER Web Alert | clpwebalt | Alert synchronization | Automatic | Running |

Note

The status of EXPRESSCLUSTER Java Resource Agent will be "Running" when JVM monitor resource is set.

Note

The status of EXPRESSCLUSTER System Resource Agent will be "Running" when the system monitor resource or the process resource monitor resource is set, or when Collect the System Resource Information is checked on the Monitor tab in Cluster Properties.
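The usual service statuses listed above can be used as a checklist after installation. The following sketch compares observed statuses with the table and allows for the two agents that the notes say may also be running. How the observed statuses are collected is outside the sketch, and the names `USUAL_STATUS`, `MAY_ALSO_RUN`, and `unexpected_statuses` are hypothetical.

```python
# Usual status per service name, copied from the table in this section.
USUAL_STATUS = {
    "clpstartup": "Running",
    "clprstd": "Stopped",
    "clpdiskagent": "Running",
    "clpevent": "Running",
    "clpibsv": "Running",
    "clpjra": "Stopped",
    "clpwebmgr": "Running",
    "clpoldapi": "Running",
    "clppm": "Running",
    "clpsra": "Stopped",
    "clptrnsv": "Running",
    "clpwebalt": "Running",
}

# Agents that run when the corresponding monitor resources are configured.
MAY_ALSO_RUN = {"clpjra", "clpsra"}


def unexpected_statuses(observed: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Return {service: (usual, observed)} for services that differ from the table."""
    differences = {}
    for name, usual in USUAL_STATUS.items():
        actual = observed.get(name, "Missing")
        if actual == usual:
            continue
        if name in MAY_ALSO_RUN and actual == "Running":
            continue
        differences[name] = (usual, actual)
    return differences
```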

4.2.1. Installing the EXPRESSCLUSTER Server for the first time¶

Install the EXPRESSCLUSTER X on all servers that constitute the cluster by following the procedures below.

Important

When a shared disk is used, make sure not to start more than one OS on servers connected to the shared disk before installing EXPRESSCLUSTER. Data on the shared disk may be corrupted.

Note

Install the EXPRESSCLUSTER Server using an Administrator account.

Note

When the EXPRESSCLUSTER Server is installed, the Windows media sense function, which deactivates an IP address when a link-down occurs due to cable disconnection, is disabled.

Note

Insert the installation CD-ROM into the CD-ROM drive.

After the menu window is displayed, select EXPRESSCLUSTER for Windows.

Note

If the menu window does not open automatically, double-click the menu.exe in the root folder of the CD-ROM.

Select EXPRESSCLUSTER X 4.3 for Windows.

The NEC EXPRESSCLUSTER Setup window is displayed. Click Next.

The Choose Destination Location dialog box is displayed. When changing the install destination, click Browse to select a directory.

In the Ready to Install the Program window, click Install to start installing.

After the installation is completed, click Next without changing the default value in Port Number.

Note

The port number configured here needs to be configured again when creating the cluster configuration data. For details on port number, refer to "Parameter details" in the "Reference Guide".

In Filter Settings of Shared Disk, right-click SCSI controller or HBA connected to a shared disk, and click Filtering. Click Next.

Important

When a shared disk is used, configure filtering settings for the SCSI controller or HBA to be connected to the shared disk. If the shared disk is connected without configuring filtering settings, data on the shared disk may be corrupted. When the disk path is duplicated, you must configure the filter for all the HBAs physically connected to the shared disk, even though the shared disk may appear to be connected to only one HBA.

Important

When using mirror disk resources, do not configure filtering settings for the SCSI controller/HBA to which an internal disk used as the mirroring target is connected. If the filter is enabled for mirror disk resources, starting the mirror disk resources fails. However, filtering settings are essential when shared disks are used for mirroring.

The window that shows the completion of setting is displayed. Click Yes.

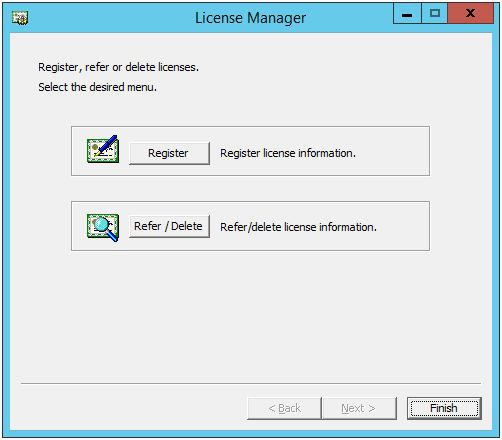

License Manager is displayed. Click Register to register the license. For detailed information on the registration procedure, refer to "5. Registering the license" in this guide.

Click Finish to close the License Manager dialog box.

The Complete InstallShield Wizard dialog box is displayed. Select Restarting and click Finish. The server will be rebooted.

Note

When a shared disk is used, it cannot be accessed due to access restriction after OS reboot.

4.2.2. Installing the EXPRESSCLUSTER Server in Silent Mode¶

Note

Installation in silent mode is not available for a shared disk configuration. For a shared disk configuration, install the EXPRESSCLUSTER Server by referring to "Installing the EXPRESSCLUSTER Server for the first time."

Note

Install the EXPRESSCLUSTER Server using an Administrator account.

Note

When the EXPRESSCLUSTER Server is installed, the Windows media sense function, which deactivates an IP address when a link-down occurs due to cable disconnection, is disabled.

Note

Preparation

If you want to change the installation folder (default: C:\Program Files\EXPRESSCLUSTER), create a response file in advance following the procedure below.

Windows\4.3\common\server\x64\response\setup_inst_en.iss

Open the response file (setup_inst_en.iss) with a text editor, then change the folder written in the szDir line.

Count=4
Dlg1={8493CDB6-144B-4330-B945-1F2123FADD3A}-SdAskDestPath-0
Dlg2={8493CDB6-144B-4330-B945-1F2123FADD3A}-SdStartCopy2-0
Dlg3={8493CDB6-144B-4330-B945-1F2123FADD3A}-SdFinishReboot-0
[{8493CDB6-144B-4330-B945-1F2123FADD3A}-SdWelcome-0]
Result=1
[{8493CDB6-144B-4330-B945-1F2123FADD3A}-SdAskDestPath-0]
szDir=C:\Program Files\CLUSTERPRO
Result=1
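If you prepare response files for several servers, the szDir edit can be scripted instead of done by hand. The following is a minimal sketch that works on the text of a response file. The function name `set_install_dir` is hypothetical, and reading and writing the file (including its encoding) is left to the caller.

```python
def set_install_dir(response_text: str, install_dir: str) -> str:
    """Return the response file text with every szDir line pointing to install_dir."""
    updated_lines = []
    replaced = False
    for line in response_text.splitlines():
        if line.strip().startswith("szDir="):
            updated_lines.append(f"szDir={install_dir}")
            replaced = True
        else:
            updated_lines.append(line)
    if not replaced:
        raise ValueError("no szDir line found in the response file")
    return "\n".join(updated_lines) + "\n"


# Example with a shortened response file body.
sample = "Result=1\nszDir=C:\\Program Files\\CLUSTERPRO\nResult=1\n"
updated = set_install_dir(sample, r"D:\EXPRESSCLUSTER")
```

Only the szDir line changes; all other lines are passed through untouched.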

Installation procedure

Windows\4.3\common\server\x64\silent-install.bat in the installation CD-ROM.

* When installing the EXPRESSCLUSTER Server in the default directory (C:\Program Files\EXPRESSCLUSTER), omit <Path of response file>.

Restart the server.

4.2.3. Upgrading EXPRESSCLUSTER Server from the previous version¶

Before starting the upgrade, read the following notes.

You can upgrade from EXPRESSCLUSTER X 1.0, 2.0, 2.1, 3.0, 3.1, 3.2 or 3.3 to EXPRESSCLUSTER X 4.3.

You need the CD-ROM that contains the setup files, as well as the software licenses, for EXPRESSCLUSTER X 4.3.

You cannot use cluster configuration data that was created with a version of EXPRESSCLUSTER X later than the version in use.

Cluster configuration data that was created with EXPRESSCLUSTER X 1.0, 2.0, 2.1, 3.0, 3.1, 3.2, 3.3, 4.0, 4.1, 4.2 or 4.3 for Windows can be used with the version of EXPRESSCLUSTER X in use.

If mirror disk resources or hybrid disk resources are set, cluster partitions require 1 GB or more of space. In addition, a full copy of the mirror disk resources or hybrid disk resources is required.

If mirror disk resources or hybrid disk resources are set, it is recommended to back up the data in advance. For details of a backup procedure, refer to "Performing a snapshot backup" in "The system maintenance information" in the "Maintenance Guide".

The EXPRESSCLUSTER Server must be upgraded with an account that has Administrator privileges.

See also

For the update from X 4.0/4.1/4.2 to X 4.3, see "Update Procedure Manual".
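Which document applies depends on the installed version. A small lookup such as the following can make that choice explicit in a pre-upgrade checklist script. The names are hypothetical, and the version strings are the ones listed in this section.

```python
# Versions that use the uninstall-and-reinstall procedure in this section.
UPGRADE_BY_REINSTALL = {"1.0", "2.0", "2.1", "3.0", "3.1", "3.2", "3.3"}
# Versions that are covered by the separate Update Procedure Manual.
UPDATE_PROCEDURE_MANUAL = {"4.0", "4.1", "4.2"}


def upgrade_route(installed_version: str) -> str:
    """Return which document describes the move to X 4.3 for the installed version."""
    if installed_version in UPGRADE_BY_REINSTALL:
        return "upgrade procedure in this section"
    if installed_version in UPDATE_PROCEDURE_MANUAL:
        return "Update Procedure Manual"
    raise ValueError(f"no route to X 4.3 is described for version {installed_version}")
```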

The following procedures explain how to upgrade from EXPRESSCLUSTER X 1.0, 2.0, 2.1, 3.0, 3.1, 3.2 or 3.3 to EXPRESSCLUSTER X 4.3.

1. Before upgrading, confirm that the servers in the cluster and all the resources are in normal status by using WebManager or the command.

2. Save the current cluster configuration file with the Builder or the clpcfctrl command. For details about saving the cluster configuration file with the clpcfctrl command, refer to "Backing up the cluster configuration data (clpcfctrl --pull)" of "Creating a cluster and backing up configuration data (clpcfctrl command)" in "EXPRESSCLUSTER command reference" in the "Reference Guide".

3. When the EXPRESSCLUSTER Server service of the target server is configured as Auto Startup, change the setting to Manual Startup.

4. Shut down the entire cluster.

5. Start only one server, and uninstall the EXPRESSCLUSTER Server. For details about uninstalling the EXPRESSCLUSTER Server, refer to "10.1.1. Uninstalling the EXPRESSCLUSTER Server" in "10. Uninstalling and reinstalling EXPRESSCLUSTER" in this guide.

6. Install EXPRESSCLUSTER X 4.3 on the server from which the old version of the EXPRESSCLUSTER Server was uninstalled in step 5, and then register the license as necessary. For details about how to install the EXPRESSCLUSTER Server, refer to "4.2. Installing the EXPRESSCLUSTER Server" in "4. Installing EXPRESSCLUSTER" in this guide.

7. Shut down the server on which EXPRESSCLUSTER X 4.3 was installed in step 6.

8. Perform steps 5 to 7 on each server.

9. Start all the servers.

10. If mirror disk resources or hybrid disk resources are set, allocate a cluster partition (the cluster partition should be 1 GB or larger).

11. Access the URL below to start the WebManager.

http://actual IP address of an installed server:29003/main.htm

Import the cluster configuration file that was saved in step 2.

If the drive letter of the cluster partition is different from the configuration, modify the configuration.

For the groups to which mirror disk resources or hybrid disk resources belong, if Startup Attribute is Auto Startup on the Attribute tab of Group Properties, change it to Manual Startup.

To keep using the values of Maximum Failover Count that were set before upgrading EXPRESSCLUSTER, go to Cluster Properties -> Extension tab and change Failover Count Method from Server to Cluster.

12. Upload the cluster configuration data with the Cluster WebUI.

When the message "There is difference between the disk information in the configuration information and the disk information in the server. Are you sure you want automatic modification?" appears, select Yes.

If the fixed-term license is used, run the following command.

clplcnsc --distribute

13. Start the cluster on Cluster WebUI.

14. If mirror disk resources or hybrid disk resources are set, from the mirror disk list, execute a full copy assuming that the server with the latest data is the copy source.

15. Start the group and confirm that each resource starts normally.

16. If Startup Attribute was changed from Auto Startup to Manual Startup in step 11, use the config mode of Cluster WebUI to change this to Auto Startup. Then, click Apply the Configuration File to apply the cluster configuration data to the cluster.

This completes the procedure for upgrading the EXPRESSCLUSTER Server. Check that the servers are operating normally as a cluster by using the clpstat command or Cluster WebUI.

4.2.4. Setting up the SNMP linkage function manually¶

Note

If you are using only the SNMP trap transmission function, you do not need to perform this procedure.

When the Windows SNMP Service has not been installed, follow the procedure below to manually register the SNMP linkage function.

Note

Use an Administrator account to perform the registration.

Install the Windows SNMP Service.

Stop the Windows SNMP Service.

- Register the SNMP linkage function of EXPRESSCLUSTER with the Windows SNMP Service.

3-1. Start the registry editor.

3-2. Open the following key:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\SNMP\Parameters\ExtensionAgents

3-3. Specify the following to create a string value in the opened key:

Value name : mgtmib

Value type : REG_SZ

Value data : SOFTWARE\NEC\EXPRESSCLUSTER\SnmpAgent\mgtmib\CurrentVersion

3-4. Exit the registry editor.

Start the Windows SNMP Service.

Note

Specify the settings required for SNMP communication on the Windows SNMP Service.
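The registry value from the procedure above can also be written out as a .reg file for review, for example when the same registration has to be repeated on several servers. The following sketch only generates the file text and does not modify the registry. Importing a .reg file instead of editing the key by hand is an assumption and not a procedure from this guide, and the function name `build_reg_file` is hypothetical.

```python
EXTENSION_AGENTS_KEY = (
    r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\SNMP"
    r"\Parameters\ExtensionAgents"
)
VALUE_NAME = "mgtmib"
VALUE_DATA = r"SOFTWARE\NEC\EXPRESSCLUSTER\SnmpAgent\mgtmib\CurrentVersion"


def build_reg_file() -> str:
    """Build .reg file text that defines the mgtmib string (REG_SZ) value."""
    # Backslashes inside quoted string data are doubled in the .reg format.
    escaped_data = VALUE_DATA.replace("\\", "\\\\")
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{EXTENSION_AGENTS_KEY}]\r\n"
        f'"{VALUE_NAME}"="{escaped_data}"\r\n'
    )
```

As in the manual procedure, stop the Windows SNMP Service before changing the key and start it again afterward.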

5. Registering the license¶

To run EXPRESSCLUSTER as a cluster system, you need to register the license. This chapter describes how to register an EXPRESSCLUSTER license.

5.1. Registering the license¶

EXPRESSCLUSTER licenses can be registered during installation, and can also be added or deleted after installation.

5.1.1. Registering the CPU license¶

For the following CPU licenses of the EXPRESSCLUSTER, register the license to the master server of a cluster.

Main Products

EXPRESSCLUSTER X 4.3 for Windows

EXPRESSCLUSTER X SingleServerSafe 4.3 for Windows

EXPRESSCLUSTER X SingleServerSafe for Windows Upgrade

5.1.2. Registering the node license¶

For the following node licenses of the EXPRESSCLUSTER, register the license to each cluster server.

Main Products

EXPRESSCLUSTER X 4.3 for Windows VM

EXPRESSCLUSTER X SingleServerSafe 4.3 for Windows VM

EXPRESSCLUSTER X SingleServerSafe for Windows VM Upgrade

Optional Products

EXPRESSCLUSTER X Replicator 4.3 for Windows

EXPRESSCLUSTER X Replicator DR 4.3 for Windows

EXPRESSCLUSTER X Replicator DR 4.3 Upgrade for Windows

EXPRESSCLUSTER X Database Agent 4.3 for Windows

EXPRESSCLUSTER X Internet Server Agent 4.3 for Windows

EXPRESSCLUSTER X Application Server Agent 4.3 for Windows

EXPRESSCLUSTER X Java Resource Agent 4.3 for Windows

EXPRESSCLUSTER X System Resource Agent 4.3 for Windows

EXPRESSCLUSTER X Alert Service 4.3 for Windows

Note

If the licenses for optional products have not been installed, the resources and monitor resources corresponding to those licenses are not shown in the list on the Cluster WebUI.

There are two ways to register a license: entering the information on the license sheet, and specifying the license file.

Entering the license information attached to the license product to register the license. Refer to "5.1.4. Registering the license by entering the license information".

Specifying the license file to register the license. Refer to "5.1.5. Registering the license by specifying the license file".
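The difference between the two license types described in 5.1.1 and 5.1.2 (CPU licenses are registered on the master server, node licenses on each cluster server) can be summarized in a short planning helper. This is an illustrative sketch only: the function name, the "cpu" and "node" labels, and the server names are assumptions made for the example, not product identifiers.

```python
# Sketch: list the servers on which a license has to be registered.
# "cpu" and "node" are labels chosen for this example only.
from typing import List


def registration_targets(license_kind: str, servers: List[str], master: str) -> List[str]:
    """Return the servers that need a registration for the given license kind."""
    if master not in servers:
        raise ValueError("the master server must be one of the cluster servers")
    if license_kind == "cpu":
        # CPU license: register on the master server of the cluster.
        return [master]
    if license_kind == "node":
        # Node license: register on each cluster server.
        return list(servers)
    raise ValueError(f"unknown license kind: {license_kind!r}")


if __name__ == "__main__":
    cluster = ["server1", "server2"]  # hypothetical server names
    print(registration_targets("cpu", cluster, master="server1"))
    print(registration_targets("node", cluster, master="server1"))
```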

5.1.3. Notes on the CPU license¶

Notes on using the CPU license are as follows:

After registration of the CPU license on the master server, Cluster WebUI on the master server must be used in order to edit and reflect the cluster configuration data as described in "6. Creating the cluster configuration data".

5.1.4. Registering the license by entering the license information¶

Before you register the license, check that:

EXPRESSCLUSTER CPU license

You have the license sheet you officially obtained from the sales agent. The values on this license sheet are used for registration.

You have the administrator privileges to log in to the server intended to be used as the master server in the cluster.

EXPRESSCLUSTER node license

You have the license sheet you officially obtained from the sales agent. The number of license sheets you need is as many as the number of servers on which the product will be used. The values on this license sheet are used for registration.

You have the administrator privileges to log in to the server on which you intend to use the product.

On the Start menu, click License Manager of the EXPRESSCLUSTER Server.

In the License Manager dialog box, click Register.

In the window to select a license method, select Register with License Information.

In the Product selection dialog box, select the product category, and click Next.

In the License Key Entry dialog box, enter the serial number and license key of the license sheet. Click Next.

Confirm what you have entered in the License Registration Confirmation dialog box. Click Next.

Make sure that the pop-up message, "The license was registered." is displayed. If the license registration fails, start again from step 2.

5.1.5. Registering the license by specifying the license file¶

The following describes how to register the license by specifying the license file.

Before you register the license, check that:

EXPRESSCLUSTER CPU license

You have the administrator privileges to log in to the server intended to be used as the master server in the cluster.

The license file is located on the server intended to be used as the master server in the cluster.

EXPRESSCLUSTER node license

You have the administrator privileges to log in to the server on which you intend to use the product.

The license file is located on the server on which you intend to use the product, among the servers that constitute the cluster system.

On the Start menu, click License Manager of the EXPRESSCLUSTER Server.

In the License Manager dialog box, click Register.

In the window to select a license method, select Register with License File.

In the License File Specification dialog box, select the license file to be registered and then click Open.

The message confirming registration of the license is displayed. Click OK.

Click Finish to close the license manager.

5.2. Referring and/or deleting the license¶

5.2.1. How to refer to and/or delete the registered license¶

The following procedure describes how to refer to and delete the registered license.

On the Start menu, click License Manager of the EXPRESSCLUSTER Server.

In the License Manager dialog box, click Refer / Delete.

The registered licenses are listed.

Select the license to delete and click Delete.

The confirmation message to delete the license is displayed. Click OK.

5.3. Registering the fixed term license¶

The fixed term license applies to the EXPRESSCLUSTER X 4.3 for Windows and optional products as shown below. Among servers that constitute the cluster, use the master server to register the fixed term license.

Main Products

EXPRESSCLUSTER X 4.3 for Windows

Optional Products

EXPRESSCLUSTER X Replicator 4.3 for Windows

EXPRESSCLUSTER X Replicator DR 4.3 for Windows

EXPRESSCLUSTER X Database Agent 4.3 for Windows

EXPRESSCLUSTER X Internet Server Agent 4.3 for Windows

EXPRESSCLUSTER X Application Server Agent 4.3 for Windows

EXPRESSCLUSTER X Java Resource Agent 4.3 for Windows

EXPRESSCLUSTER X System Resource Agent 4.3 for Windows

EXPRESSCLUSTER X Alert Service 4.3 for Windows

Note

If the licenses for optional products have not been installed, the resources and monitor resources corresponding to those licenses are not shown in the list on the Cluster WebUI.

5.3.1. Notes on the fixed term license¶

Notes on using the fixed term license are as follows:

The fixed term license cannot be registered on several of the servers constituting the cluster in order to operate them.

After registration of the license on the master server, Cluster WebUI on the master server must be used in order to edit and reflect the cluster configuration data as described in "6. Creating the cluster configuration data".

The number of fixed term licenses must be larger than the number of servers constituting the cluster.

After the cluster has started operating, additional fixed term licenses must be registered on the master server.

Once enabled, a fixed term license cannot be re-registered after license removal, server removal, or server replacement, even if it is still within its validity period.

5.3.2. Registering the fixed term license by specifying the license file¶

Before you register the license, check that:

You have the administrator privileges to log in to the server intended to be used as the master server in the cluster.

The license files for all the products you intend to use are stored on the server that will be set as the master server among the servers that constitute the cluster system.

Follow the steps below to register all the license files for the products to be used. If you have two or more license files for the same product in preparation for expiration, register the extra license files by following the same steps.

On the Start menu, click License Manager of the EXPRESSCLUSTER Server.

In the License Manager dialog box, click Register.

In the window to select a license method, select Register with License File.

In the License File Specification dialog box, select the license file to be registered and then click Open.

The message confirming registration of the license is displayed. Click OK.

Click Finish to close the license manager.

5.4. Referring and/or deleting the fixed term license¶

5.4.1. How to refer to and/or delete the registered fixed term license¶

The procedure for referring and/or deleting the registered fixed term license is the same as that described in "5.2.1. How to refer to and/or delete the registered license".

6. Creating the cluster configuration data¶

In EXPRESSCLUSTER, data that contains information on how a cluster system is configured is called "cluster configuration data." This data is created using the Cluster WebUI. This chapter describes how to start the Cluster WebUI and how to create the cluster configuration data with it, using a sample cluster configuration.

This chapter covers:

6.1. Creating the cluster configuration data¶

The cluster configuration data is created with the config mode of Cluster WebUI, the function for creating and modifying cluster configuration data.

Start the Cluster WebUI from the management PC and create the cluster configuration data. The Cluster WebUI then applies the cluster configuration data to the cluster system.

6.2. Starting up the Cluster WebUI¶

Access to the Cluster WebUI is required to create cluster configuration data. This section gives an overview of the Cluster WebUI and describes how to start it in order to create cluster configuration data.

See also

For the system requirements of the Cluster WebUI, refer to "Installation requirements for EXPRESSCLUSTER" in the Getting Started Guide.

6.2.1. What is Cluster WebUI?¶

The Cluster WebUI is a function for setting up the cluster, monitoring its status, starting up or stopping servers and groups, and collecting cluster operation logs through a Web browser. The overview of the Cluster WebUI is shown in the following figures.

[Figure labels: EXPRESSCLUSTER Server (Main module), Cluster WebUI]

Fig. 6.1 Cluster WebUI¶

This figure shows two servers with EXPRESSCLUSTER installed. You can display the Cluster WebUI screen, by using a Web browser on the Management PC to access one of the servers. For this access, specify the management group's floating IP (FIP) address or virtual IP (VIP) address.

When connecting from a Web browser on the management PC, specify the floating IP address or virtual IP address for accessing the Cluster WebUI in the URL. These addresses are registered as resources of the management group. When the management group does not exist, you can specify the address of one of the servers configuring the cluster (the fixed address allocated to the server) to connect the management PC to that server. In this case, the Cluster WebUI cannot acquire the status of the cluster if the server to be connected is not working.
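The address selection described above can be sketched as a small helper: use the management group's floating or virtual IP address when one is registered, and otherwise use the fixed address of one of the servers. The function name and the sample addresses are hypothetical and serve only as an illustration.

```python
# Sketch: pick the address to put in the Cluster WebUI URL.
# management_ip stands for the management group's FIP or VIP, if any.
from typing import Optional, Sequence


def choose_connect_address(management_ip: Optional[str], server_ips: Sequence[str]) -> str:
    """Prefer the management group address; otherwise use a server's fixed address."""
    if management_ip:
        return management_ip
    if not server_ips:
        raise ValueError("no management group address and no server address given")
    # Without a management group, any one server can be used. The first one is
    # taken here; the cluster status is unavailable if that server is down.
    return server_ips[0]


if __name__ == "__main__":
    print(choose_connect_address("10.0.0.100", ["10.0.0.1", "10.0.0.2"]))
    print(choose_connect_address(None, ["10.0.0.1", "10.0.0.2"]))
```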

6.2.2. Browsers supported by the Cluster WebUI¶

For information about evaluated Web browsers, refer to the "Getting Started Guide".

6.2.3. Starting the Cluster WebUI¶

The following describes how to start the Cluster WebUI.

Start your Web browser.

Enter the actual IP address and port number of the server where the EXPRESSCLUSTER Server is installed in the Address bar of the browser.

http://ip-address:port/

- ip-address

Specify the actual IP address of the first server in the cluster, because no management group exists just after the installation.

- port

Specify the same port number as that of WebManager specified during the installation (default: 29003).

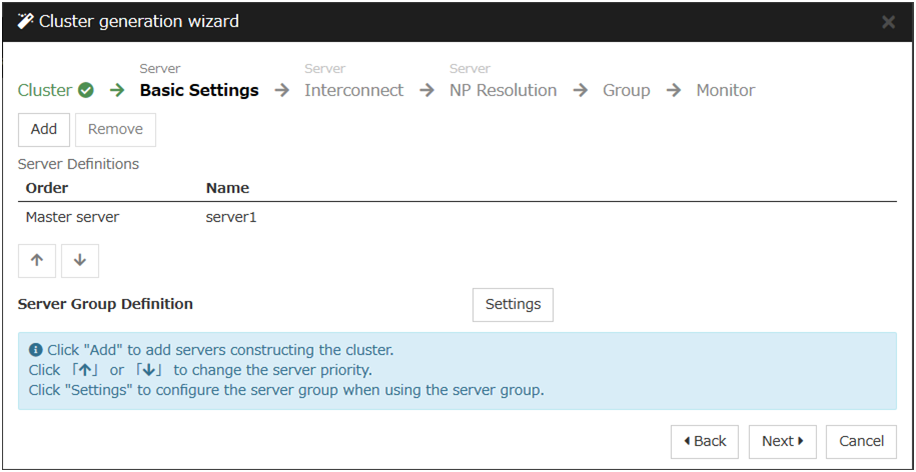

The Cluster WebUI starts. To create the cluster configuration data, select Config Mode from the drop-down menu of the toolbar.

Click Cluster generation wizard to start the wizard.

See also

https://ip-address:29003/
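The URL forms shown in this section (http://ip-address:port/ and the https form above) can be assembled with a short helper, which may be convenient when several servers have to be checked. This is a sketch under assumptions: the function name and the encrypted parameter are made up for the example, only the default port 29003 comes from this section, and the bracket handling for IPv6 follows general URL syntax rather than anything stated in this guide.

```python
# Sketch: build the Cluster WebUI URL from an IP address and a port number.
import ipaddress

DEFAULT_WEBUI_PORT = 29003  # default port stated in this section


def build_webui_url(ip_address: str, port: int = DEFAULT_WEBUI_PORT,
                    encrypted: bool = False) -> str:
    """Return http(s)://ip-address:port/ after basic validation."""
    addr = ipaddress.ip_address(ip_address)  # raises ValueError for a bad address
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    # General URL syntax puts IPv6 literals in brackets; IPv6 support by the
    # Cluster WebUI itself is not covered in this section.
    host = f"[{addr}]" if addr.version == 6 else str(addr)
    scheme = "https" if encrypted else "http"
    return f"{scheme}://{host}:{port}/"


if __name__ == "__main__":
    print(build_webui_url("192.168.0.1"))
    print(build_webui_url("192.168.0.1", encrypted=True))
```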

6.3. Checking the values to be configured¶

Before you create the cluster configuration data using Cluster generation wizard, check the values you are going to enter. Write down the values to confirm that your cluster is efficiently configured and that no information is missing.

6.3.1. Sample cluster environment¶