1. Preface

1.1. Who Should Use This Guide

The EXPRESSCLUSTER X Getting Started Guide is intended for first-time users of EXPRESSCLUSTER. The guide covers topics such as a product overview of EXPRESSCLUSTER, how the cluster system is installed, and a summary of the other available guides. It also describes the latest system requirements and restrictions.

1.2. How This Guide is Organized

2. What is a cluster system?: Helps you to understand the overview of the cluster system.

3. Using EXPRESSCLUSTER: Provides instructions on how to use EXPRESSCLUSTER and other related information.

4. Installation requirements for EXPRESSCLUSTER: Provides the latest information that needs to be verified before starting to use EXPRESSCLUSTER.

5. Latest version information: Provides information on the latest version of EXPRESSCLUSTER.

6. Notes and Restrictions: Provides information on known problems and restrictions.

1.3. EXPRESSCLUSTER X Documentation Set

The EXPRESSCLUSTER X manuals consist of the following six guides. The title and purpose of each guide are described below:

Getting Started Guide

This guide is intended for all users. The guide covers topics such as product overview, system requirements, and known problems.

Installation and Configuration Guide

This guide is intended for system engineers and administrators who want to build, operate, and maintain a cluster system. Instructions for designing, installing, and configuring a cluster system with EXPRESSCLUSTER are covered in this guide.

Reference Guide

This guide is intended for system administrators. The guide covers topics such as how to operate EXPRESSCLUSTER, the functions of each module, and troubleshooting. The guide is a supplement to the "Installation and Configuration Guide".

Maintenance Guide

This guide is intended for administrators and for system administrators who want to build, operate, and maintain EXPRESSCLUSTER-based cluster systems. The guide describes maintenance-related topics for EXPRESSCLUSTER.

Hardware Feature Guide

This guide is intended for administrators and for system engineers who want to build EXPRESSCLUSTER-based cluster systems. The guide describes features to work with specific hardware, serving as a supplement to the "Installation and Configuration Guide".

Legacy Feature Guide

This guide is intended for administrators and for system engineers who want to build EXPRESSCLUSTER-based cluster systems. The guide describes EXPRESSCLUSTER X 4.0 WebManager, Builder, and EXPRESSCLUSTER Ver 8.0 compatible commands.

1.4. Conventions

In this guide, Note, Important, and See also are used as follows:

Note

Used when the information given is important, but not related to data loss or damage to the system and machine.

Important

Used when the information given is necessary to avoid data loss and damage to the system and machine.

See also

Used to indicate where further information on the topic can be found.

The following conventions are used in this guide.

| Convention | Usage | Example |
|---|---|---|
| Bold | Indicates graphical objects, such as fields, list boxes, menu selections, buttons, labels, icons, etc. | In User Name, type your name. On the File menu, click Open Database. |
| Angled bracket within the command line | Indicates that the value specified inside of the angled bracket can be omitted. | |
| Monospace | Indicates path names, commands, system output (message, prompt, etc.), directory, file names, functions and parameters. | |
| bold | Indicates the value that a user actually enters from a command line. | Enter the following: clpcl -s -a |
| italic | Indicates that users should replace the italicized part with values that they are actually working with. | |

In the figures of this guide, this icon represents EXPRESSCLUSTER.

1.5. Contacting NEC

For the latest product information, visit our website below:

2. What is a cluster system?

This chapter gives an overview of the cluster system.

2.1. Overview of the cluster system

A key to success in today's computerized world is to provide services without interruption. A single machine going down due to a failure or overload can stop all the services you provide to your customers. This can result not only in enormous damage but also in a loss of the credibility you have built up.

Introducing a cluster system allows you to minimize the period during which your system is stopped (downtime) or to improve availability through load distribution.

As the word "cluster" suggests, a cluster system aims to increase reliability and performance by grouping multiple computers together. Cluster systems can be classified into the three types listed below. EXPRESSCLUSTER is categorized as a high availability cluster.

- High Availability (HA) Cluster: In this cluster configuration, one server operates as the active server. When the active server fails, a standby server takes over the operation. This cluster configuration aims for high availability. The HA cluster is available in the shared disk type and the mirror disk type.

- Load Distribution Cluster: In this cluster configuration, requests from clients are allocated to the nodes according to appropriate load distribution rules. This cluster configuration aims for high scalability. Generally, data cannot be passed between nodes. The load distribution cluster is available in the load balance type and the parallel database type.

- High Performance Computing (HPC) Cluster: In this cluster configuration, a single operation involving a huge amount of computation, of the kind normally run on a supercomputer, is performed. The CPUs of all nodes are used to perform that single operation.

2.2. High Availability (HA) cluster

To enhance the availability of a system, it is generally considered important to make the system's components redundant and to eliminate single points of failure. A "single point of failure" is a weakness that arises when the system has only one instance of a computer component (hardware component): if that component fails, services are interrupted. The high availability (HA) cluster is a cluster system that minimizes the time during which the system is stopped and increases operational availability by establishing redundancy with multiple nodes.

The HA cluster is called for in mission-critical systems where downtime is fatal. The HA cluster can be divided into two types: the shared disk type and the mirror disk type. Each type is explained below.

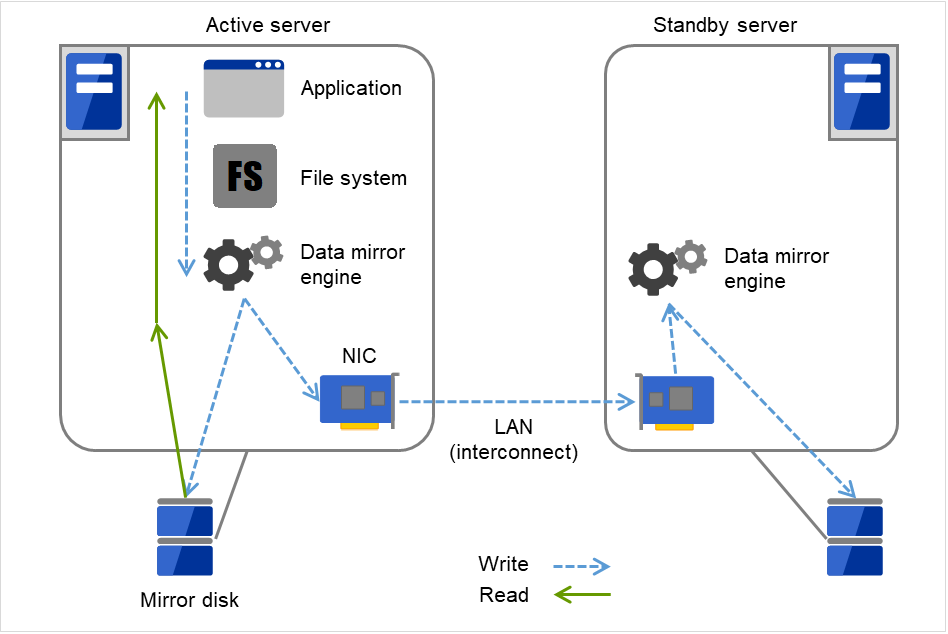

2.2.2. Mirror disk type

The shared disk type cluster system is good for large-scale systems. However, building a system of this type can be costly because shared disks are generally expensive. The mirror disk type cluster system provides the same functions as the shared disk type at a lower cost by mirroring the servers' disks.

The mirror disk type is not recommended for large-scale systems that handle a large volume of data, since the data needs to be mirrored between servers.

When an application makes a write request, the data mirror engine writes the data to the local disk and sends the written data to the standby server via the interconnect. The interconnect is a cable connecting the servers. In a cluster system, it is used to monitor whether each server is running, and in a data mirror type cluster system it is sometimes also used to transfer data. The data mirror engine on the standby server keeps the standby and active servers synchronized by writing the received data to the standby server's local disk.

For read requests from an application, data is simply read from the disk on the active server.

Fig. 2.5 Data mirror mechanism

Snapshot backup is one application of data mirroring. Because a data mirror type cluster system holds the shared data in two locations, you can keep the data on the standby server as a snapshot backup simply by separating that server from the cluster.
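The write and read paths described above, and the snapshot idea, can be sketched in a few lines of Python. This is a toy in-memory model under stated assumptions: the `Disk` and `MirrorEngine` classes are hypothetical, the "disks" are dictionaries, and the interconnect is simulated by a direct method call on the standby engine. A real data mirror engine works at the block-device level and must also handle interconnect failures and resynchronization, which this sketch ignores.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Disk:
    """Toy local disk: block number -> data."""
    blocks: Dict[int, bytes] = field(default_factory=dict)

    def write(self, block: int, data: bytes) -> None:
        self.blocks[block] = data

    def read(self, block: int) -> bytes:
        return self.blocks[block]


class MirrorEngine:
    """Hypothetical data mirror engine running on one server."""

    def __init__(self, local: Disk, peer: Optional["MirrorEngine"] = None) -> None:
        self.local = local
        self.peer = peer  # stands in for the interconnect to the standby server

    def write(self, block: int, data: bytes) -> None:
        # 1. Write to the active server's local disk.
        self.local.write(block, data)
        # 2. Send the written data to the standby server, which writes it
        #    to its own local disk (simulated here as a direct call).
        if self.peer is not None:
            self.peer.receive(block, data)

    def receive(self, block: int, data: bytes) -> None:
        self.local.write(block, data)

    def read(self, block: int) -> bytes:
        # Reads are served from the active server's local disk only.
        return self.local.read(block)


standby = MirrorEngine(Disk())
active = MirrorEngine(Disk(), peer=standby)
active.write(0, b"order 1")
active.write(1, b"order 2")

# Snapshot backup: separate the standby from the mirror and keep its copy.
active.peer = None
snapshot = dict(standby.local.blocks)
active.write(1, b"order 2 (updated)")  # no longer reaches the standby
```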

HA cluster mechanism and problems

The following sections describe cluster implementation and related problems.

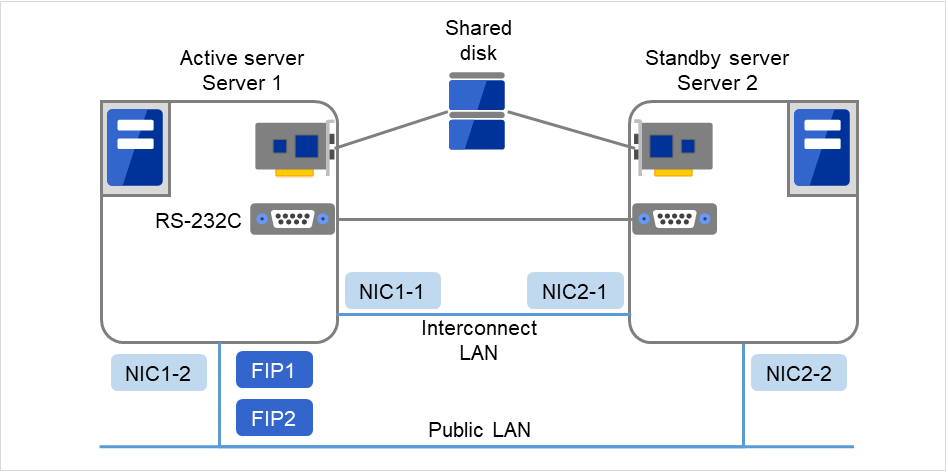

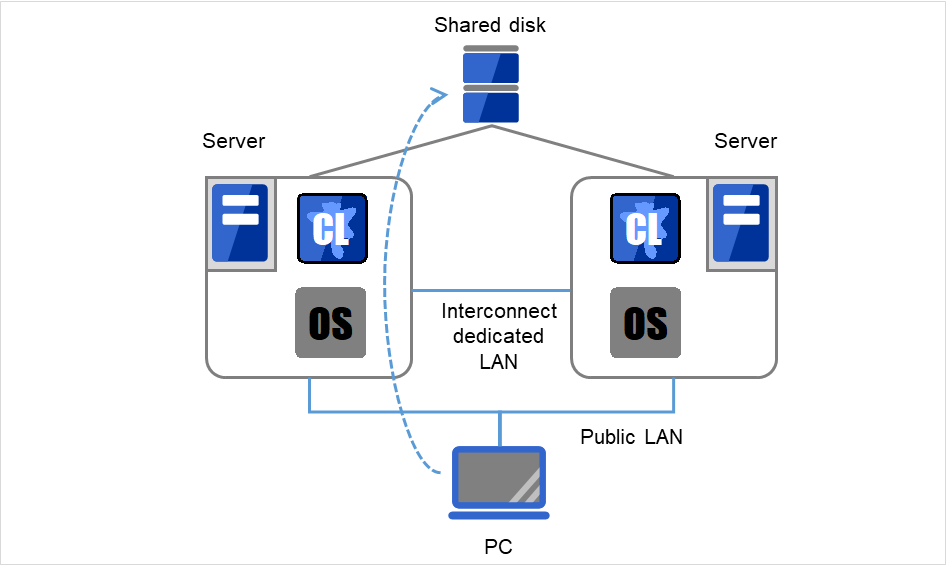

2.3. System configuration

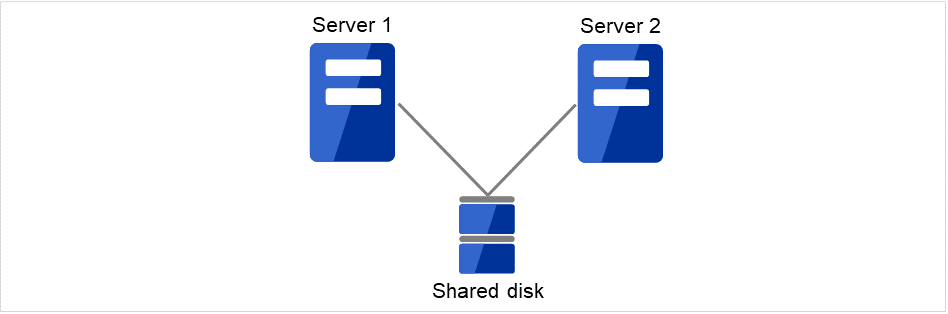

In a shared disk type cluster, a disk array device is shared between the servers in the cluster. When an error occurs on a server, the standby server takes over the applications, using the data on the shared disk.

In a mirror disk type cluster, a data disk on the cluster servers is mirrored via the network. When an error occurs on a server, the applications are taken over using the mirrored data on the standby server. Because data is mirrored for every I/O, a mirror disk type cluster looks the same as a shared disk type cluster from the viewpoint of higher-level applications.

The following figure shows the shared disk type cluster configuration.

Fig. 2.6 System configuration

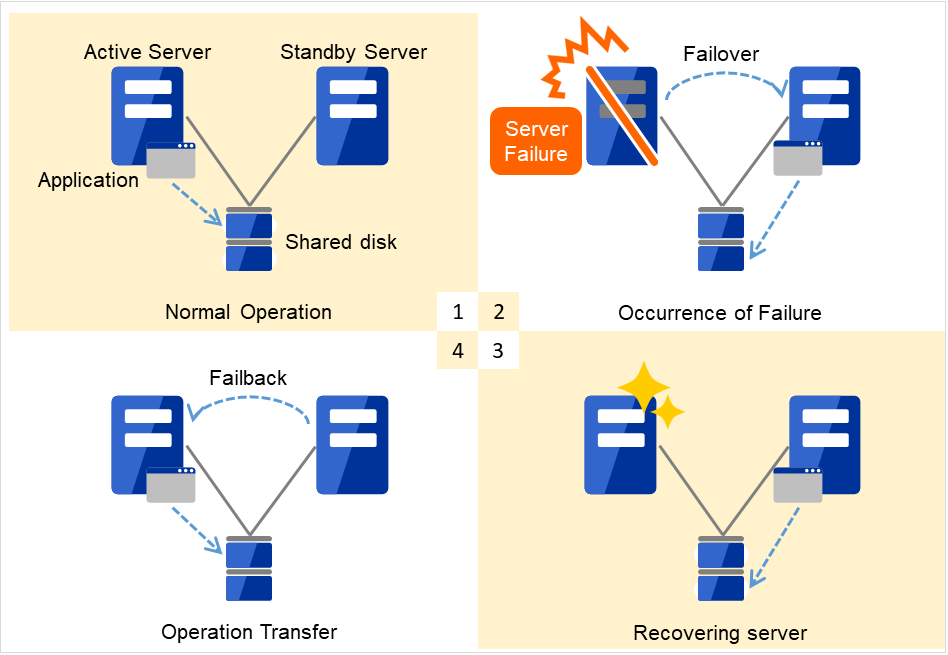

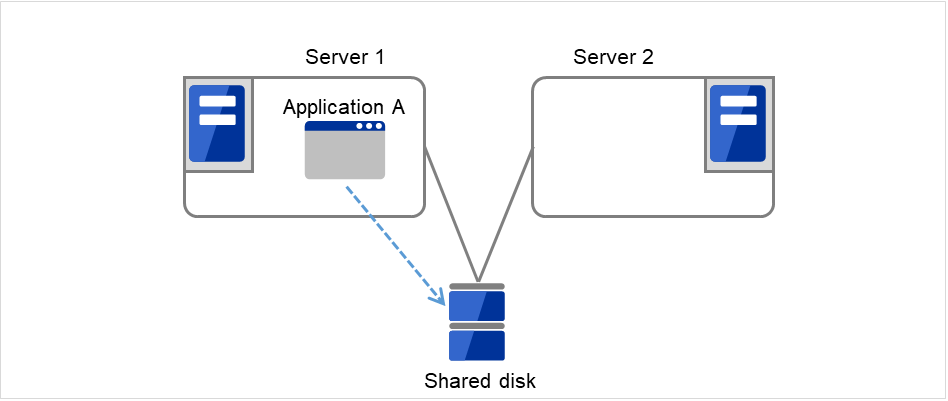

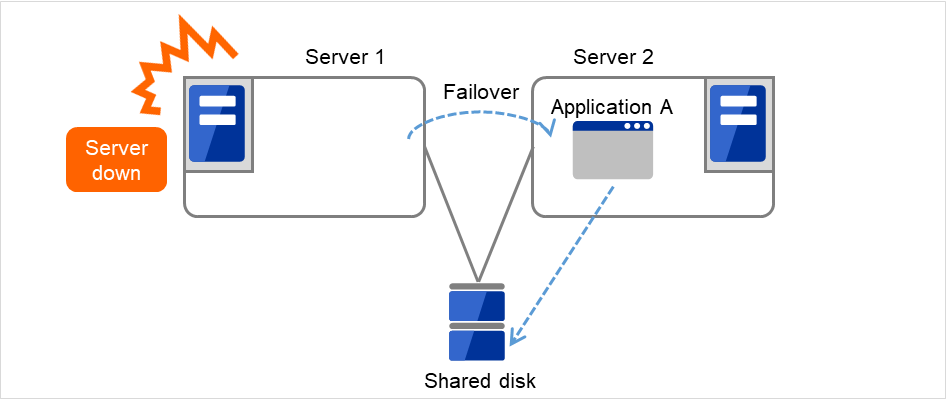

A failover-type cluster can be divided into the following categories depending on the cluster topology:

Uni-Directional Standby Cluster System

In the uni-directional standby cluster system, the active server runs applications while the other server, the standby server, does not. This is the simplest cluster topology, and it lets you build a high-availability system without performance degradation after a failover.

Fig. 2.7 Uni-directional standby cluster (1)

Fig. 2.8 Uni-directional standby cluster (2)

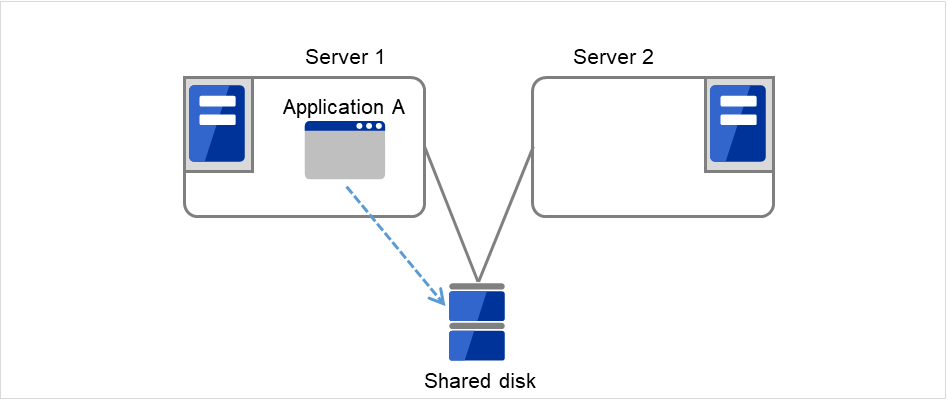

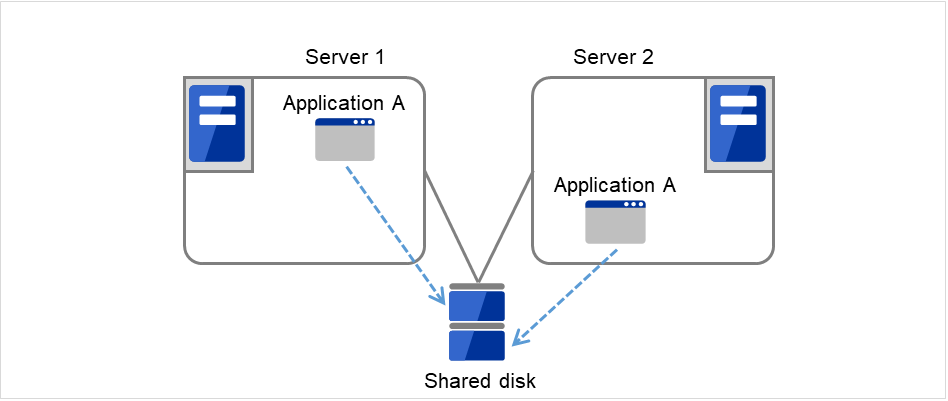

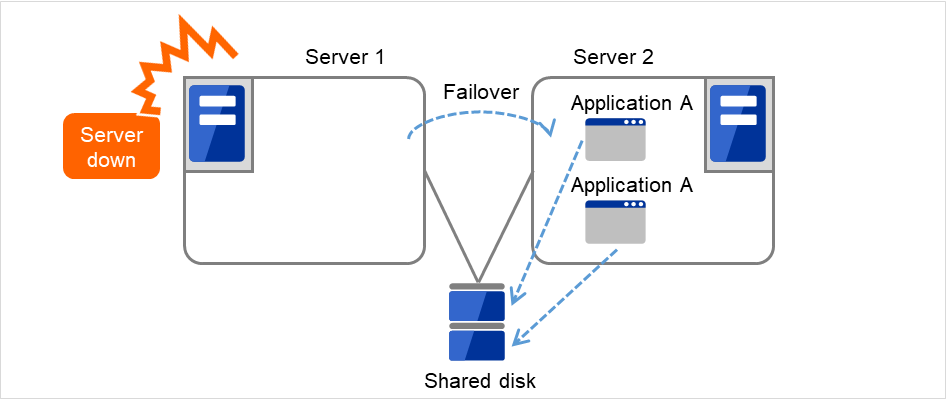

Multi-directional standby cluster system with the same application

In the same application multi-directional standby cluster system, the same application is activated on multiple servers, and each of these servers also operates as a standby server. Each instance of the application runs independently. When a failover occurs, two or more instances of the same application run on one server, so the application must be able to run in this way. If the application data can be partitioned, you can build a load distribution system on a per-partition basis by changing which server a client connects to, depending on the data it accesses.

Fig. 2.9 Multi-directional standby cluster system with the same application (1)

Fig. 2.10 Multi-directional standby cluster system with the same application (2)
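Load distribution per data partition can be illustrated with a small Python sketch of client-side routing. The partition names, the server names, and the `route` function are all hypothetical; the sketch only shows the decision described above (which server a client connects to for a given piece of data, before and after a failover) and is not how EXPRESSCLUSTER itself routes clients.

```python
from typing import Dict, Set, Tuple

# Hypothetical layout: each data partition has a primary server and a
# standby server, and both servers run the same application.
PARTITIONS: Dict[str, Tuple[str, str]] = {
    "customers_a_to_m": ("server1", "server2"),
    "customers_n_to_z": ("server2", "server1"),
}


def route(partition: str, down: Set[str]) -> str:
    """Return the server a client should connect to for `partition`."""
    primary, standby = PARTITIONS[partition]
    if primary not in down:
        return primary
    if standby not in down:
        # After a failover, the standby runs a second instance of the
        # same application to serve this partition.
        return standby
    raise RuntimeError(f"no server available for {partition}")


# Normal operation: each server serves one partition.
print({p: route(p, down=set()) for p in PARTITIONS})
# After server1 fails, server2 serves both partitions.
print({p: route(p, down={"server1"}) for p in PARTITIONS})
```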

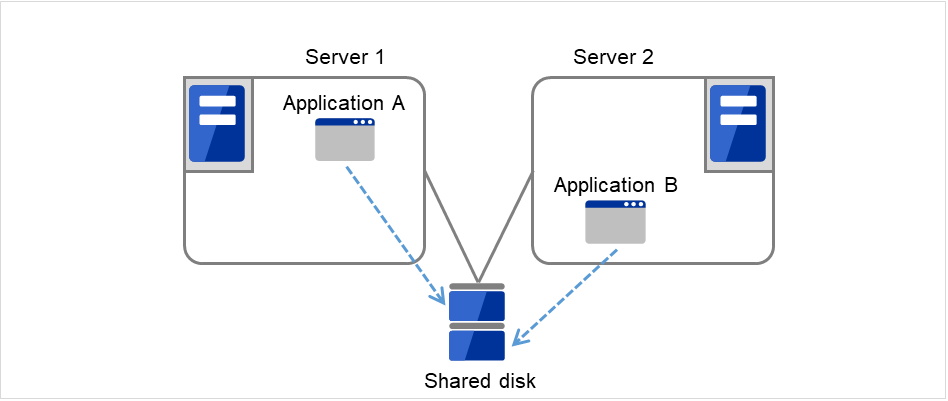

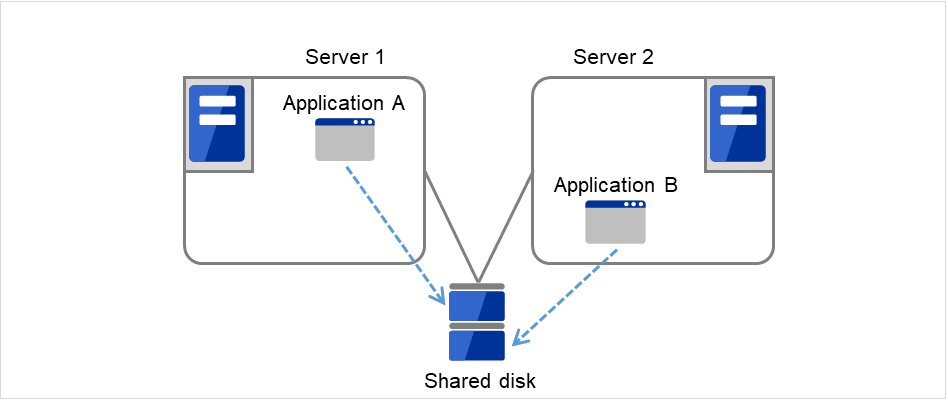

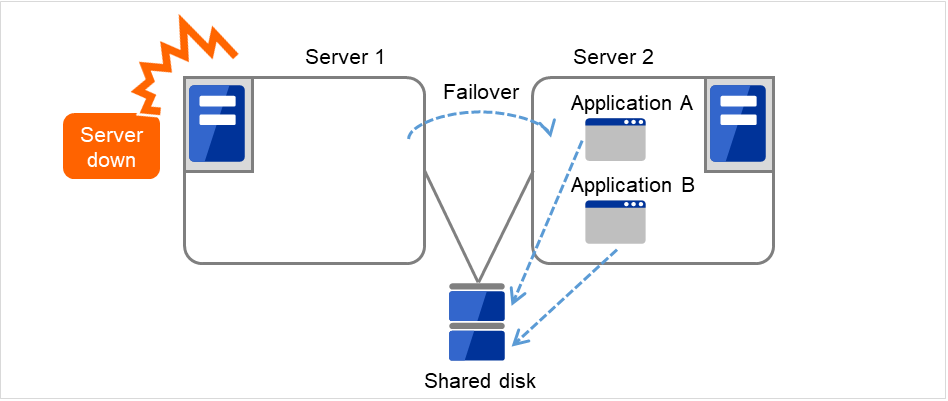

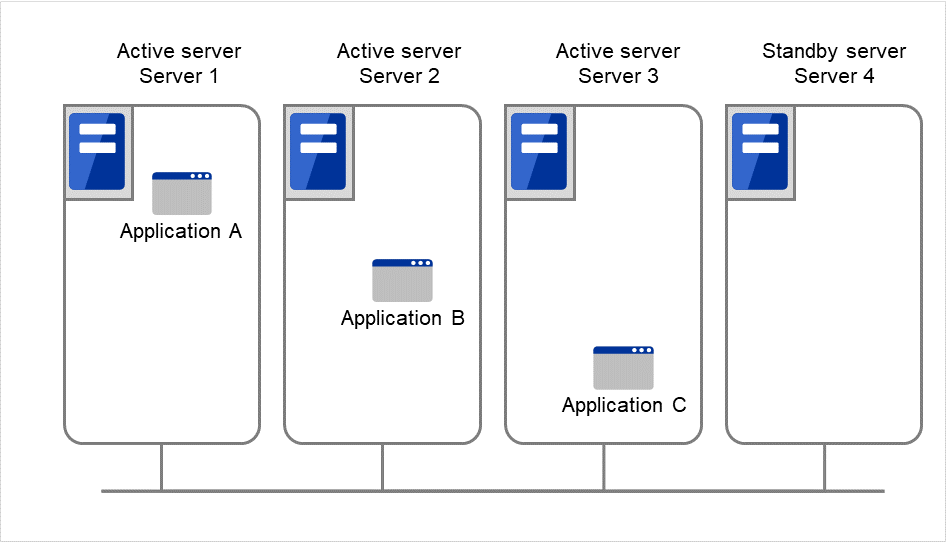

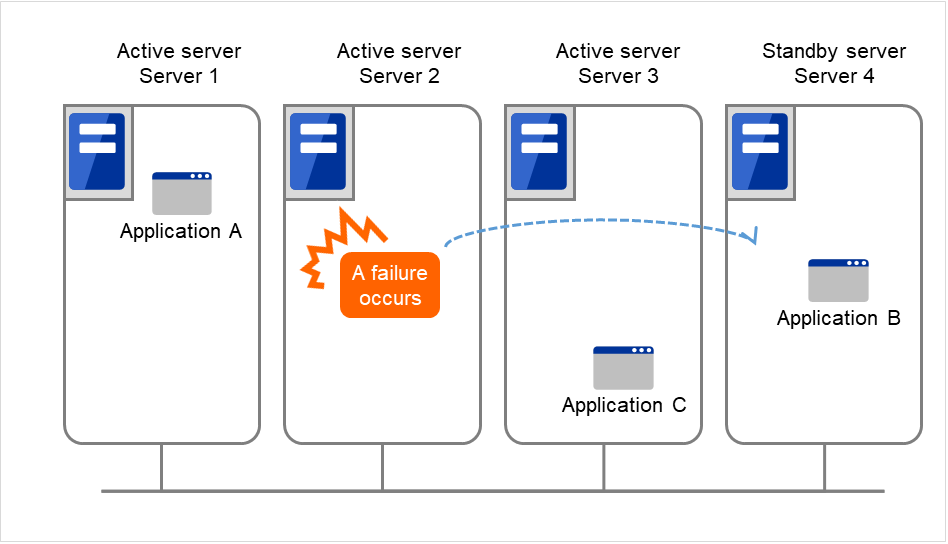

Multi-directional standby cluster system with different applications

In the different application multi-directional standby cluster system, different applications are activated on multiple servers, and these servers also operate as standby servers for each other. When a failover occurs, two or more applications run on one server, so these applications must be able to coexist. You can build a load distribution system on a per-application basis.

Application A and Application B are different applications.

Fig. 2.11 Multi-directional standby cluster system with different applications (1)

Fig. 2.12 Multi-directional standby cluster system with different applications (2)

N-to-N Configuration

The configuration can be expanded to more nodes by applying the configurations introduced so far. In the N-to-N configuration described below, three different applications run on three servers, and one standby server takes over an application if a problem occurs. In a uni-directional standby cluster system, the standby server runs nothing, so one of the two servers sits idle as a standby server. In an N-to-N configuration, by contrast, only one of the four servers functions as a standby server. Performance deterioration is not expected as long as an error occurs on only one server.

Fig. 2.13 Node to node configuration (1)

Fig. 2.14 Node to node configuration (2)
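The standby-ratio argument can be checked with a little arithmetic. The following Python sketch, using hypothetical server and application names, computes the share of servers that sit idle as standby servers and moves the applications of one failed server to the shared standby server in a 3+1 N-to-N layout.

```python
from typing import Dict, List


def standby_share(active_servers: int, standby_servers: int) -> float:
    """Fraction of all servers that only wait as standby servers."""
    return standby_servers / (active_servers + standby_servers)


def fail_over(placement: Dict[str, List[str]], failed: str,
              standby: str) -> Dict[str, List[str]]:
    """Return a new placement with the failed server's applications
    moved to the standby server (the input is not modified)."""
    new = {server: list(apps) for server, apps in placement.items()}
    new[standby].extend(new.pop(failed))
    return new


print(standby_share(1, 1))  # uni-directional standby: 0.5
print(standby_share(3, 1))  # N-to-N with one standby: 0.25

placement = {"server1": ["AP-A"], "server2": ["AP-B"],
             "server3": ["AP-C"], "server4": []}
after = fail_over(placement, failed="server2", standby="server4")
print(after)
```

After a single failure every remaining server still runs at most one application, which is why no performance deterioration is expected in that case; a second failure would put two applications on server4.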

2.4. Error detection mechanism

Cluster software executes a failover (that is, hands over operations) when it detects a failure that can affect continued operation. The following section gives a quick overview of how cluster software detects a failure.

EXPRESSCLUSTER regularly checks whether the other servers in the cluster system are working properly. This function is called "heartbeat communication."

Heartbeat and detection of server failures

The first kind of failure that a cluster system must detect is one that stops an entire server. Server failures include hardware failures, such as power supply and memory failures, and OS panics. To detect such failures, the heartbeat is used to monitor whether the server is active.

Some cluster software programs use the heartbeat not only to check whether the target is active through a ping response, but also to send status information about the local server. Such programs start a failover if no heartbeat response is received, treating the lack of response as a server failure. However, a grace period should be allowed before a failure is declared, because a heavily loaded server may respond late. Allowing a grace period introduces a time lag between the moment a failure occurs and the moment the cluster software detects it.
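The grace period can be sketched as a per-server timeout on the time of the last received heartbeat. In this Python sketch the `HeartbeatMonitor` class is hypothetical, the clock is injectable so the example runs without waiting, and the 30-second timeout is borrowed from the default heartbeat timeout stated in the Failover mechanism section of this guide. Real cluster software also carries status information in the heartbeat, which is omitted here.

```python
import time
from typing import Callable, Dict, List


class HeartbeatMonitor:
    """Declares a server failed only after `timeout` seconds of silence."""

    def __init__(self, timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout  # the grace period before declaring failure
        self.clock = clock
        self.last_seen: Dict[str, float] = {}

    def heartbeat(self, server: str) -> None:
        # Called whenever a heartbeat arrives from `server`.
        self.last_seen[server] = self.clock()

    def failed_servers(self) -> List[str]:
        now = self.clock()
        return [s for s, t in self.last_seen.items() if now - t > self.timeout]


now = [0.0]  # fake clock so the example does not have to sleep
monitor = HeartbeatMonitor(timeout=30.0, clock=lambda: now[0])
monitor.heartbeat("server2")
now[0] = 20.0  # a slow, heavily loaded server: still within the grace period
print(monitor.failed_servers())  # []
now[0] = 31.0  # no heartbeat for more than 30 seconds
print(monitor.failed_servers())  # ['server2']
```

The trade-off described above is visible here: a larger timeout tolerates slow responses, but delays detection of a real failure by up to that amount.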

Detection of resource failures

Operations can be stopped by more than the failure of an entire server. Failures in disks used by applications, NIC failures, and failures in the applications themselves can also stop operations. These resource failures must be detected as well so that a failover can be executed to improve availability.

If the target resource is a physical device, resource failures are detected by accessing that resource. For applications, in addition to checking whether the application processes are running, errors can be detected by trying to access the service ports in a way that does not affect operations.
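A service-port check of the kind described above can be as simple as opening and closing a TCP connection. The Python sketch below uses only the standard `socket.create_connection` function; the function name, host, and port are placeholders, and a real application monitor would usually go further (for example, sending a harmless request and checking the reply).

```python
import socket


def service_port_alive(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established.

    The connection is closed immediately without sending a request,
    so the check does not affect the service itself.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, `service_port_alive("192.0.2.10", 80)` (a documentation-range address used as a placeholder) would return False once the web server on that host stopped listening.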

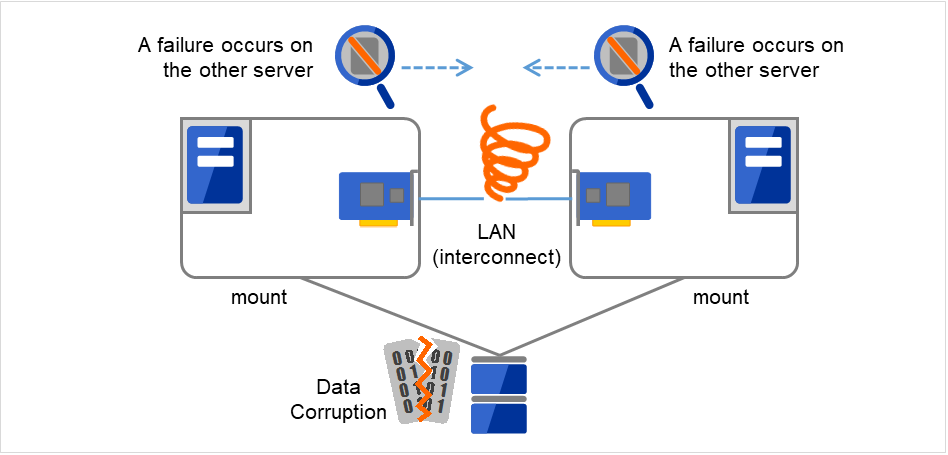

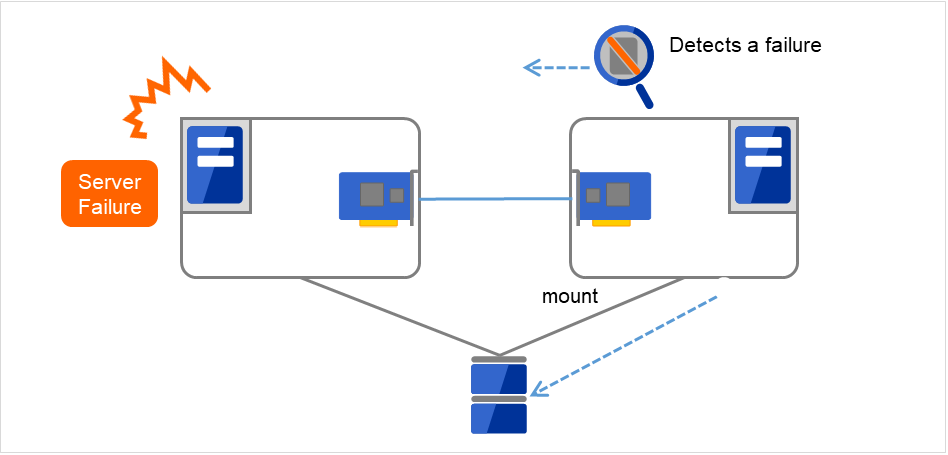

2.4.2. Network partition (Split-Brain Syndrome)

When all interconnects between servers are disconnected, heartbeat monitoring alone cannot tell whether the other server is down. If a failover is performed in this state, each server may assume that the other has shut down, and multiple servers may mount the same file system simultaneously, corrupting the data on the shared disk.

Fig. 2.15 Network partition

The problem explained above is referred to as "network partition" or "Split Brain Syndrome." To resolve this problem, failover cluster systems are equipped with various mechanisms that ensure exclusive access to (locking of) the shared disk when all interconnects are disconnected.

2.5. Inheriting cluster resources

As mentioned earlier, resources to be managed by a cluster include disks, IP addresses, and applications. The functions used in the failover cluster system to inherit these resources are described below.

2.5.1. Inheriting data

In the shared disk type cluster, data to be passed from one server to another is stored in a partition on the shared disk. Inheriting data therefore means re-mounting, on a healthy server, the file system containing the files the application uses. The cluster software only needs to mount the file system, because the shared disk is physically connected to the server that inherits the data.

Fig. 2.16 Inheriting data

The diagram above (Figure 2.16 Inheriting data) may look simple, but consider the following issues when designing and creating a cluster system.

One issue to consider is the recovery time for a file system or database. A file to be inherited may have been in use by another server, or in the middle of being updated, just before the failure occurred. For this reason, a cluster system may need to run consistency checks on the data it is moving for some file systems, and may need to roll back data for some database systems. These checks are not specific to cluster systems; they are required in many recovery processes, including when you reboot a single server that shut down due to a power failure. If this recovery takes a long time, the whole time is added to the failover time (the time to take over operations), which reduces system availability.

Another issue to consider is write assurance. When an application writes data to the shared disk, the data is usually written through a file system. However, even after the application has written the data, the file system may have stored it only in a disk cache without writing it to the shared disk. If the active server shuts down at that point, the data in the disk cache is not inherited by the standby server. For this reason, important data that must be inherited by the standby server needs to be written to the disk by using a function such as synchronous writing. This is the same precaution that prevents data loss when a single server shuts down. In other words, only the data written to the shared disk is inherited by the standby server; data held in memory, such as a disk cache, is not. The cluster system needs to be configured with these issues in mind.
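Synchronous writing is usually requested from the application side. As one example (not specific to EXPRESSCLUSTER), Python exposes the operating system's flush call as `os.fsync`, which calls `fsync()` on Unix and `_commit()` on Windows. The function name below is hypothetical, and whether the disk device's own write cache is also flushed depends on the OS, driver, and hardware.

```python
import os


def write_durably(path: str, data: bytes) -> None:
    """Write `data` to `path` and return only after it has been handed
    to the disk, not just left in a cache."""
    with open(path, "wb") as f:
        f.write(data)
        f.flush()             # move Python's buffer into the OS cache
        os.fsync(f.fileno())  # ask the OS to write its cache to the disk
```

In a shared disk type cluster, `path` would point to a file on the shared disk partition (for example, a hypothetical `journal.dat`), so that a record is on the disk before the application reports it as committed.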

2.5.2. Inheriting IP addresses

Because the IP addresses are inherited when a failover occurs, clients do not need to be aware of which server is running the operations. The cluster software inherits the IP addresses for this purpose.

2.5.3. Inheriting applications

The last step in the takeover of operations by cluster software is the inheritance of applications. Unlike fault tolerant computers (FTC), typical failover cluster systems do not inherit process status such as memory contents. The applications that were running on the failed server are inherited by rerunning them on a healthy server.

For example, when a database instance fails over, the database started on the standby server cannot continue the exact processes and transactions that were running on the failed server; transactions are rolled back in the same way as when the database is restarted after going down. Clients must reconnect to the database. The time needed for this database recovery is typically a few minutes, although it can be controlled to some extent by configuring the DBMS checkpoint interval.
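Because clients must reconnect after a database failover, client code typically retries the connection for a while instead of failing on the first error. In this Python sketch, `connect` stands for whatever connect call the database driver provides (hypothetical here), `ConnectionError` is a placeholder for the driver's own connection exception, and the defaults (10 attempts, 15 seconds apart, so roughly two and a quarter minutes of waiting) are assumptions to be tuned to the expected recovery time.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_retry(connect: Callable[[], T], attempts: int = 10,
                       delay: float = 15.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `connect` until it succeeds or `attempts` calls have failed."""
    last_error: Exception = RuntimeError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except ConnectionError as exc:  # database still recovering
            last_error = exc
            if attempt < attempts:
                sleep(delay)
    raise last_error
```

Work in any transaction that was rolled back during the failover still has to be resubmitted by the client after it reconnects.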

Many applications can resume operations simply by being re-executed. Some applications, however, require recovery procedures after a failure. For these applications, cluster software lets you start scripts instead of the applications themselves so that the recovery process can be written in the script. In a script, the recovery process, including cleanup of half-updated files, is written as necessary according to why the script was executed and information about the server executing it.
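A start script of this kind might look like the following Python sketch. The `.tmp` naming rule for half-updated files is a hypothetical convention of the application, and reading the reason for execution from an environment variable is modeled on the environment variables that EXPRESSCLUSTER passes to scripts; the name `CLP_EVENT` and the values `FAILOVER` and `RECOVER` are assumptions to verify in the "Reference Guide" before relying on them.

```python
import os
from pathlib import Path
from typing import List


def cleanup_half_updated(data_dir: Path, suffix: str = ".tmp") -> List[str]:
    """Delete files the failed server left half-written (hypothetical rule:
    such files end with `suffix`) and return their names."""
    removed = []
    for path in sorted(data_dir.glob(f"*{suffix}")):
        path.unlink()
        removed.append(path.name)
    return removed


def start_script(data_dir: Path) -> List[str]:
    # Assumption: the reason for running the script is passed in an
    # environment variable; check the actual name and values.
    event = os.environ.get("CLP_EVENT", "START")
    removed: List[str] = []
    if event in ("FAILOVER", "RECOVER"):
        removed = cleanup_half_updated(data_dir)
    # A real script would now start the application itself.
    return removed
```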

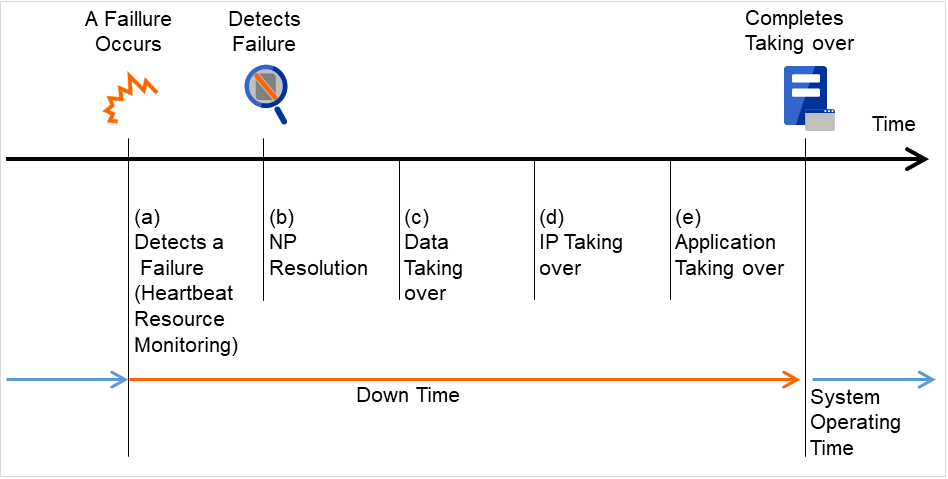

2.5.4. Summary of failover

To summarize, cluster software:

Detects a failure (heartbeat/resource monitoring)

Resolves a network partition (NP resolution)

Passes data

Passes IP addresses

Passes applications
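The order of these steps matters: data and the IP address must not be taken over until the network partition has been resolved in this server's favor. The Python sketch below expresses the summary as an ordered list of hypothetical step functions and stops at the first step that fails; the placeholder lambdas stand in for the real work (mounting the file system, bringing up the IP address, running start scripts).

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def failover(steps: List[Step]) -> List[str]:
    """Run the takeover steps in order and return the names of those
    completed; raise if any step fails so later steps never run."""
    completed = []
    for name, action in steps:
        if not action():
            raise RuntimeError(f"failover stopped at: {name}")
        completed.append(name)
    return completed


# Placeholder actions that always succeed.
steps: List[Step] = [
    ("detect failure", lambda: True),
    ("resolve network partition", lambda: True),
    ("pass data", lambda: True),
    ("pass IP address", lambda: True),
    ("pass applications", lambda: True),
]
print(failover(steps))
```

If the network partition step returned False (for example, because this server lost the resolution), `failover` would raise before touching the shared disk, which is the protection described in the section on network partitions.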

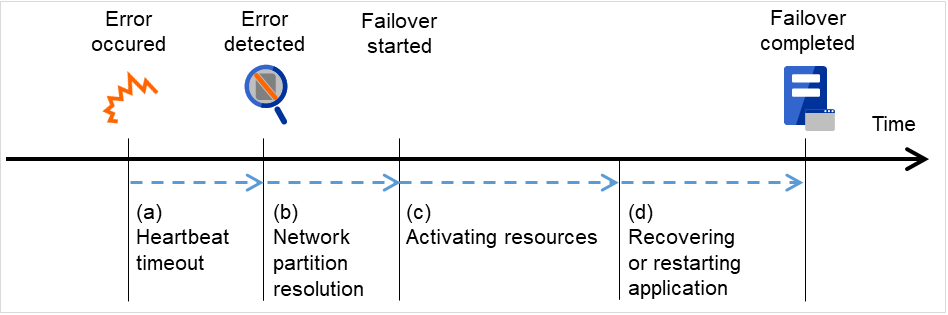

Fig. 2.17 Failover time chart

Cluster software is required to complete each task quickly and reliably (see Figure 2.17 Failover time chart). Cluster software achieves high availability by taking into account everything described so far.

2.6. Eliminating single point of failure

Having a clear picture of the required or targeted availability level is important in building a high availability system. When you design a system, you need to study the cost effectiveness of countermeasures against the various failures that can disturb system operations, such as establishing a redundant configuration to continue operations, or recovering operations within a short period.

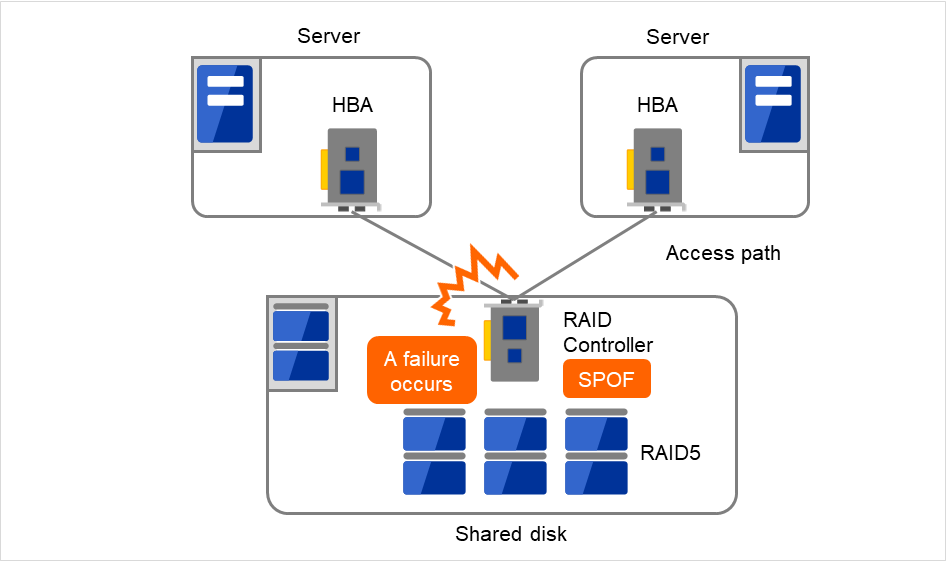

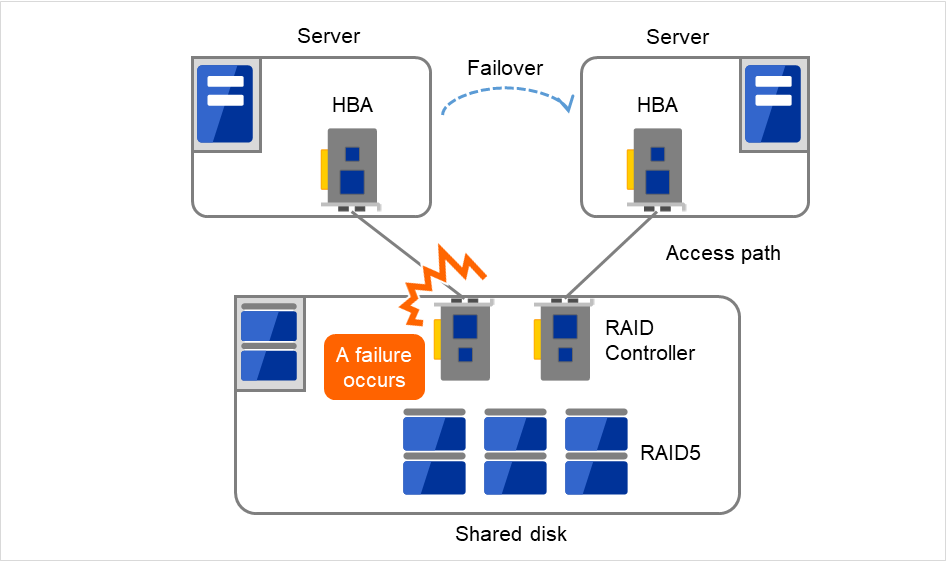

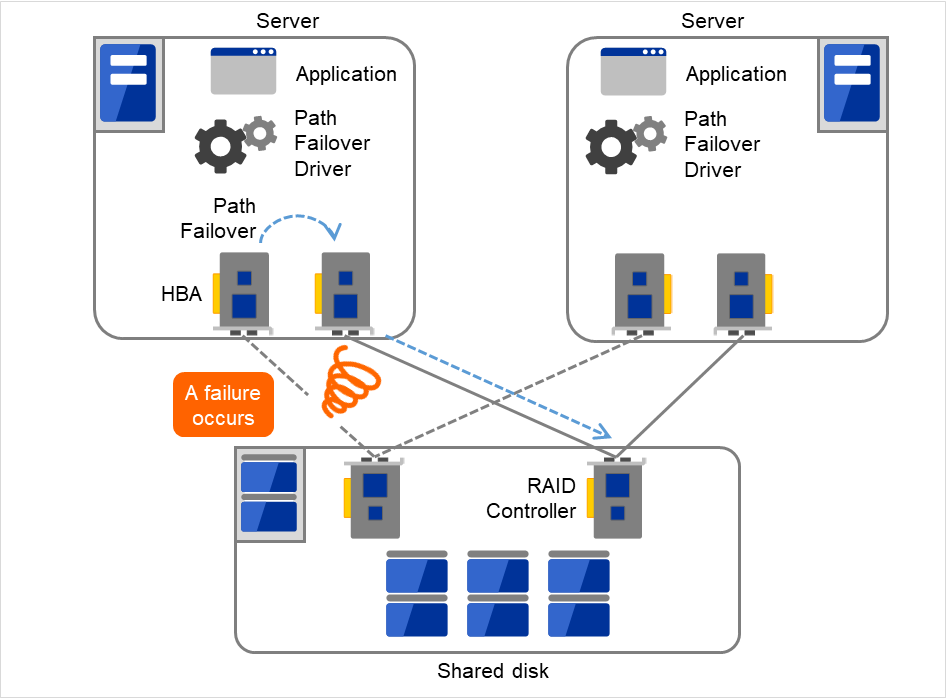

A single point of failure (SPOF), as described previously, is a component whose failure can stop the system. In a cluster system, you can eliminate the servers as a SPOF by making them redundant. However, components shared among servers, such as the shared disk, may become a SPOF. The key in designing a high availability system is to duplicate or eliminate such shared components.

A cluster system can improve availability, but a failover takes a few minutes to switch systems, so the failover time itself is a factor that reduces availability. Technical measures that improve the availability of a single server, such as ECC memory and redundant power supplies, are important, but the following sections discuss solutions for the three components that are likely to become a SPOF:

Shared disk

Access path to the shared disk

LAN
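To see why failover time matters, its effect on availability can be worked out directly. The Python sketch below treats availability as the fraction of a year during which the service is up; the failure counts and per-failover durations are made-up illustration values, not measurements.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def availability(failovers_per_year: int, minutes_per_failover: float) -> float:
    """Fraction of the year the service is up, counting only failover time."""
    downtime = failovers_per_year * minutes_per_failover
    return 1 - downtime / MINUTES_PER_YEAR


# Four failovers a year at five minutes each: 20 minutes of downtime.
print(f"{availability(4, 5):.5%}")  # 99.99619%
# The same failures with two-minute failovers.
print(f"{availability(4, 2):.5%}")
```

Every failover adds its full duration to the downtime, so both reducing the number of failures (removing SPOFs) and shortening each failover improve the result.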

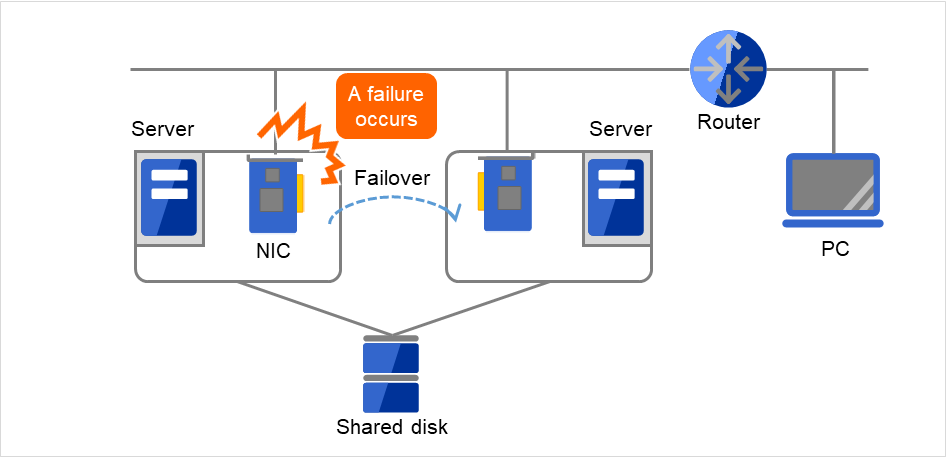

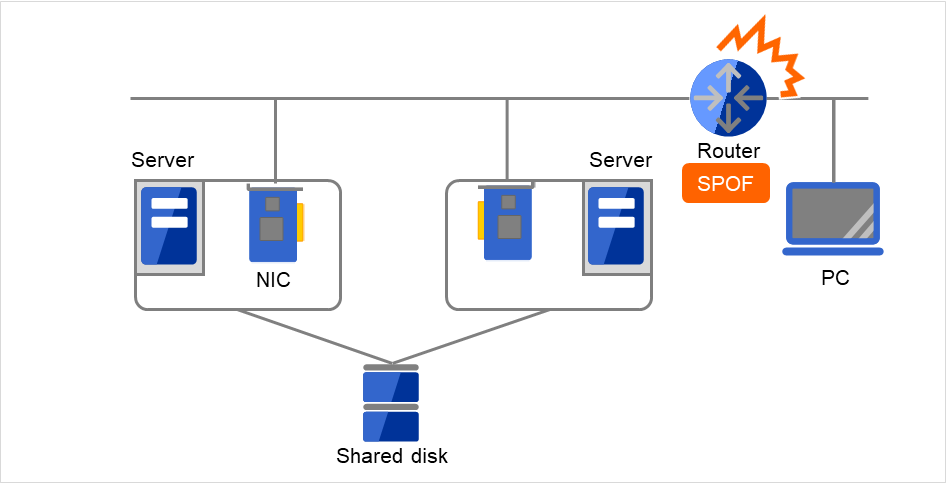

2.6.3. LAN

In any system that runs services on a network, a LAN failure is a major factor that disturbs system operations. With appropriate settings, the availability of a cluster system can be increased through failover between nodes when a NIC fails. However, a failure in a network device outside the cluster system still disturbs system operation.

Fig. 2.21 Example of a failure with LAN (NIC)

In the case shown in the figure above, even if a NIC on the server fails, a failover maintains access from the PC to the service on the server.

Fig. 2.22 Example of a failure with LAN (Router)

In the case shown in the figure above, if the router fails, access from the PC to the service on the server cannot be maintained (the router becomes a SPOF).

LAN redundancy is a way to deal with failures of devices outside the cluster system and to improve availability. The methods used to increase LAN availability for a single server also apply here. For example, a primitive method is to keep a spare network device with its power off and manually replace a failed device with it. Another is to build multiplexed network paths through a redundant configuration of high-performance network devices and switch paths automatically. Yet another option is to use a driver that supports a redundant NIC configuration, such as Intel's ANS driver.

Load balancing appliances and firewall appliances are also network devices that are likely to become a SPOF. Typically, they support failover configurations through standard or optional software. A redundant configuration for these devices should be regarded as a requirement, since they play important roles in the entire system.

2.7. Operation for availability

2.7.1. Evaluation before starting operation

Since many system problems are said to result from incorrect settings or poor maintenance, evaluation before actual operation is important for realizing a high availability system and stable operation. The following practices are key to improving availability in actual operation:

Clarify and list failures, study actions to be taken against them, and verify the effectiveness of those actions by creating dummy failures.

Conduct an evaluation according to the cluster life cycle and verify performance (such as in degraded mode).

Prepare a guide for system operation and troubleshooting based on the evaluation mentioned above.

Having a simple design for a cluster system contributes to simplifying verification and improvement of system availability.

2.7.2. Failure monitoring

Despite the above efforts, failures still occur. If you use a system for a long time, you cannot avoid failures: hardware suffers from aging deterioration, and software produces failures and errors through memory leaks or operation beyond its originally intended capacity. Improving the availability of hardware and software is important, but monitoring for failures and troubleshooting problems is even more important. For example, in a cluster system, you can keep the system running with only a few minutes of switchover even if a server fails. However, if you leave the failed server as it is, the system no longer has redundancy, and the cluster system becomes meaningless should the next failure occur.

If a failure occurs, the system administrator must immediately take actions such as removing a newly emerged SPOF to prevent another failure. Functions for remote maintenance and reporting failures are very important in supporting services for system administration.

To achieve high availability with a cluster system, you should:

Remove single points of failure, or keep them under complete control.

Have a simple design that has tolerance and resistance for failures, and be equipped with a guide for operation and troubleshooting.

Detect a failure quickly and take appropriate action against it.

3. Using EXPRESSCLUSTER

This chapter explains the components of EXPRESSCLUSTER, how to design a cluster system, and how to use EXPRESSCLUSTER.

3.1. What is EXPRESSCLUSTER?

EXPRESSCLUSTER is software that enables the HA cluster system.

3.2. EXPRESSCLUSTER modules

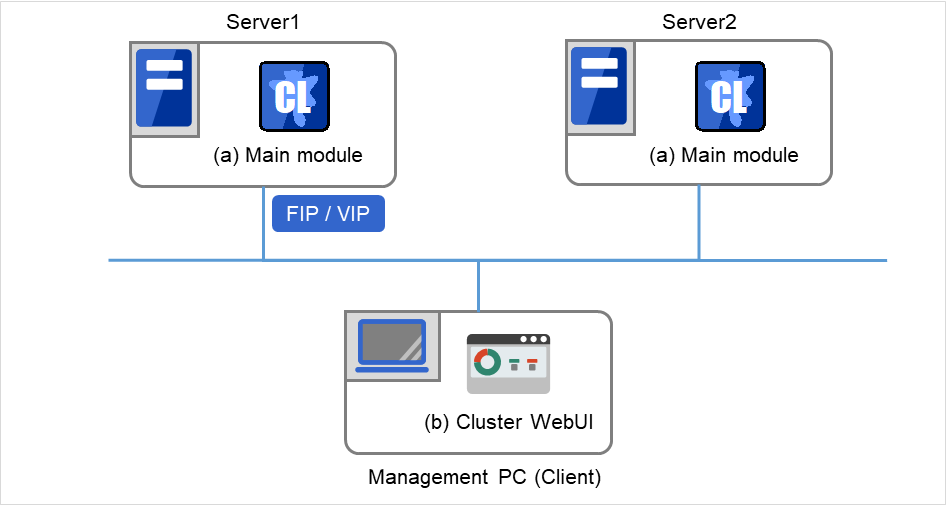

EXPRESSCLUSTER consists of following two modules:

- EXPRESSCLUSTER Server: The core component of EXPRESSCLUSTER. Install it on the server machines that constitute the cluster system. It includes all the high availability functions of EXPRESSCLUSTER, as well as the server functions of the Cluster WebUI.

- Cluster WebUI: A tool to create EXPRESSCLUSTER configuration data and to manage EXPRESSCLUSTER operations. It uses a Web browser as its user interface. The Cluster WebUI is installed as part of the EXPRESSCLUSTER Server, but it is treated separately from the EXPRESSCLUSTER Server because it is operated from the Web browser on the management PC.

3.3. Software configuration of EXPRESSCLUSTER

The software configuration of EXPRESSCLUSTER should look similar to the figure below. Install the EXPRESSCLUSTER Server (software) on a server that constitutes a cluster. Because the main functions of Cluster WebUI are included in EXPRESSCLUSTER Server, it is not necessary to separately install them. The Cluster WebUI can be used through the web browser on the management PC or on each server in the cluster.

EXPRESSCLUSTER Server (Main module)

Cluster WebUI

Fig. 3.1 Software configuration of EXPRESSCLUSTER

3.3.1. How an error is detected in EXPRESSCLUSTER

There are three kinds of monitoring in EXPRESSCLUSTER: (1) server monitoring, (2) application monitoring, and (3) internal monitoring. These monitoring functions let you detect an error quickly and reliably. The details of the monitoring functions are described below.

3.3.2. What is server monitoring?

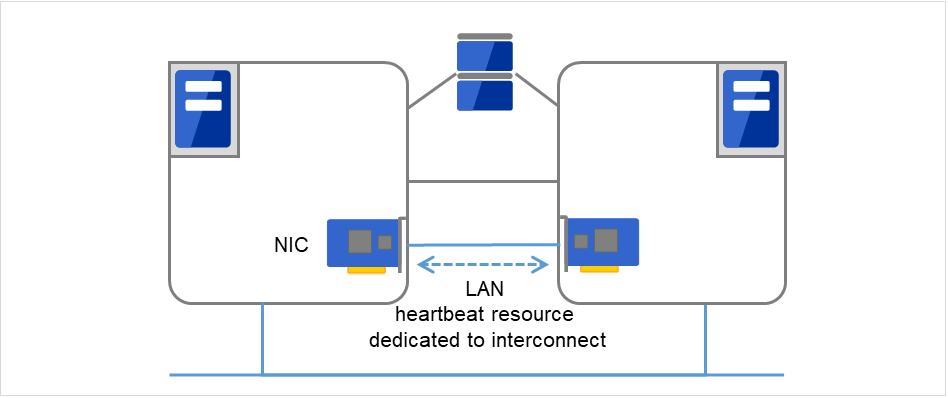

- Primary Interconnect: A LAN dedicated to communication between the cluster servers. It is used to exchange information between the servers as well as to perform heartbeat communication.

Fig. 3.2 LAN heartbeat/Kernel mode LAN heartbeat (Primary Interconnect)

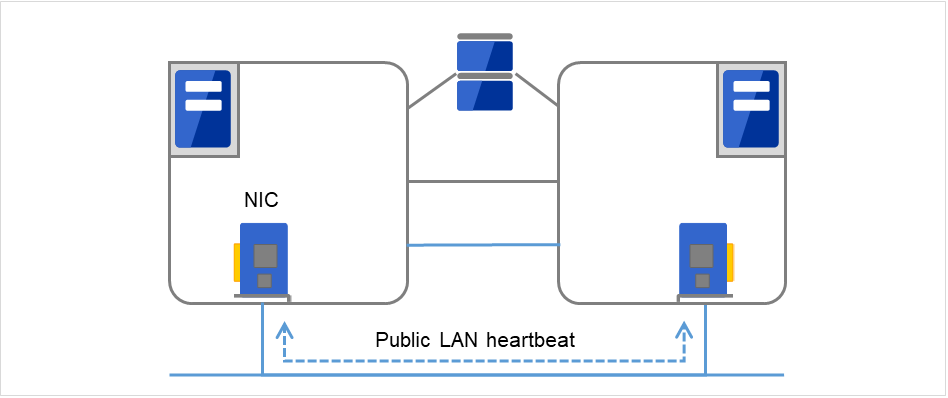

- Secondary Interconnect: A LAN used as the communication path with clients. It is also used for exchanging data between the servers and as a backup interconnect.

Fig. 3.3 LAN heartbeat/Kernel mode LAN heartbeat (Secondary Interconnect)

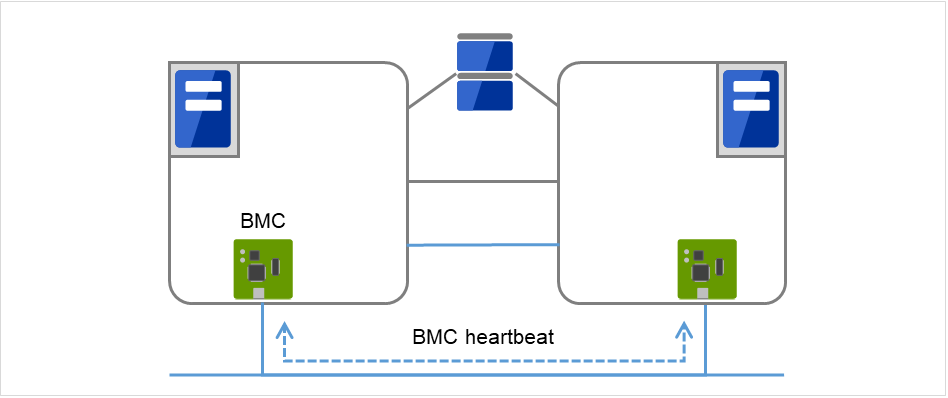

- BMC: Used to check whether the other servers exist by performing heartbeat communication via the BMC between the servers that make up a failover-type cluster.

Fig. 3.4 BMC heartbeat

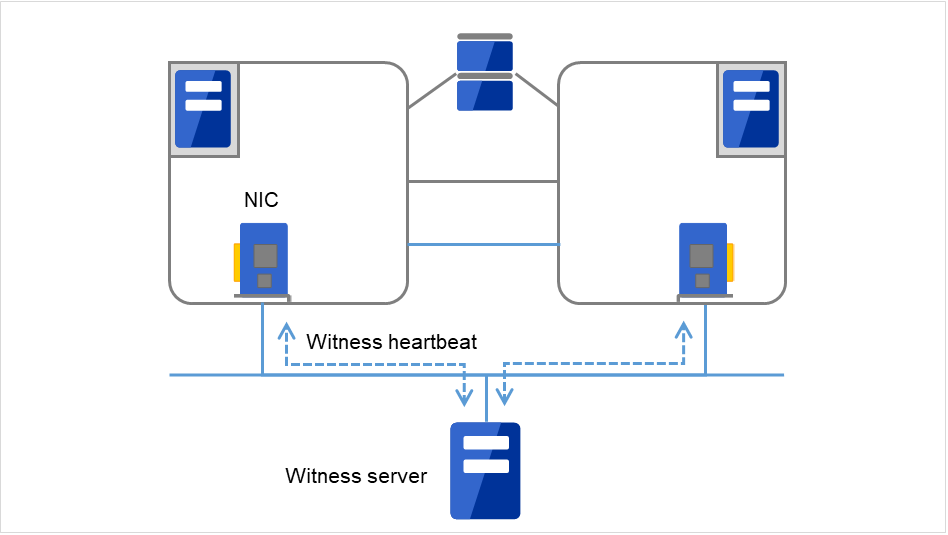

- Witness: Used to check whether the other servers that make up the failover-type cluster exist, through communication with an external Witness server running the Witness server service.

Fig. 3.5 Witness heartbeat
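Because several heartbeat paths can be configured, losing one of them does not by itself mean that the other server is down. The following Python sketch (hypothetical function and path names, illustrative timestamps in seconds) treats a peer as down only when every path has been silent for longer than the timeout. When all paths are silent, this rule alone cannot distinguish a server failure from a network partition, which is why network partition resolution is needed before a failover.

```python
from typing import Dict


def peer_is_down(last_seen: Dict[str, float], now: float,
                 timeout: float = 30.0) -> bool:
    """True only if the heartbeat on *every* path is older than `timeout`.

    An empty dictionary (no paths configured) is treated as down.
    """
    return all(now - t > timeout for t in last_seen.values())


last_heartbeat = {
    "primary interconnect": 100.0,
    "secondary interconnect": 128.0,
    "BMC": 95.0,
}
print(peer_is_down(last_heartbeat, now=140.0))  # False: secondary heard 12 s ago
print(peer_is_down(last_heartbeat, now=170.0))  # True: all paths silent > 30 s
```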

3.3.3. What is application monitoring?

Application monitoring is a function that monitors applications and the factors that can prevent an application from running.

- Monitoring applications and/or protocols for stalls or failures by using the monitoring options: In addition to the basic monitoring of successful startup and existence of applications, you can monitor stalls and failures in specific databases (such as Oracle, DB2), protocols (such as FTP, HTTP), and application servers (such as WebSphere, WebLogic) by introducing the optional monitoring products of EXPRESSCLUSTER. For details, see "Monitor resource details" in the "Reference Guide".

- Monitoring the activation status of applications: An error can be detected by starting an application with an application-starting resource of EXPRESSCLUSTER (called an application resource or service resource) and regularly checking whether the process is active with an application-monitoring resource (called an application monitor resource or service monitor resource). This is effective when an application stops because it terminates with an error.

Note

An error in a resident process cannot be detected in an application started up by EXPRESSCLUSTER.

Note

An internal application error (for example, application stalling and result errors) cannot be detected.

- Resource monitoring: An error can be detected by monitoring the cluster resources (such as disk partitions and IP addresses) and the public LAN using EXPRESSCLUSTER monitor resources. This is effective when an application stops because of an error in a resource that the application needs in order to operate.
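The idea behind an application resource paired with an application monitor resource can be sketched with Python's `subprocess` module: start the process, then periodically ask whether it is still running. The `ApplicationResource` class is hypothetical and much simpler than the real resources. Like them, it only detects that the started process has terminated, so it cannot see stalls or wrong results, as the notes above point out.

```python
import subprocess
import sys
from typing import List, Optional


class ApplicationResource:
    """Hypothetical start-and-monitor wrapper around one process."""

    def __init__(self, command: List[str]) -> None:
        self.command = command
        self.proc: Optional[subprocess.Popen] = None

    def start(self) -> None:
        self.proc = subprocess.Popen(self.command)

    def is_alive(self) -> bool:
        # poll() returns None while the process runs, else its exit code.
        return self.proc is not None and self.proc.poll() is None


# Stand-in "application": a Python process that just sleeps.
app = ApplicationResource([sys.executable, "-c", "import time; time.sleep(30)"])
app.start()
print(app.is_alive())  # True while the process is running
```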

3.3.4. What is internal monitoring?

Internal monitoring refers to mutual monitoring between modules within EXPRESSCLUSTER. It checks whether each monitoring function of EXPRESSCLUSTER is working properly. The following monitoring is performed within EXPRESSCLUSTER:

Monitoring activation status of an EXPRESSCLUSTER process

3.3.5. Monitorable and non-monitorable errors

There are monitorable and non-monitorable errors in EXPRESSCLUSTER. It is important to know what kind of errors can or cannot be monitored when building and operating a cluster system.

3.3.6. Detectable and non-detectable errors by server monitoring

Monitoring condition: Heartbeats from the server with the error stop.

Example of errors that can be monitored:

Hardware failure (from which the OS cannot continue operating)

Stop error

Example of error that cannot be monitored:

Partial failure on OS (for example, only a mouse or keyboard does not function)

3.3.7. Detectable and non-detectable errors by application monitoring

Monitoring conditions: Termination of application with errors, continuous resource errors, disconnection of a path to the network devices.

Example of errors that can be monitored:

Abnormal termination of an application

Failure to access the shared disk (such as HBA failure)

Public LAN NIC problem

Example of errors that cannot be monitored:

- Application stalling and error results: EXPRESSCLUSTER cannot monitor application stalling or error results [1]. However, a failover can still be performed by creating a program that monitors the application and terminates itself when it detects an error, starting that program with the application resource, and monitoring it with the application monitor resource.

[1] Stalling and error results can be monitored for the database applications (such as Oracle, DB2), protocols (such as FTP, HTTP), and application servers (such as WebSphere and WebLogic) that are supported by a monitoring option.
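The self-terminating monitor program described above could be structured like the following Python sketch. The health check is passed in as a function because what counts as a stall or a wrong result is application-specific (for example, a test query with a time limit). `watchdog` returns an exit code, and the program would end with `sys.exit(watchdog(check))` so that the application monitor resource sees the process terminate. All names and default values here are hypothetical.

```python
import time
from typing import Callable


def watchdog(check: Callable[[], bool], interval: float = 10.0,
             max_failures: int = 3,
             sleep: Callable[[float], None] = time.sleep) -> int:
    """Run `check` every `interval` seconds and return exit code 1 once
    `max_failures` consecutive checks have failed."""
    failures = 0
    while True:
        failures = 0 if check() else failures + 1
        if failures >= max_failures:
            return 1  # exit code for the monitored program
        sleep(interval)
```

Requiring several consecutive failures rather than one plays the same role as the heartbeat grace period: it avoids a failover caused by a single slow response.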

3.4. Network partition resolution

COM method

PING method

HTTP method

Shared disk method

COM + shared disk method

PING + shared disk method

Majority method

Not solving the network partition

See also

For the details on the network partition resolution method, see "Details on network partition resolution resources" in the "Reference Guide".
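Two of the methods listed above can be illustrated with simplified decision rules. In this Python sketch, `majority_survives` keeps a server running only if it can still reach a strict majority of the cluster, and `ping_survives` keeps it running only if an external target (such as a router) answers; the `ping` callable is a hypothetical placeholder rather than a real ICMP implementation. The exact behavior of each EXPRESSCLUSTER method, including what the losing server does, is described in the "Reference Guide".

```python
from typing import Callable, Set


def majority_survives(reachable_peers: Set[str], cluster_size: int) -> bool:
    """Majority method (simplified): this server plus the peers it can
    still reach must be more than half of all servers."""
    return (len(reachable_peers) + 1) * 2 > cluster_size


def ping_survives(ping: Callable[[str], bool], target: str) -> bool:
    """PING method (simplified): survive only if `target` still answers."""
    return ping(target)


# Three-server cluster split into {server1, server2} and {server3}.
print(majority_survives({"server2"}, cluster_size=3))  # True: 2 of 3
print(majority_survives(set(), cluster_size=3))        # False: 1 of 3
```

With an even number of servers split exactly in half, neither side has a majority under this rule, so both sides would stop rather than risk a split brain.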

3.5. Failover mechanism¶

When EXPRESSCLUSTER detects that the heartbeat from a server has been interrupted, it first determines whether the cause is an error in that server or a network partition. It then performs a failover by activating the required resources and starting the applications on a properly working server.

A group of resources that fail over together is called a "failover group." From the user's point of view, a failover group appears as a virtual computer.

Note

In a cluster system, a failover is performed by restarting the application on a properly working node. Therefore, data held only in the application's memory is not carried over by a failover.

A failover takes a few minutes from the occurrence of an error to its completion. See the time chart below:

Fig. 3.6 Failover time chart¶

Heartbeat timeout

The time for a standby server to detect an error after that error occurred on the active server.

Adjust this value in the cluster properties according to the delay caused by application load. (The default value is 30 seconds.)

Network partition resolution

The time to check whether the heartbeat stop (heartbeat timeout) detected for the other server is caused by a network partition or by an error in the other server.

This check completes immediately.

Activating resources

The time to activate the resources necessary for operating an application.

During this phase, file system recovery, transfer of the data on the disks, and transfer of IP addresses are performed.

With ordinary settings, resources can be activated in a few seconds, but the required time varies depending on the type and number of resources registered in the failover group. For more information, see the "Installation and Configuration Guide".

Recovering and restarting applications

The startup time of the application used in operation, including data recovery time such as a database roll-back or roll-forward.

The roll-back or roll-forward time can be predicted by adjusting the checkpoint interval. For more information, refer to the documentation that comes with each software product.
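Because the phases in the time chart run one after another, the failover time is roughly their sum. The Python sketch below adds them up; only the 30-second heartbeat timeout default comes from the text, the network partition check is counted as zero because it completes immediately, and the other values in the example are hypothetical placeholders to replace with measurements from your own system.

```python
from dataclasses import dataclass


@dataclass
class FailoverPhases:
    """Phases of the failover time chart, in seconds, run one after another."""
    heartbeat_timeout_sec: float = 30.0    # default heartbeat timeout
    np_resolution_sec: float = 0.0         # network partition check; completes immediately
    resource_activation_sec: float = 0.0   # depends on resource types and count
    app_recovery_sec: float = 0.0          # app startup incl. DB roll-back/roll-forward

    def total_sec(self) -> float:
        """Approximate time from the error to the end of the failover."""
        return (self.heartbeat_timeout_sec + self.np_resolution_sec
                + self.resource_activation_sec + self.app_recovery_sec)


# Hypothetical measurements: 10 s to activate resources, 60 s to recover the app.
example = FailoverPhases(resource_activation_sec=10, app_recovery_sec=60)
# example.total_sec() returns 100.0
```

In this example the application recovery phase dominates, which is why tuning the checkpoint interval can matter more than shortening the heartbeat timeout.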

3.5.2. Hardware configuration of the mirror disk type cluster configured by EXPRESSCLUSTER¶

The mirror disk type cluster mirrors partitions on the servers' local disks as an alternative to a shared disk device. Compared to the shared disk type cluster, it suits smaller-scale, lower-budget systems.

Note

Using a mirror disk requires purchasing the Replicator option or the Replicator DR option.

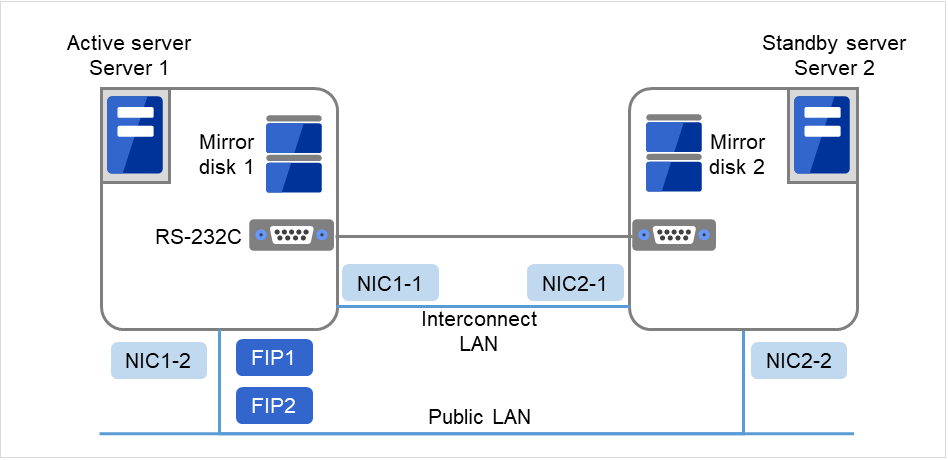

Sample cluster environment with mirror disks used (when the cluster partitions and data partitions are allocated to the OS-installed disks)

In the following configuration, free partitions of the OS-installed disks are used as cluster partitions and data partitions.

Fig. 3.8 Example of cluster configuration (1) (Mirror disk type)¶

| Item | Setting |
|---|---|
| FIP1 | 10.0.0.11 (Access destination from the Cluster WebUI client) |
| FIP2 | 10.0.0.12 (Access destination from the operation client) |
| NIC1-1 | 192.168.0.1 |
| NIC1-2 | 10.0.0.1 |
| NIC2-1 | 192.168.0.2 |
| NIC2-2 | 10.0.0.2 |
| RS-232C port | COM1 |
| Drive letter of the cluster partition | E |
| File system | RAW |
| Drive letter of the data partition | F |
| File system | NTFS |

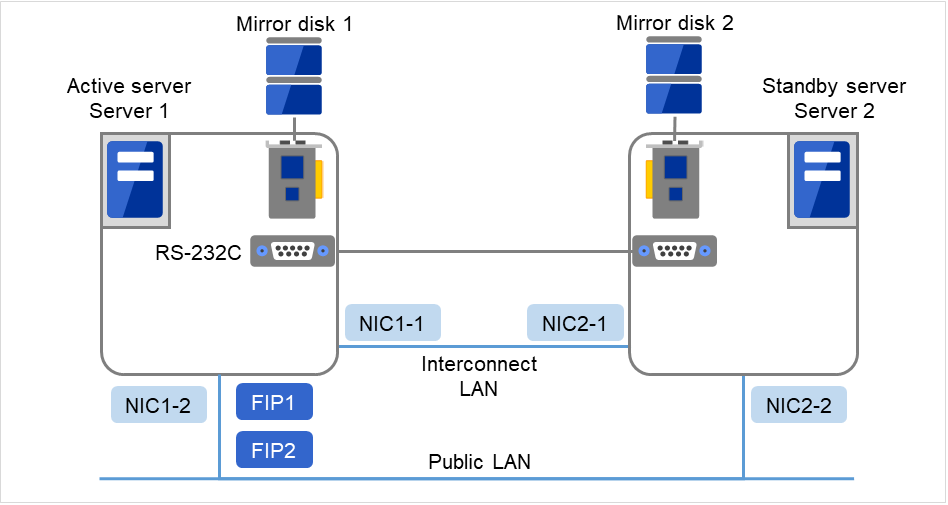

Sample cluster environment with mirror disks used (when disks are prepared for cluster partitions and data partitions)

In the following configuration, disks are prepared for cluster partitions and data partitions and connected to the servers.

Fig. 3.9 Example of cluster configuration (2) (Mirror disk type)¶

| Item | Setting |
|---|---|
| FIP1 | 10.0.0.11 (Access destination from the Cluster WebUI client) |
| FIP2 | 10.0.0.12 (Access destination from the operation client) |
| NIC1-1 | 192.168.0.1 |
| NIC1-2 | 10.0.0.1 |
| NIC2-1 | 192.168.0.2 |
| NIC2-2 | 10.0.0.2 |
| RS-232C port | COM1 |
| Drive letter of the cluster partition | E |
| File system | RAW |
| Drive letter of the data partition | F |
| File system | NTFS |

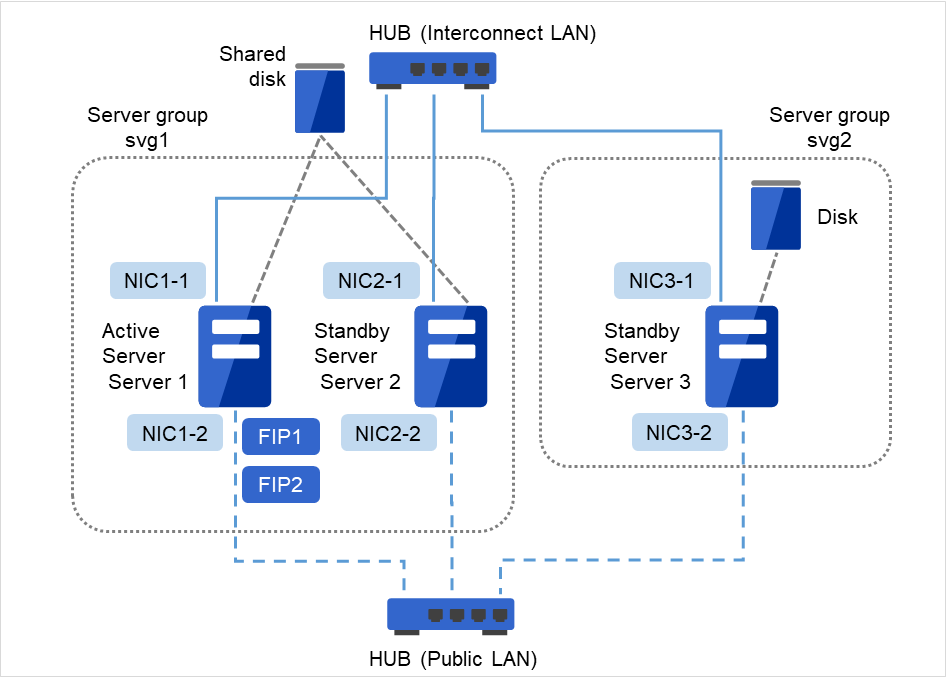

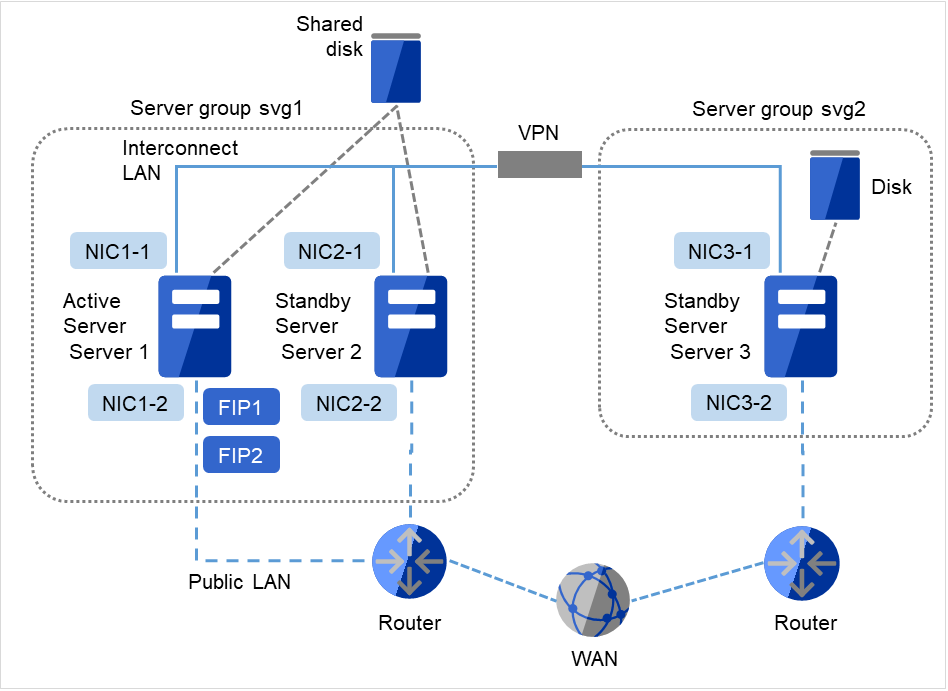

3.5.3. Hardware configuration of the hybrid disk type cluster configured by EXPRESSCLUSTER¶

This configuration combines the shared disk type and the mirror disk type: by mirroring partitions on the shared disk, it allows operation to continue even if a failure occurs on the shared disk device. Mirroring between remote sites can also serve as a disaster countermeasure.

Note

Using the hybrid disk type configuration requires purchasing the Replicator DR option.

Sample cluster environment with hybrid disks used (a shared disk is used by two servers and the data is mirrored to the normal disk of the third server)

Fig. 3.10 Example of cluster configuration (Hybrid disk type)¶

| Item | Setting |
|---|---|
| FIP1 | 10.0.0.11 (Access destination from the Cluster WebUI client) |
| FIP2 | 10.0.0.12 (Access destination from the operation client) |
| NIC1-1 | 192.168.0.1 |
| NIC1-2 | 10.0.0.1 |
| NIC2-1 | 192.168.0.2 |
| NIC2-2 | 10.0.0.2 |
| NIC3-1 | 192.168.0.3 |
| NIC3-2 | 10.0.0.3 |
| Shared disk | |
| Drive letter of the partition for heartbeat | E |
| File system | RAW |
| Drive letter of the cluster partition | F |
| File system | RAW |
| Drive letter of the data partition | G |
| File system | NTFS |

The figure above shows a sample cluster environment in which a shared disk is mirrored within the same network. A hybrid disk type configuration mirrors data between server groups, where the servers in each group are connected to the same shared disk device; in this sample, however, the shared disk is mirrored to the local disk of server3. Because of this, the standby server group svg2 has only one member server, server3.

Fig. 3.11 Example of cluster configuration (Hybrid disk type, remote cluster)¶

| Item | Setting |
|---|---|
| FIP1 | 10.0.0.11 (Access destination from the Cluster WebUI client) |
| FIP2 | 10.0.0.12 (Access destination from the operation client) |
| NIC1-1 | 192.168.0.1 |
| NIC1-2 | 10.0.0.1 |
| NIC2-1 | 192.168.0.2 |
| NIC2-2 | 10.0.0.2 |
| NIC3-1 | 192.168.0.3 |
| NIC3-2 | 10.0.0.3 |
| Shared disk | |
| Drive letter of the partition for heartbeat | E |
| File system | RAW |
| Drive letter of the cluster partition | F |
| File system | RAW |
| Drive letter of the data partition | G |
| File system | NTFS |

The figure above shows a sample cluster environment in which mirroring is performed between remote sites. This sample uses virtual IP addresses instead of floating IP addresses because the server groups are on different Public-LAN network segments. When a virtual IP address is used, all routers in between must be configured to pass on the host route. The mirror connect communication transfers the data written to the disk as is. It is therefore recommended to use a VPN over a dedicated line, or to enable the compression and encryption functions.
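The choice between floating and virtual IP addresses described above depends on whether the server groups share a Public-LAN segment. The Python sketch below makes that comparison with the standard ipaddress module; the addresses and the /24 prefix in the checks are hypothetical, and the function only compares network segments. It does not verify the router or host-route configuration that a virtual IP address also requires.

```python
import ipaddress


def needs_virtual_ip(public_lan_a: str, public_lan_b: str) -> bool:
    """True when two server groups sit on different Public-LAN segments.

    Arguments are interface addresses in CIDR form, e.g. "10.0.0.1/24".
    Same segment: a floating IP address can be used.
    Different segments: a virtual IP address is needed instead.
    """
    net_a = ipaddress.ip_interface(public_lan_a).network
    net_b = ipaddress.ip_interface(public_lan_b).network
    return net_a != net_b
```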

3.5.4. What is a cluster object?¶

In EXPRESSCLUSTER, the various resources are managed by means of the following objects:

- Cluster object: The configuration unit of a cluster.

- Server object: Represents a physical server and belongs to the cluster object.

- Server group object: Represents a group that bundles servers and belongs to the cluster object. This object is required when a hybrid disk resource is used.

- Heartbeat resource object: Represents the network part of a physical server and belongs to the server object.

- Network partition resolution resource object: Represents the network partition resolution mechanism and belongs to the server object.

- Group object: Represents a virtual server and belongs to the cluster object.

- Group resource object: Represents the resources (network, disk) of a virtual server and belongs to the group object.

- Monitor resource object: Represents a monitoring mechanism and belongs to the cluster object.
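The ownership relationships listed above form a tree. The Python dataclasses below are an illustrative sketch of that hierarchy only; they are not EXPRESSCLUSTER's configuration file format, and every object name in the example (cluster1, server1, lankhb1, pingnp1, failover1, and so on) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GroupResource:
    name: str
    kind: str  # resource type abbreviation, e.g. "fip", "md", "appli"


@dataclass
class Group:
    """A failover group; appears to clients as a virtual server."""
    name: str
    resources: List[GroupResource] = field(default_factory=list)


@dataclass
class Server:
    """A physical server; owns its heartbeat and NP resolution resources."""
    name: str
    heartbeat_resources: List[str] = field(default_factory=list)
    np_resolution_resources: List[str] = field(default_factory=list)


@dataclass
class ServerGroup:
    """Bundles servers; needed only when a hybrid disk resource is used."""
    name: str
    member_servers: List[str] = field(default_factory=list)


@dataclass
class Cluster:
    """Top-level object: owns servers, server groups, groups, and monitors."""
    name: str
    servers: List[Server] = field(default_factory=list)
    server_groups: List[ServerGroup] = field(default_factory=list)
    groups: List[Group] = field(default_factory=list)
    monitor_resources: List[str] = field(default_factory=list)


# Hypothetical two-server mirror disk cluster with one failover group.
cluster = Cluster(
    name="cluster1",
    servers=[
        Server("server1", heartbeat_resources=["lankhb1"], np_resolution_resources=["pingnp1"]),
        Server("server2", heartbeat_resources=["lankhb1"], np_resolution_resources=["pingnp1"]),
    ],
    groups=[Group("failover1", [GroupResource("fip1", "fip"), GroupResource("md1", "md")])],
    monitor_resources=["fipw1", "mdw1"],
)
```

Note that monitor resources hang off the cluster rather than a group, matching the list above, while group resources belong to their group.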

3.6. What is a resource?¶

In EXPRESSCLUSTER, the units that perform monitoring and the units that are monitored are called "resources." Resources that perform monitoring and resources that are monitored are classified and managed separately, which makes it clearer what is monitoring and what is being monitored, and makes it easier to build a cluster and to handle errors. There are four types of resources: heartbeat resources, network partition resolution resources, group resources, and monitor resources.

See also

For the details of each resource, see the "Reference Guide".

3.6.1. Heartbeat resources¶

Heartbeat resources are used by the servers to verify whether the other servers are working properly. The following heartbeat resources are currently supported:

- LAN heartbeat resource: Uses Ethernet for communication.

- Witness heartbeat resource: Uses an external server running the Witness server service and shows the status of communication with each server as obtained from that external server.

- BMC heartbeat resource: Uses Ethernet for communication via the BMC. This is available only when the BMC hardware and firmware support it.
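The heartbeat timeout used with these resources (30 seconds by default, per the failover time chart) can be illustrated with a simple tracker. The Python sketch below records the last heartbeat time per server and reports the servers whose heartbeat is older than the timeout. It is a generic illustration, not EXPRESSCLUSTER's implementation; timestamps are passed in as arguments so the logic can be tested without a real clock, and the server names are hypothetical.

```python
from typing import Dict, List


class HeartbeatTracker:
    """Tracks when each server's heartbeat was last received."""

    def __init__(self, timeout_sec: float = 30.0) -> None:
        self.timeout_sec = timeout_sec          # heartbeat timeout (default 30 s)
        self.last_seen: Dict[str, float] = {}   # server name -> last receive time

    def receive(self, server: str, now: float) -> None:
        """Record a heartbeat from `server` at time `now` (seconds)."""
        self.last_seen[server] = now

    def timed_out(self, now: float) -> List[str]:
        """Return, sorted, the servers whose heartbeat is older than the timeout."""
        return sorted(name for name, seen in self.last_seen.items()
                      if now - seen > self.timeout_sec)
```

In a running process, `now` would typically come from time.monotonic(), which is not affected by system clock changes.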

3.6.2. Network partition resolution resources¶

The following resources are used to resolve a network partition:

- COM network partition resolution resource: A network partition resolution resource that uses the COM method.

- DISK network partition resolution resource: A network partition resolution resource that uses the DISK method. It can be used only in a shared disk configuration.

- PING network partition resolution resource: A network partition resolution resource that uses the PING method.

- HTTP network partition resolution resource: Uses an external server running the Witness server service and shows the status of communication with each server as obtained from that external server.

- Majority network partition resolution resource: A network partition resolution resource that uses the majority method.
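To illustrate the idea behind the PING method, the following Python sketch shows the generic decision a server can make when the peer's heartbeat stops: if it can still reach a ping target, it assumes the peer is down or cut off; if it cannot, it assumes it is the isolated one. The action names are hypothetical and the sketch does not send pings itself. Refer to "Details on network partition resolution resources" in the "Reference Guide" for how EXPRESSCLUSTER actually behaves.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue operation or take over"   # hypothetical action name
    SHUT_DOWN = "stop this server"                 # hypothetical action name


def ping_resolution(peer_heartbeat_alive: bool, ping_target_reachable: bool) -> Action:
    """Decide what this server does after checking heartbeat and a ping target."""
    if peer_heartbeat_alive:
        return Action.CONTINUE      # no heartbeat loss, so no partition to resolve
    if ping_target_reachable:
        return Action.CONTINUE      # this side still has the network; peer is down or cut off
    return Action.SHUT_DOWN         # this side lost the network; assume it is isolated
```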

3.6.3. Group resources¶

A group resource constitutes a unit when a failover occurs. The following group resources are currently supported:

- Application resource (appli)Provides a mechanism for starting and stopping an application (including user creation application.)

- Floating IP resource (fip)Provides a virtual IP address. A client can access a virtual IP address the same way as accessing a regular IP address.

- Mirror disk resource (md)Provides a function to perform mirroring a specific partition on the local disk and control access to it. It can be used only on a mirror disk configuration.

- Registry synchronization resource (regsync)Provides a mechanism to synchronize specific registries of more than two servers, to set the applications and services in the same way among the servers that constitute a cluster.

- Script resource (script)Provides a mechanism for starting and stopping a script (BAT) such as a user creation script.

- Disk resource (sd)Provides a function to control access to a specific partition on the shared disk. This can be used only when the shared disk device is connected.

- Service resource (service)Provides a mechanism for starting and stopping a service such as database and Web.

- Print spooler resource (spool)Provides a mechanism for failing over print spoolers.

- Virtual computer name resource (vcom)Provides a virtual computer name. This can be accessed from a client in the same way as a general computer name.

- Dynamic DNS resource (ddns)Registers a virtual host name and the IP address of the active server to the dynamic DNS server.

- Virtual IP resource (vip)Provides a virtual IP address. This can be accessed from a client in the same way as a general IP address. This can be used in the remote cluster configuration among different network addresses.

- CIFS resource (cifs)Provides a function to disclose and share folders on the shared disk and mirror disks.

- NAS resource (nas)Provides a function to mount the shared folders on the file servers as network drives.

- Hybrid disk resource (hd)A resource in which the disk resource and the mirror disk resource are combined. Provides a function to perform mirroring on a certain partition on the shared disk or the local disk and to control access.

- VM resource (vm)Starts, stops, or migrates the virtual machine.

- AWS elastic ip resource (awseip)Provides a system for giving an elastic IP (referred to as EIP) when EXPRESSCLUSTER is used on AWS.

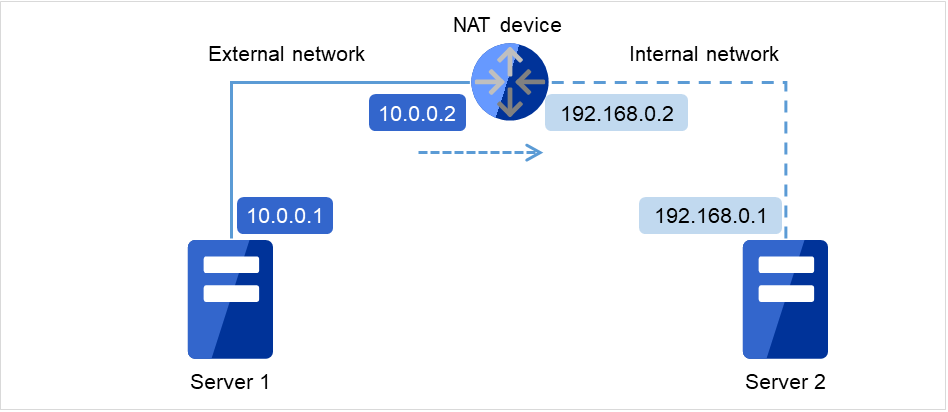

- AWS virtual ip resource (awsvip)Provides a system for giving a virtual IP (referred to as VIP) when EXPRESSCLUSTER is used on AWS.

- AWS DNS resource (awsdns)Registers the virtual host name and the IP address of the active server to Amazon Route 53 when EXPRESSCLUSTER is used on AWS.

- Azure probe port resource (azurepp)Provides a system for opening a specific port on a node on which the operation is performed when EXPRESSCLUSTER is used on Microsoft Azure.

- Azure DNS resource (azuredns)Registers the virtual host name and the IP address of the active server to Azure DNS when EXPRESSCLUSTER is used on Microsoft Azure.

- Google Cloud virtual IP resource (gcvip)Provides a system for opening a specific port on a node on which the operation is performed when EXPRESSCLUSTER is used on Google Cloud Platform.

- Google Cloud DNS resource (gcdns)Registers the virtual host name and the IP address of the active server to Cloud DNS when EXPRESSCLUSTER is used on Google Cloud Platform.

- Oracle Cloud virtual IP resource (ocvip)Provides a system for opening a specific port on a node on which the operation is performed when EXPRESSCLUSTER is used on Oracle Cloud Infrastructure.


3.6.4. Monitor resources¶

A monitor resource monitors a cluster system. The following monitor resources are currently supported:

- Application monitor resource (appliw): Provides a monitoring mechanism to check whether a process started by an application resource is active.

- Disk RW monitor resource (diskw): Provides a monitoring mechanism for the file system, and a function to perform a failover by a hardware reset or an intentional stop error when file system I/O stalls. This can be used for monitoring the file system of the shared disk.

- Floating IP monitor resource (fipw): Provides a monitoring mechanism for the IP address started by a floating IP resource.

- IP monitor resource (ipw): Provides a mechanism for monitoring network communication.

- Mirror disk monitor resource (mdw): Provides a monitoring mechanism for the mirror disks.

- Mirror connect monitor resource (mdnw): Provides a monitoring mechanism for the mirror connect.

- NIC Link Up/Down monitor resource (miiw): Provides a monitoring mechanism for the link status of the LAN cable.

- Multi target monitor resource (mtw): Provides a combined status of multiple monitor resources.

- Registry synchronization monitor resource (regsyncw): Provides a monitoring mechanism for the synchronization process of a registry synchronization resource.

- Disk TUR monitor resource (sdw): Provides a mechanism to monitor the operation of the access path to the shared disk by using the SCSI TestUnitReady command. This can be used for Fibre Channel shared disks.

- Service monitor resource (servicew): Provides a monitoring mechanism to check whether a process started by a service resource is active.

- Print spooler monitor resource (spoolw): Provides a monitoring mechanism for the print spooler started by a print spooler resource.

- Virtual computer name monitor resource (vcomw): Provides a monitoring mechanism for the virtual computer name started by a virtual computer name resource.

- Dynamic DNS monitor resource (ddnsw): Periodically registers a virtual host name and the IP address of the active server to the dynamic DNS server.

- Virtual IP monitor resource (vipw): Provides a monitoring mechanism for the IP address started by a virtual IP resource.

- CIFS monitor resource (cifsw): Provides a monitoring mechanism for the shared folder published by a CIFS resource.

- NAS monitor resource (nasw): Provides a monitoring mechanism for the network drive mounted by a NAS resource.

- Hybrid disk monitor resource (hdw): Provides a monitoring mechanism for the hybrid disk.

- Hybrid disk TUR monitor resource (hdtw): Provides a monitoring mechanism for the behavior of the access path to the shared disk device used as a hybrid disk, by using the TestUnitReady command. It can be used for Fibre Channel shared disks.

- Custom monitor resource (genw): Provides a mechanism to monitor the system based on the results of commands or scripts that perform monitoring, if any.

- Process name monitor resource (psw): Provides a monitoring mechanism for checking whether a process specified by a process name is active.

- DB2 monitor resource (db2w): Provides a monitoring mechanism for the IBM DB2 database.

- ODBC monitor resource (odbcw): Provides a monitoring mechanism for databases that can be accessed by ODBC.

- Oracle monitor resource (oraclew): Provides a monitoring mechanism for the Oracle database.

- PostgreSQL monitor resource (psqlw): Provides a monitoring mechanism for the PostgreSQL database.

- SQL Server monitor resource (sqlserverw): Provides a monitoring mechanism for the SQL Server database.

- FTP monitor resource (ftpw): Provides a monitoring mechanism for the FTP server.

- HTTP monitor resource (httpw): Provides a monitoring mechanism for the HTTP server.

- IMAP4 monitor resource (imap4w): Provides a monitoring mechanism for the IMAP server.

- POP3 monitor resource (pop3w): Provides a monitoring mechanism for the POP server.

- SMTP monitor resource (smtpw): Provides a monitoring mechanism for the SMTP server.

- Tuxedo monitor resource (tuxw): Provides a monitoring mechanism for the Tuxedo application server.

- WebLogic monitor resource (wlsw): Provides a monitoring mechanism for the WebLogic application server.

- WebSphere monitor resource (wasw): Provides a monitoring mechanism for the WebSphere application server.

- WebOTX monitor resource (otxw): Provides a monitoring mechanism for the WebOTX application server.

- VM monitor resource (vmw): Provides a monitoring mechanism for a virtual machine started by a VM resource.

- Message receive monitor resource (mrw): Specifies the action to take when an error message is received and how the message is displayed on the Cluster WebUI.

- JVM monitor resource (jraw): Provides a monitoring mechanism for Java VM.

- System monitor resource (sraw): Provides a monitoring mechanism for the resources of the whole system.

- Process resource monitor resource (psrw): Provides a monitoring mechanism for running processes on the server.

- User mode monitor resource (userw): Provides a stall monitoring mechanism for the user space, and a function to perform a failover by an intentional STOP error or an HW reset when the user space stalls.

- AWS Elastic IP monitor resource (awseipw): Provides a monitoring mechanism for the Elastic IP assigned by the AWS Elastic IP (referred to as EIP) resource.

- AWS virtual IP monitor resource (awsvipw): Provides a monitoring mechanism for the virtual IP assigned by the AWS virtual IP (referred to as VIP) resource.

- AWS AZ monitor resource (awsazw): Provides a monitoring mechanism for an Availability Zone (referred to as AZ).

- AWS DNS monitor resource (awsdnsw): Provides a monitoring mechanism for the virtual host name and IP address provided by the AWS DNS resource.

- Azure probe port monitor resource (azureppw): Provides a mechanism for monitoring the alive-monitoring port on the node where an Azure probe port resource has been activated.

- Azure load balance monitor resource (azurelbw): Provides a mechanism for monitoring whether the same port number as the probe port is open on the node where an Azure probe port resource has not been activated.

- Azure DNS monitor resource (azurednsw): Provides a monitoring mechanism for the virtual host name and IP address provided by the Azure DNS resource.

- Google Cloud virtual IP monitor resource (gcvipw): Provides a mechanism for monitoring the alive-monitoring port on the node where a Google Cloud virtual IP resource has been activated.

- Google Cloud load balance monitor resource (gclbw): Provides a mechanism for monitoring whether the same port number as the health-check port is already in use on the node where a Google Cloud virtual IP resource has not been activated.

- Google Cloud DNS monitor resource (gcdnsw): Provides a monitoring mechanism for the virtual host name and IP address provided by the Google Cloud DNS resource.

- Oracle Cloud virtual IP monitor resource (ocvipw): Provides a mechanism for monitoring the alive-monitoring port on the node where an Oracle Cloud virtual IP resource has been activated.

- Oracle Cloud load balance monitor resource (oclbw): Provides a mechanism for monitoring whether the same port number as the health-check port is already in use on the node where an Oracle Cloud virtual IP resource has not been activated.
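The custom monitor resource judges the system by the result of a monitoring command or script. The Python sketch below shows one common convention, assuming that exit code 0 means normal and that any other exit code or a timeout means an error. It is a generic illustration, not genw itself; the actual judgment settings of the custom monitor resource are described in the "Reference Guide".

```python
import subprocess
from typing import Sequence


def run_custom_monitor(command: Sequence[str], timeout_sec: float = 60.0) -> bool:
    """Run a monitoring command; True (normal) only if it exits with code 0.

    A non-zero exit code or a timeout is treated as an error. A command that
    cannot be found raises FileNotFoundError instead of returning.
    """
    try:
        result = subprocess.run(command, timeout=timeout_sec,
                                stdout=subprocess.DEVNULL,
                                stderr=subprocess.DEVNULL)
    except subprocess.TimeoutExpired:
        return False  # subprocess.run kills the child before raising
    return result.returncode == 0
```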


3.7. Getting started with EXPRESSCLUSTER¶

Refer to the following guides when building a cluster system with EXPRESSCLUSTER:

3.7.1. Latest information¶

Refer to "4. Installation requirements for EXPRESSCLUSTER", "5. Latest version information" and "6. Notes and Restrictions" in this guide.

3.7.2. Designing a cluster system¶

Refer to "Determining a system configuration" and "Configuring a cluster system" in the "Installation and Configuration Guide"; "Group resource details", "Monitor resource details", "Heartbeat resources", "Details on network partition resolution resources", and "Information on other settings" in the "Reference Guide"; and the "Hardware Feature Guide".

3.7.3. Configuring a cluster system¶

Refer to the "Installation and Configuration Guide".

3.7.4. Troubleshooting the problem¶

Refer to "The system maintenance information" in the "Maintenance Guide", and "Troubleshooting" and "Error messages" in the "Reference Guide".

4. Installation requirements for EXPRESSCLUSTER¶

This chapter provides information on system requirements for EXPRESSCLUSTER.

This chapter covers:

4.1. System requirements for hardware¶

EXPRESSCLUSTER operates on the following server architectures:

x86_64

4.1.1. General server requirements¶

Required specifications for the EXPRESSCLUSTER Server are the following:

- RS-232C port: 1 port (not necessary when configuring a cluster with 3 or more nodes)

- Ethernet port: 2 or more ports

- Mirror disk or empty partition for mirroring (required when the Replicator is used)

- CD-ROM drive

4.1.2. Servers supporting Express5800/A1080a and Express5800/A1040a series linkage¶

The table below lists the supported servers that can use the Express5800/A1080a and Express5800/A1040a series linkage function of the BMC heartbeat resources and message receive monitor resources. This function cannot be used by servers other than the following.

| Server | Remarks |
|---|---|
| Express5800/A1080a-E | Update to the latest firmware. |
| Express5800/A1080a-D | Update to the latest firmware. |
| Express5800/A1080a-S | Update to the latest firmware. |
| Express5800/A1040a | Update to the latest firmware. |

4.2. System requirements for the EXPRESSCLUSTER Server¶

4.2.1. Supported operating systems¶

EXPRESSCLUSTER Server only runs on the operating systems listed below.

x86_64 version

| OS | Remarks |
|---|---|
| Windows Server 2012 Standard | |
| Windows Server 2012 Datacenter | |
| Windows Server 2012 R2 Standard | |
| Windows Server 2012 R2 Datacenter | |
| Windows Server 2016 Standard | |
| Windows Server 2016 Datacenter | |
| Windows Server, version 1709 Standard | |
| Windows Server, version 1709 Datacenter | |
| Windows Server, version 1803 Standard | |
| Windows Server, version 1803 Datacenter | |
| Windows Server, version 1809 Standard | |
| Windows Server, version 1809 Datacenter | |
| Windows Server 2019 Standard | |
| Windows Server 2019 Datacenter | |
| Windows Server, version 1903 Standard | |
| Windows Server, version 1903 Datacenter | |
| Windows Server, version 1909 Standard | |
| Windows Server, version 1909 Datacenter | |
| Windows Server, version 2004 Standard | |
| Windows Server, version 2004 Datacenter | |

4.2.2. Required memory and disk size¶

| Item | Requirement |
|---|---|
| Required memory size (user mode) | 256 MB (*2) |
| Required memory size (kernel mode) | 32 MB + 4 MB (*3) × (number of mirror/hybrid resources) |
| Required disk size (right after installation) | 100 MB |
| Required disk size (during operation) | 5.0 GB |

Switching to the asynchronous method, changing the queue size, or changing the difference bitmap size requires additional memory. Memory usage also increases as disk load increases, because memory is consumed according to the amount of mirror disk I/O.

For the required size of a partition for a DISK network partition resolution resource, see "Partition for shared disk".

For the required size of a cluster partition, see "Partition for mirror disk" and "Partition for hybrid disk".
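The kernel-mode memory requirement in the table is a linear formula in the number of mirror and hybrid disk resources. The Python sketch below computes that baseline only; it does not account for the additional memory needed when switching to the asynchronous method, changing the queue size or difference bitmap size, or running under heavy disk load.

```python
def kernel_mode_memory_mb(mirror_hybrid_resources: int) -> int:
    """Baseline kernel-mode memory: 32 MB + 4 MB per mirror/hybrid disk resource."""
    if mirror_hybrid_resources < 0:
        raise ValueError("resource count cannot be negative")
    return 32 + 4 * mirror_hybrid_resources
```

For example, three mirror disk resources give 32 + 4 × 3 = 44 MB of kernel-mode memory, or 300 MB together with the 256 MB user-mode requirement.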

4.2.3. Application supported by the monitoring options¶

The following applications are supported as monitoring targets of the monitoring options.

x86_64 version

| Monitor resource | Application to be monitored | EXPRESSCLUSTER Version | Remarks |
|---|---|---|---|
| Oracle monitor | Oracle Database 12c Release 1 (12.1) | 12.00 or later | |
| | Oracle Database 12c Release 2 (12.2) | 12.00 or later | |
| | Oracle Database 18c (18.3) | 12.10 or later | |
| | Oracle Database 19c (19.3) | 12.10 or later | |
| DB2 monitor | DB2 V10.5 | 12.00 or later | |
| | DB2 V11.1 | 12.00 or later | |
| | DB2 V11.5 | 12.20 or later | |
| PostgreSQL monitor | PostgreSQL 9.3 | 12.00 or later | |
| | PostgreSQL 9.4 | 12.00 or later | |
| | PostgreSQL 9.5 | 12.00 or later | |
| | PostgreSQL 9.6 | 12.00 or later | |
| | PostgreSQL 10 | 12.00 or later | |
| | PostgreSQL 11 | 12.10 or later | |
| | PostgreSQL 12 | 12.22 or later | |
| | PostgreSQL 13 | 12.30 or later | |
| | PowerGres on Windows V9.1 | 12.00 or later | |
| | PowerGres on Windows V9.4 | 12.00 or later | |
| | PowerGres on Windows V9.6 | 12.00 or later | |
| | PowerGres on Windows V11 | 12.10 or later | |
| SQL Server monitor | SQL Server 2014 | 12.00 or later | |
| | SQL Server 2016 | 12.00 or later | |
| | SQL Server 2017 | 12.00 or later | |
| | SQL Server 2019 | 12.20 or later | |
| Tuxedo monitor | Tuxedo 12c Release 2 (12.1.3) | 12.00 or later | |
| WebLogic monitor | WebLogic Server 11g R1 | 12.00 or later | |
| | WebLogic Server 11g R2 | 12.00 or later | |
| | WebLogic Server 12c R2 (12.2.1) | 12.00 or later | |
| | WebLogic Server 14c (14.1.1) | 12.20 or later | |
| WebSphere monitor | WebSphere Application Server 8.5 | 12.00 or later | |
| | WebSphere Application Server 8.5.5 | 12.00 or later | |
| | WebSphere Application Server 9.0 | 12.00 or later | |
| WebOTX monitor | WebOTX Application Server V9.1 | 12.00 or later | |
| | WebOTX Application Server V9.2 | 12.00 or later | |
| | WebOTX Application Server V9.3 | 12.00 or later | |
| | WebOTX Application Server V9.4 | 12.00 or later | |
| | WebOTX Application Server V9.5 | 12.00 or later | |
| | WebOTX Application Server V10.1 | 12.00 or later | |
| | WebOTX Application Server V10.3 | 12.30 or later | |
| JVM monitor | WebLogic Server 11g R1 | 12.00 or later | |
| | WebLogic Server 11g R2 | 12.00 or later | |
| | WebLogic Server 12c R2 (12.2.1) | 12.00 or later | |
| | WebLogic Server 14c (14.1.1) | 12.20 or later | |
| | WebOTX Application Server V9.1 | 12.00 or later | |
| | WebOTX Application Server V9.2 | 12.00 or later | |
| | WebOTX Application Server V9.3 | 12.00 or later | |
| | WebOTX Application Server V9.4 | 12.00 or later | |
| | WebOTX Application Server V9.5 | 12.00 or later | |
| | WebOTX Application Server V10.1 | 12.00 or later | |
| | WebOTX Application Server V10.3 | 12.30 or later | |
| | WebOTX Enterprise Service Bus V8.4 | 12.00 or later | |
| | WebOTX Enterprise Service Bus V8.5 | 12.00 or later | |
| | WebOTX Enterprise Service Bus V10.3 | 12.30 or later | |
| | Apache Tomcat 8.0 | 12.00 or later | |
| | Apache Tomcat 8.5 | 12.00 or later | |
| | Apache Tomcat 9.0 | 12.00 or later | |
| | WebSAM SVF for PDF 9.1 | 12.00 or later | |
| | WebSAM SVF for PDF 9.2 | 12.00 or later | |
| | WebSAM Report Director Enterprise 9.1 | 12.00 or later | |
| | WebSAM Report Director Enterprise 9.2 | 12.00 or later | |
| | WebSAM Universal Connect/X 9.1 | 12.00 or later | |
| | WebSAM Universal Connect/X 9.2 | 12.00 or later | |
| System monitor | N/A | 12.00 or later | |
| Process resource monitor | N/A | 12.10 or later | |

Note

The monitor resources above run as 64-bit applications in the x86_64 environment, so the target applications must be 64-bit binaries.
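One way to confirm that a target application's executable is an x64 binary is to read the Machine field of its PE header, where 0x8664 denotes x64 and 0x14C denotes 32-bit x86 (per Microsoft's PE format specification). The Python sketch below is a minimal check that assumes the headers lie within the first 4 KB of the file; it is not part of EXPRESSCLUSTER. For .NET assemblies built as AnyCPU the field can read 0x14C even though the assembly runs as 64-bit, so treat the result as a first check only.

```python
import struct

IMAGE_FILE_MACHINE_I386 = 0x014C   # 32-bit x86
IMAGE_FILE_MACHINE_AMD64 = 0x8664  # x64


def pe_machine(data: bytes) -> int:
    """Return the COFF Machine field of a PE image given its leading bytes."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not an MZ/PE image")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)  # e_lfanew: offset of PE signature
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found in the bytes provided")
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)  # first COFF header field
    return machine


def is_x64_binary(path: str) -> bool:
    """True if the executable or DLL at `path` is built for x64."""
    with open(path, "rb") as f:
        head = f.read(4096)  # assumption: headers lie within the first 4 KB
    return pe_machine(head) == IMAGE_FILE_MACHINE_AMD64
```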

4.2.4. Operation environment of VM resources¶

The following table shows the version information of the virtual machines on which the operation of the virtual machine resources has been verified.

| Virtual Machine | Version | Remark |
|---|---|---|
| Hyper-V | Windows Server 2012 Hyper-V | |
| | Windows Server 2012 R2 Hyper-V | |

Note

VM resources do not work on Windows Server 2016.

4.2.5. Operation environment for SNMP linkage functions¶

The operation of EXPRESSCLUSTER with the Windows SNMP Service has been verified on the following OSs.

x86_64 version

| OS | EXPRESSCLUSTER version | Remarks |
|---|---|---|
| Windows Server 2012 | 12.00 or later | |
| Windows Server 2012 R2 | 12.00 or later | |
| Windows Server 2016 | 12.00 or later | |
| Windows Server, version 1709 | 12.00 or later | |

4.2.6. Operation environment for JVM monitor¶

The use of the JVM monitor requires a Java runtime environment.

The use of the JVM monitor load balancer linkage function (when using BIG-IP Local Traffic Manager) requires a Microsoft .NET Framework runtime environment.

Microsoft .NET Framework 3.5 Service Pack 1

Installation procedure

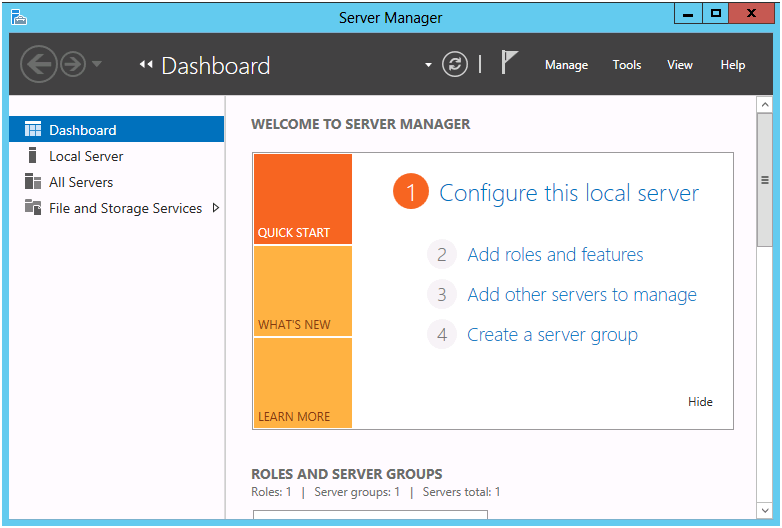

Fig. 4.1 Server Manager¶

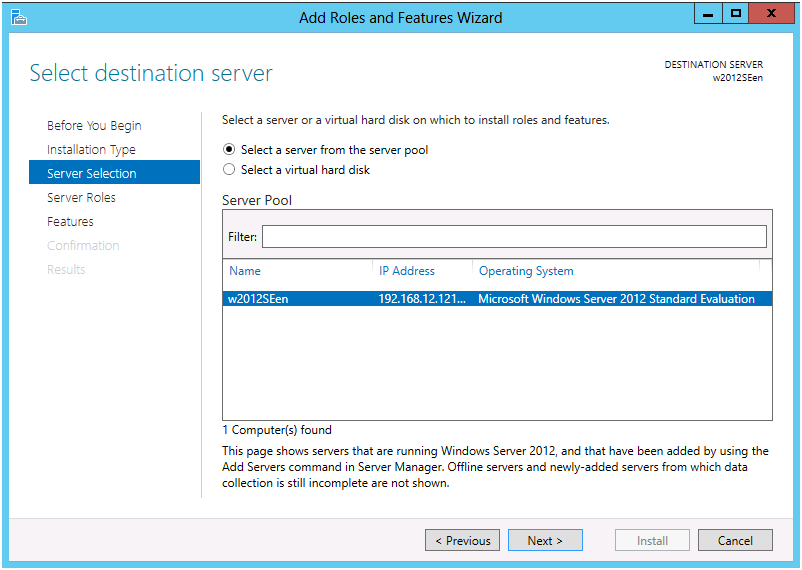

Fig. 4.2 Select Server¶

Click Next in the Server Roles window.

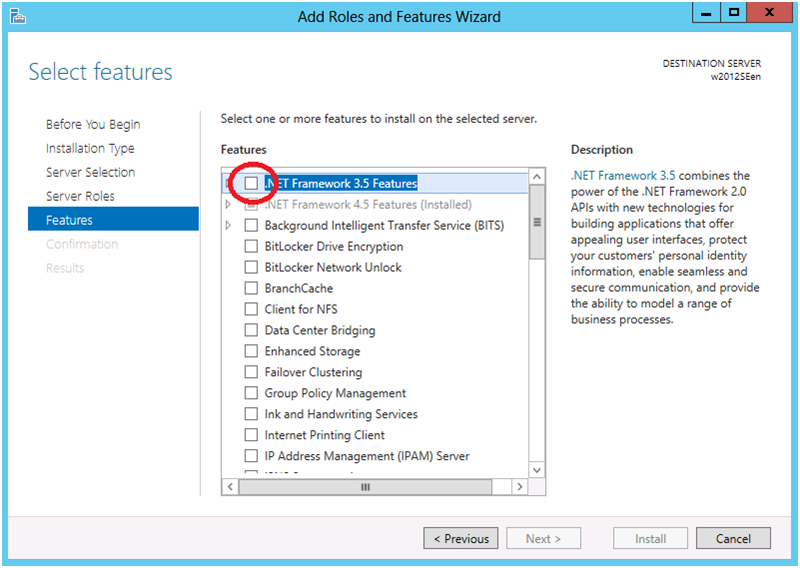

In the Features window, select .NET Framework 3.5 Features and click Next.

Fig. 4.3 Select Features¶

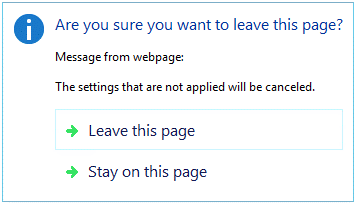

If the server is connected to the Internet, click Install in the Confirm installation selections window to install .NET Framework 3.5.

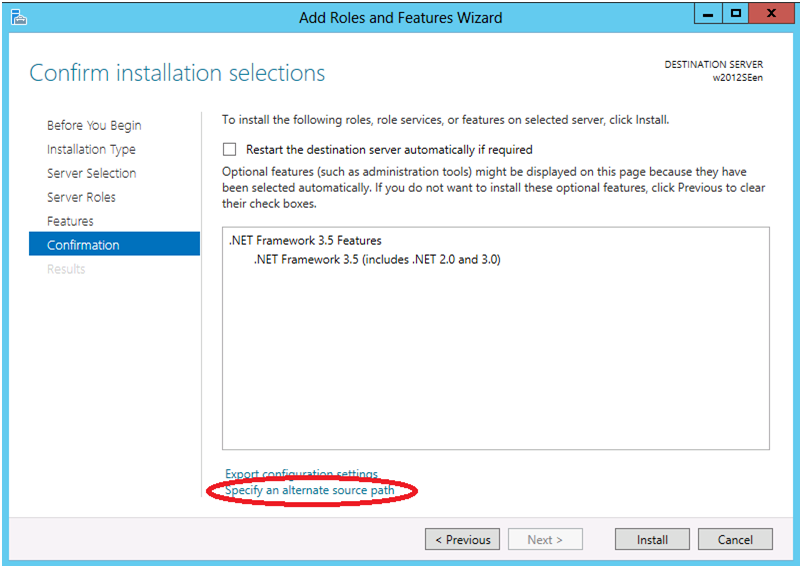

If the server cannot connect to the Internet, select Specify an alternative source path in the Confirm installation selections window.

Fig. 4.4 Confirm Installation Options¶

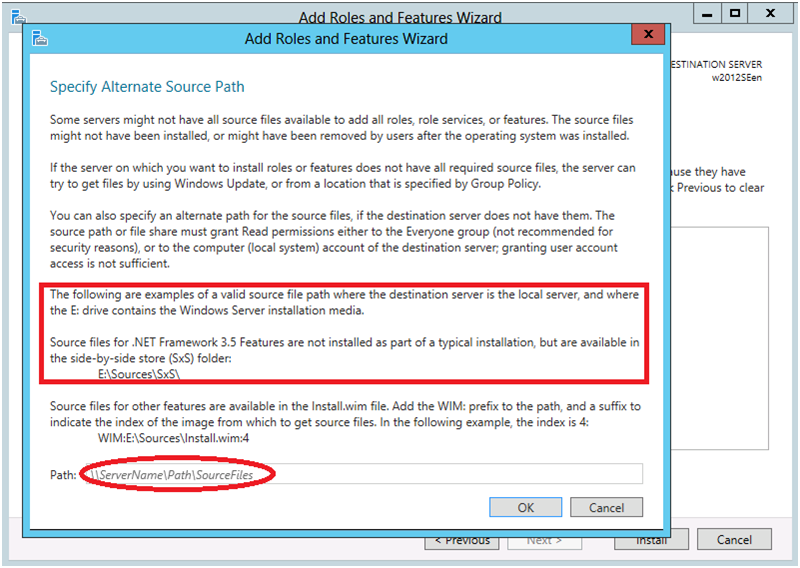

Specify the path to the OS installation medium in the Path field, following the explanation displayed in the window, and then click OK. Then click Install to install .NET Framework 3.5.

Fig. 4.5 Specify Alternative Source Path¶

The table below lists the load balancers that have been verified for linkage with the JVM monitor.

x86_64 version

| Load balancer | EXPRESSCLUSTER version | Remarks |
|---|---|---|
| Express5800/LB400h or later | 12.00 or later | |
| InterSec/LB400i or later | 12.00 or later | |
| BIG-IP v11 | 12.00 or later | |
| CoyotePoint Equalizer | 12.00 or later | |

4.2.7. Operation environment for system monitor or process resource monitor or function of collecting system resource information¶

Note

On Windows Server 2012 or later, .NET Framework 4.5 or later is pre-installed (the pre-installed version varies depending on the OS).

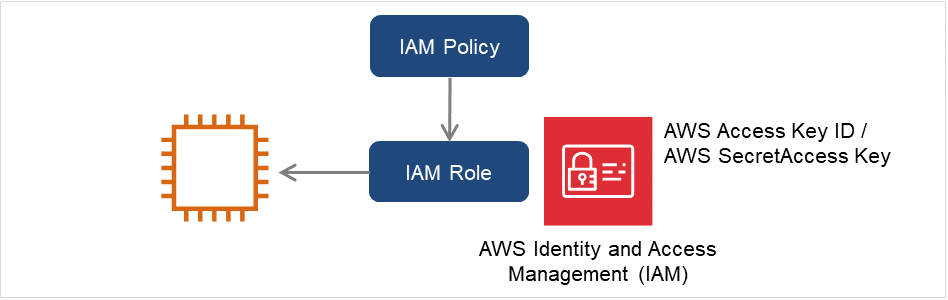

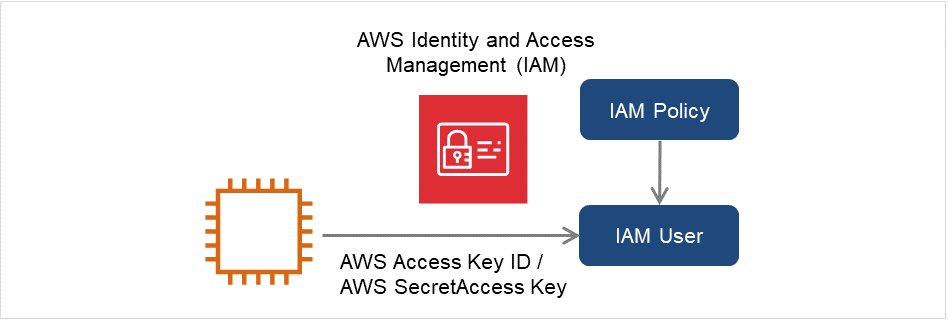

4.2.8. Operation environment for AWS Elastic IP resource, AWS virtual IP resource, AWS Elastic IP monitor resource, AWS Virtual IP monitor resource and AWS AZ monitor resource¶

The use of the AWS Elastic IP resource, AWS virtual IP resource, AWS Elastic IP monitor resource, AWS virtual IP monitor resource, and AWS AZ monitor resource requires the following software.

| Software | Version | Remarks |
|---|---|---|
| AWS CLI | 1.6.0 or later | |
| Python | 2.7.5 or later, 3.6.7 or later, 3.8.2 or later | The Python bundled with the AWS CLI cannot be used. |
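The Python requirement above lists three minimum versions. The sketch below reads each as the floor for its own major.minor series, which is an interpretation rather than something the table states; a version in a series that is not listed (for example, 3.7) is reported as None, meaning "not listed", rather than rejected. The check says nothing about the other condition in the table, that the Python bundled with the AWS CLI cannot be used.

```python
from typing import Optional, Tuple

Version = Tuple[int, int, int]

# Minimum versions from the table, read (as an assumption) as one floor per
# major.minor series.
LISTED_MINIMUMS: Tuple[Version, ...] = ((2, 7, 5), (3, 6, 7), (3, 8, 2))


def meets_listed_minimum(version: Version) -> Optional[bool]:
    """True/False for a listed major.minor series; None if the series is not listed."""
    for floor in LISTED_MINIMUMS:
        if version[:2] == floor[:2]:
            return version >= floor
    return None
```

To check the interpreter that will actually run the AWS CLI scripts, pass `tuple(sys.version_info[:3])` from that interpreter.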

The following table shows the OS versions on AWS on which the operation of the AWS Elastic IP resource, AWS virtual IP resource, AWS Elastic IP monitor resource, AWS virtual IP monitor resource, and AWS AZ monitor resource has been verified.

x86_64

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2012 | 12.00 or later | |
| Windows Server 2012 R2 | 12.00 or later | |
| Windows Server 2016 | 12.00 or later | |
| Windows Server 2019 | 12.10 or later | |

4.2.9. Operation environment for AWS DNS resource and AWS DNS monitor resource¶

The use of the AWS DNS resource and AWS DNS monitor resource requires the following software.

| Software | Version | Remarks |
|---|---|---|
| AWS CLI | 1.11.0 or later | |
| Python | 2.7.5 or later, 3.6.7 or later, 3.8.2 or later | The Python bundled with the AWS CLI cannot be used. |
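The Python row lists three minimum versions. This guide does not state how they combine, so the sketch below assumes that each entry is the minimum patch level within its own release series (2.7, 3.6, 3.8) and treats any other series as unlisted. It is a hypothetical helper (`SUPPORTED_SERIES`, `is_listed_python`), meant to be run with the interpreter you intend to use for these resources rather than the one bundled with the AWS CLI.

```python
import sys

# Minimum patch level per release series, read from the Python row above.
# Assumption: series not listed here (for example 3.7) are treated as unverified.
SUPPORTED_SERIES = {
    (2, 7): 5,
    (3, 6): 7,
    (3, 8): 2,
}


def is_listed_python(major, minor, micro):
    """Return True if major.minor is a listed series and micro meets its minimum."""
    minimum_micro = SUPPORTED_SERIES.get((major, minor))
    return minimum_micro is not None and micro >= minimum_micro


if __name__ == "__main__":
    v = sys.version_info
    status = "listed" if is_listed_python(v.major, v.minor, v.micro) else "not listed"
    print("Python %d.%d.%d is %s in the table" % (v.major, v.minor, v.micro, status))
```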

The following table lists the OS versions on AWS on which the operation of the AWS DNS resource and AWS DNS monitor resource has been verified.

x86_64

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2012 | 12.00 or later | |
| Windows Server 2012 R2 | 12.00 or later | |
| Windows Server 2016 | 12.00 or later | |
| Windows Server 2019 | 12.10 or later | |

4.2.10. Operation environment for Azure probe port resource, Azure probe port monitor resource and Azure load balance monitor resource¶

The following table lists the OS versions on Microsoft Azure on which the operation of the Azure probe port resource, Azure probe port monitor resource, and Azure load balance monitor resource has been verified.

x86_64

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2012 | 12.00 or later | |
| Windows Server 2012 R2 | 12.00 or later | |
| Windows Server 2016 | 12.00 or later | |
| Windows Server, version 1709 | 12.00 or later | |
| Windows Server 2019 | 12.10 or later | |

The following are the Microsoft Azure deployment models with which the operation of the Azure probe port resource, Azure probe port monitor resource, and Azure load balance monitor resource has been verified.

For the method to configure a load balancer, refer to "EXPRESSCLUSTER X HA Cluster Configuration Guide for Microsoft Azure (Windows)".

x86_64

| Deployment model | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Resource Manager | 12.00 or later | A load balancer is required. |

4.2.11. Operation environment for Azure DNS resource and Azure DNS monitor resource¶

Using the Azure DNS resource and Azure DNS monitor resource requires the following software.

| Software | Version | Remarks |
|---|---|---|
| Azure CLI | 2.0 or later | |
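A similar check can be sketched for the Azure CLI requirement (2.0 or later). This minimal Python example rests on an assumption about the output of `az --version`: it expects a line that starts with `azure-cli` followed by the version number, optionally in parentheses. The names `parse_azure_cli_version` and `azure_cli_ok` are hypothetical.

```python
import re

# Minimum Azure CLI version from the table above.
MIN_AZURE_CLI = (2, 0)


def parse_azure_cli_version(output):
    """Return the azure-cli version from `az --version` output as a tuple, or None.

    Assumption: the relevant line looks like 'azure-cli    2.0.81' or, in some
    older releases, 'azure-cli (2.0.25)'.
    """
    match = re.search(r"^azure-cli\s+\(?(\d+(?:\.\d+)*)", output, re.MULTILINE)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def azure_cli_ok(output):
    """True if the parsed major.minor version is at least MIN_AZURE_CLI."""
    version = parse_azure_cli_version(output)
    # Pad with zeros so a short version such as (2,) compares as (2, 0).
    return version is not None and (version + (0, 0))[:2] >= MIN_AZURE_CLI


if __name__ == "__main__":
    sample = "azure-cli                         2.0.81\n"
    print(parse_azure_cli_version(sample), azure_cli_ok(sample))
```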

The following table lists the OS versions on Microsoft Azure on which the operation of the Azure DNS resource and Azure DNS monitor resource has been verified.

x86_64

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2012 | 12.00 or later | |
| Windows Server 2012 R2 | 12.00 or later | |
| Windows Server 2016 | 12.00 or later | |
| Windows Server, version 1709 | 12.00 or later | |
| Windows Server 2019 | 12.10 or later | |

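The following are the Microsoft Azure deployment models with which the operation of the Azure DNS resource and Azure DNS monitor resource has been verified.

x86_64

| Deployment model | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Resource Manager | 12.00 or later | Azure DNS is required. |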

4.2.12. Operation environments for Google Cloud virtual IP resource, Google Cloud virtual IP monitor resource, and Google Cloud load balance monitor resource¶

The following table lists the OS versions on Google Cloud Platform on which the operation of the Google Cloud virtual IP resource, the Google Cloud virtual IP monitor resource, and the Google Cloud load balance monitor resource has been verified.

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2016 | 12.20 or later | |
| Windows Server 2019 | 12.20 or later | |

4.2.13. Operation environments for Google Cloud DNS resource and Google Cloud DNS monitor resource¶

Using the Google Cloud DNS resource and Google Cloud DNS monitor resource requires the following software.

| Software | Version | Remarks |
|---|---|---|
| Google Cloud SDK | 295.0.0 or later | |
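The Google Cloud SDK requirement can be checked in the same spirit. `gcloud --version` prints a line such as `Google Cloud SDK 295.0.0`; the minimal Python sketch below parses that line and compares it with a minimum of 295.0.0 or later. The output format is an assumption to verify on your own installation, and `parse_gcloud_sdk_version` and `gcloud_sdk_ok` are hypothetical names.

```python
import re

# Minimum Google Cloud SDK version from the table above (295.0.0 or later).
MIN_GCLOUD_SDK = (295, 0, 0)


def parse_gcloud_sdk_version(output):
    """Extract the SDK version from `gcloud --version` output, or return None.

    Assumption: the output contains a line like 'Google Cloud SDK 295.0.0'.
    """
    match = re.search(r"Google Cloud SDK (\d+)\.(\d+)\.(\d+)", output)
    return tuple(int(group) for group in match.groups()) if match else None


def gcloud_sdk_ok(output):
    """True if the parsed SDK version is at least MIN_GCLOUD_SDK."""
    version = parse_gcloud_sdk_version(output)
    return version is not None and version >= MIN_GCLOUD_SDK


if __name__ == "__main__":
    sample = "Google Cloud SDK 295.0.0\n"
    print(parse_gcloud_sdk_version(sample), gcloud_sdk_ok(sample))
```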

The following table lists the OS versions on Google Cloud Platform on which the operation of the Google Cloud DNS resource and Google Cloud DNS monitor resource has been verified.

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2019 | 12.30 or later | |

4.2.14. Operation environments for Oracle Cloud virtual IP resource, Oracle Cloud virtual IP monitor resource, and Oracle Cloud load balance monitor resource¶

The following table lists the OS versions on Oracle Cloud Infrastructure on which the operation of the Oracle Cloud virtual IP resource, the Oracle Cloud virtual IP monitor resource, and the Oracle Cloud load balance monitor resource has been verified.

| Distribution | EXPRESSCLUSTER Version | Remarks |
|---|---|---|
| Windows Server 2012 R2 | 12.20 or later | |
| Windows Server 2016 | 12.20 or later | |

4.3. System requirements for the Cluster WebUI¶

4.3.1. Supported operating systems and browsers¶

| Browser | Language |
|---|---|
| Internet Explorer 11 | English/Japanese/Chinese |
| Internet Explorer 10 | English/Japanese/Chinese |
| Firefox | English/Japanese/Chinese |
| Google Chrome | English/Japanese/Chinese |

Note

When you connect to Cluster WebUI by using an IP address, that IP address must be registered in advance as a site in the Local intranet zone.

Note

When you access Cluster WebUI with Internet Explorer 11, Internet Explorer may stop with an error. To avoid this, apply update KB4052978 or later to Internet Explorer. To apply KB4052978 or later on Windows 8.1 or Windows Server 2012 R2, apply KB2919355 first. For details, see the information published by Microsoft.

Note

No mobile devices, such as tablets and smartphones, are supported.

4.3.2. Required memory size and disk size¶

Required memory size: 500 MB or more

Required disk size: 200 MB or more
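On the machine that runs Cluster WebUI, free disk space can be compared with the 200 MB requirement by using Python's standard `shutil.disk_usage`. This minimal sketch assumes 1 MB = 1024 × 1024 bytes (the guide does not say which definition applies) and checks only disk space, because the standard library has no portable query for total memory. `enough_disk` and `check_disk` are hypothetical names.

```python
import shutil

# Disk requirement stated above for the machine running Cluster WebUI.
# Assumption: "MB" is interpreted as 1024 * 1024 bytes.
REQUIRED_DISK_MB = 200
BYTES_PER_MB = 1024 * 1024


def enough_disk(free_bytes, required_mb=REQUIRED_DISK_MB):
    """True if free_bytes covers the required disk size."""
    return free_bytes >= required_mb * BYTES_PER_MB


def check_disk(path="."):
    """Return (free space in MB, requirement met) for the volume containing path."""
    usage = shutil.disk_usage(path)
    return usage.free // BYTES_PER_MB, enough_disk(usage.free)


if __name__ == "__main__":
    free_mb, ok = check_disk()
    print("free: %d MB, meets %d MB requirement: %s" % (free_mb, REQUIRED_DISK_MB, ok))
```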

5. Latest version information¶

This chapter provides the latest information on EXPRESSCLUSTER. The latest information on upgraded and improved functions is described in detail.

This chapter covers:

5.1. Correspondence list of EXPRESSCLUSTER and a manual¶

The descriptions in this manual assume the following version of EXPRESSCLUSTER. Make sure to check the correspondence between the EXPRESSCLUSTER version and the edition of each manual.

| EXPRESSCLUSTER Internal Version | Manual | Edition | Remarks |
|---|---|---|---|
| 12.34 | Getting Started Guide | 6th Edition | |
| | Installation and Configuration Guide | 2nd Edition | |
| | Reference Guide | 5th Edition | |
| | Maintenance Guide | 1st Edition | |
| | Hardware Feature Guide | 1st Edition | |
| | Legacy Feature Guide | 3rd Edition | |

5.2. New features and improvements¶

The following features and improvements have been released.

| No. | Internal Version | Contents |
|---|---|---|

| 1 | 12.00 | Management GUI has been upgraded to Cluster WebUI. |
| 2 | 12.00 | HTTPS is supported for Cluster WebUI and WebManager. |
| 3 | 12.00 | The fixed term license is released. |
| 4 | 12.00 | The maximum number of mirror disk and/or hybrid disk resources has been expanded. |
| 5 | 12.00 | Windows Server, version 1709 is supported. |
| 6 | 12.00 | SQL Server monitor resource supports SQL Server 2017. |
| 7 | 12.00 | Oracle monitor resource supports Oracle Database 12c R2. |
| 8 | 12.00 | PostgreSQL monitor resource supports PowerGres on Windows 9.6. |
| 9 | 12.00 | WebOTX monitor resource supports WebOTX V10.1. |
| 10 | 12.00 | JVM monitor resource supports Apache Tomcat 9.0. |
| 11 | 12.00 | JVM monitor resource supports WebOTX V10.1. |
| 12 | 12.00 | The following monitor targets have been added to JVM monitor resource. |
| 13 | 12.00 | AWS DNS resource and AWS DNS monitor resource have been added. |
| 14 | 12.00 | Azure DNS resource and Azure DNS monitor resource have been added. |

| 15 | 12.00 | The clpstdncnf command, which edits the cluster termination behavior when an OS shutdown is initiated by something other than the cluster, has been added. |
| 16 | 12.00 | Monitoring behavior to detect error or timeout has been improved. |
| 17 | 12.00 | The function to execute a script before or after group resource activation or deactivation has been added. |
| 18 | 12.00 | The function to disable emergency shutdown for servers included in the same server group has been added. |
| 19 | 12.00 | The function to create a rule for exclusive attribute groups has been added. |
| 20 | 12.00 | The failover count method has been improved so that it can be selected per server or per cluster. |
| 21 | 12.00 | Internal communication has been improved to reduce TCP port usage. |
| 22 | 12.00 | The list of files for log collection has been revised. |
| 23 | 12.00 | The Difference Bitmap Size, which stores differential data for mirror disk and hybrid disk resources, is now tunable. |
| 24 | 12.00 | The History Recording Area Size in Asynchronous Mode for mirror disk and hybrid disk resources is now tunable. |
| 25 | 12.01 | When HTTPS is unavailable in WebManager due to inadequate settings, a message is now output to the event log and alert log. |

| 26 | 12.10 | Windows Server, version 1803 is supported. |
| 27 | 12.10 | Windows Server, version 1809 is supported. |
| 28 | 12.10 | Windows Server 2019 is supported. |
| 29 | 12.10 | Oracle monitor resource supports Oracle Database 18c. |
| 30 | 12.10 | Oracle monitor resource supports Oracle Database 19c. |
| 31 | 12.10 | PostgreSQL monitor resource supports PostgreSQL 11. |
| 32 | 12.10 | PostgreSQL monitor resource supports PowerGres V11. |
| 33 | 12.10 | Python 3 is supported by the following resources/monitor resources: |
| 34 | 12.10 | MSI installers and the pip-installed AWS CLI (aws.cmd) are supported by the following resources/monitor resources: |
| 35 | 12.10 | The Connector for SAP for SAP NetWeaver supports the following SAP NetWeaver: |
| 36 | 12.10 | The Connector for SAP/the bundled scripts for SAP NetWeaver support the following: |
| 37 | 12.10 | Cluster WebUI supports cluster construction and reconfiguration. |
| 38 | 12.10 | The DB rest point command for PostgreSQL has been added. |
| 39 | 12.10 | The DB rest point command for DB2 has been added. |
| 40 | 12.10 | The Witness heartbeat resource has been added. |
| 41 | 12.10 | The HTTP network partition resolution resource has been added. |

| 42 | 12.10 | The number of settings whose changes can be applied to the cluster configuration without suspending business operations has been increased. |
| 43 | 12.10 | A function has been added to check for duplicate floating IP addresses when a failover group is started up. |
| 44 | 12.10 | A function has been added to delay automatic failover by a specified time when a heartbeat timeout is detected between server groups in a remote cluster configuration. |
| 45 | 12.10 | The number of environment variables that can be used in the start and stop scripts of script resources has been increased. |
| 46 | 12.10 | A function has been added to check the execution result of the forced stop script and to suppress failover. |
| 47 | 12.10 | A function has been added to set the path to perl.exe used by the virtual machine management tool (vCLI 6.5) in the forced stop function. |