1. Preface¶

1.1. Who Should Use This Guide¶

The EXPRESSCLUSTER X Maintenance Guide describes maintenance-related information, intended for administrators. See this guide for information required for operating the cluster.

1.2. How This Guide is Organized¶

2. The system maintenance information: Provides maintenance information for EXPRESSCLUSTER.

1.3. EXPRESSCLUSTER X Documentation Set¶

The EXPRESSCLUSTER X manuals consist of the following four guides. The title and purpose of each guide is described below:

This guide is intended for all users. The guide covers topics such as product overview, system requirements, and known problems.

Installation and Configuration Guide

This guide is intended for system engineers and administrators who want to build, operate, and maintain a cluster system. Instructions for designing, installing, and configuring a cluster system with EXPRESSCLUSTER are covered in this guide.

This guide is intended for system administrators. The guide covers topics such as how to operate EXPRESSCLUSTER, function of each module and troubleshooting. The guide is supplement to the "Installation and Configuration Guide".

Maintenance Guide

This guide is intended for administrators and for system administrators who want to build, operate, and maintain EXPRESSCLUSTER-based cluster systems. The guide describes maintenance-related topics for EXPRESSCLUSTER.

1.4. Conventions¶

In this guide, Note, Important, See also are used as follows:

Note

Used when the information given is important, but not related to the data loss and damage to the system and machine.

Important

Used when the information given is necessary to avoid the data loss and damage to the system and machine.

See also

Used to describe the location of the information given at the reference destination.

The following conventions are used in this guide.

Convention |

Usage |

Example |

|---|---|---|

Bold |

Indicates graphical objects, such as fields, list boxes, menu selections, buttons, labels, icons, etc. |

In User Name, type your name.

On the File menu, click Open Database.

|

Angled bracket within the command line |

Indicates that the value specified inside of the angled bracket can be omitted. |

|

Monospace |

Indicates path names, commands, system output (message, prompt, etc), directory, file names, functions and parameters. |

|

bold |

Indicates the value that a user actually enters from a command line. |

Enter the following:

clpcl -s -a

|

italic |

Indicates that users should replace italicized part with values that they are actually working with. |

|

In the figures of this guide, this icon represents EXPRESSCLUSTER.

In the figures of this guide, this icon represents EXPRESSCLUSTER.

1.5. Contacting NEC¶

For the latest product information, visit our website below:

2. The system maintenance information¶

This chapter provides information you need for maintenance of your EXPRESSCLUSTER system. Resources to be managed are described in detail.

This chapter covers:

2.4. System resource statistics information collection function

2.5. Process resource statistics information collection function

2.7. Function for outputting the operation log of Cluster WebUI

2.8. Function for outputting an API service operation log file

2.11. Limit on the band for mirror disk connect communication

2.11.2. Procedure for suspending or releasing the limit on the band for mirror disk connect communication

2.13. Configuring the settings to temporarily prevent execution of failover

2.26. How to restore the mirror/hybrid disk from the disk image

2.34. Replacing the disk array controller (DAC)/updating the firmware

2.36. Updating data encryption key file of mirror/hybrid disk resources

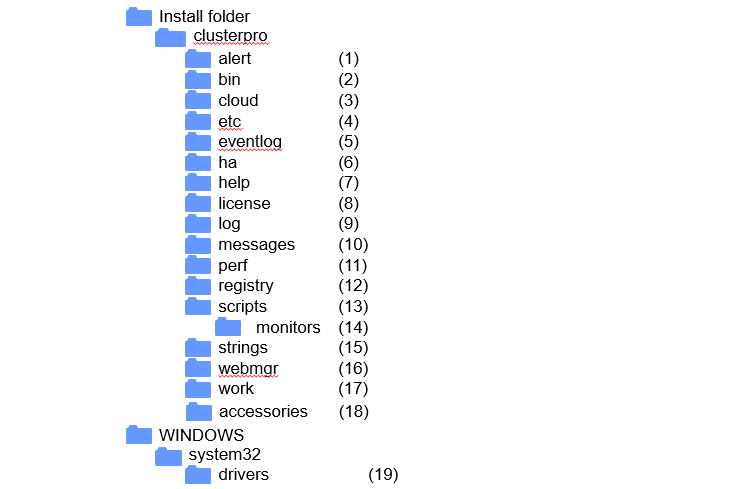

2.1. Directory structure of EXPRESSCLUSTER¶

Note

You will find executable files and script files that are not described in "EXPRESSCLUSTER command reference" in the "Reference Guide" under the installation directory. Run these files only with EXPRESSCLUSTER. Any failures or troubles caused by executing them by using applications other than EXPRESSCLUSTER are not supported.

EXPRESSCLUSTER directories are structured as described below:

Fig. 2.1 Directory structure¶

- Directory for alert synchronizationThis directory stores EXPRESSCLUSTER Alert Synchronization's modules and management files.

- Directory for cluster modulesThis directory stores the EXPRESSCLUSTER Server's executable files and libraries.

- Directory for cloud environmentThis directory stores script files for cloud environment.

- Directory for cluster configuration dataThis directory stores the cluster configuration files and policy file of each module.

- Directory for event logsThis directory stores libraries that are related to the EXPRESSCLUSTER event logs.

- Directory for HA productsThe directory stores binary files and setting files of Java Resource Agent and System Resource Agent.

- Directory related to HelpNot used now.

- Directory for licensesThis directory stores licenses for licensed products.

- Directory for module logsThis directory stores logs produced by each module.

- Directory for report messages (alert, event log)This directory stores alert and event log messages reported by each module.

- Directory for performance logThis directory stores performance log of mirror or hybrid disk resources and system resource of OS.

- Directory for the registryNot used now.

- Directory for script resource scripts of group resourcesThis directory stores script resource scripts of group resources.

- Directory for the recovery script executedThis directory stores the script executed when an error is detected in the group resource or monitor resource.

- Directory for the string tableThis directory stores string tables used in EXPRESSCLUSTER.

- Directory for the WebManager server and Cluster WebUI.This directory stores the WebManager server modules and management files.

- Directory for module tasksThis is a work directory for modules.

- Directory for other ancillary modulesThis directory stores libraries necessary for integration with other products.In normal cases, please do not use this directory.

- Directory for cluster driversThis directory stores drivers for kernel mode LAN heartbeat and disk filter.

2.2. How to delete EXPRESSCLUSTER logs or alerts¶

To delete EXPRESSCLUSTER logs or alerts, perform the following procedure.

Disable all cluster services on all servers in a cluster.

clpsvcctrl.bat --disable -a

Shut down the cluster with the Cluster WebUI or clpstdn command, and then reboot the cluster.

To delete logs, delete the files in the following folder. Perform this operation on the server for which you want to delete the logs.

<EXPRESSCLUSTER installation path>\log

To delete alerts, delete the files in the following folder. Perform this operation on the server for which you want to delete the alerts.

<EXPRESSCLUSTER installation path>\log

Enable all cluster services on all servers in a cluster.

clpsvcctrl.bat --enable -a

Restart all the servers in the cluster.

2.3. Mirror statistics information collection function¶

2.3.1. What is the mirror statistics information collection function?¶

The mirror statistics information collection function collects statistics information related to the mirror function that is obtained from each mirror source in mirror disk and hybrid disk configurations.

Using the Windows OS functions (performance monitor and typeperf command), the mirror statistics information collection function can collect mirror statistics information for EXPRESSCLUSTER X and display the collected information in real time. Moreover, it can continuously output mirror statistics information to a statistic log file from the instant that the mirror is constructed.

As shown below, the collected mirror statistics information can be used during mirror construction and mirror operation.

During mirror construction |

To tune the mirror setting items in the current environment, you can adjust the optimum setting by checking how each setting item influences the current environment. |

|---|---|

During mirror operation |

You can monitor the situation to determine whether a problem is likely to occur.

Moreover, analysis performance improves because mirror statistics information can be collected before and after failure occurrence.

|

2.3.2. Linkage between the mirror statistics information collection function and OS standard functions¶

Using the OS standard functions

Using the performance monitor and typeperf command, mirror statistics information can be collected and that information displayed in real time. Any counter can be selected from the subsequent "Counter names" list to continuously display and collect information over a fixed period of time. This allows you to visually check whether the mirror-related setting values are suitable for the constructed environment or whether an error has occurred during the collection of the statistics information.

For the procedure for using the performance monitor and typeperf command, see the subsequent items "Displaying mirror statistics information with the performance monitor," "Collecting mirror statistics information from the performance monitor", and "Collecting mirror statistics information from the typeperf command."

Specifying an object name

The object name used with the mirror statistics information collection function is "Cluster Disk Resource Performance" Specifying the "Cluster Disk Resource Performance" object enables the collection of mirror statistics information.

Specifying a counter name

The counter names used by the mirror statistics information collection function are listed below.

Counter name

Meaning

Unit

Description

% Compress Ratio

Compression ratio

%

Compression ratio of the mirror data to be sent to a remote server. The ratio of the compressed data size relative to the original data is used. Therefore, if 100 MB of data is compressed to 80 MB, the compression ratio is 80%.

Async Application Queue BytesAsync Application Queue Bytes, MaxApplication queue size (instantaneous value/maximum value)

Byte

Amount of data which is retained in the user space memory and which has yet to be sent during asynchronous mirror communication. The value that appears when the latest data is collected is an instantaneous value while the value that appears when the amount of data to be retained is the greatest is the maximum value.

Async Kernel Queue BytesAsync Kernel QueueBytes, MaxKernel queue size (instantaneous value/maximum value)

Byte

Amount of data which is retained in the kernel space memory and which has yet to be sent during asynchronous mirror communication. The value that appears when the latest data is collected is an instantaneous value while the value that appears when the amount of data to be retained is the greatest is the maximum value.

Async Mirror Queue Transfer TimeAsync Mirror Queue Transfer Time, MaxTime for transfer from the kernel queue to the application queue (average value/maximum value)

msec

Average value/maximum value of the time needed to transfer data from the kernel space memory to the user space memory during asynchronous mirror communication

Async Mirror Send Wait History Files Total BytesAsync Mirror Send Wait History Files Total Bytes, MaxHistory file usage (instantaneous value/maximum value)

Byte

Total size of the data files accumulated in the history file storage folder and which have yet to be sent during asynchronous mirror communication. The value that appears when the latest data is collected is an instantaneous value while the value that appears when the amount of accumulated data is the greatest is the maximum value.

Async Mirror Send Wait Total BytesAsync Mirror Send Wait Total Bytes, MaxAmount of data yet to be sent (instantaneous value/maximum value)

Byte

Total amount of mirror data which is to be sent to a remote server and which has yet to be sent during asynchronous mirror communication. The value that appears when the latest data is collected is an instantaneous value while the value that appears when the amount of data that has yet be sent is the greatest is the maximum value.

Mirror Bytes SentMirror Bytes Sent/secMirror transmission amount (total value/average value)

Byte(Byte/sec)Number of bytes of mirror data sent to a remote server. The total number of bytes that appears until the latest data is collected is the total value while the number of bytes to be sent per second is the average value.

Request Queue BytesRequest Queue Bytes, MaxRequest queue size (instantaneous value/maximum value)

Byte

Amount of queue used when an IO request is received during mirror communication. The value that appears when the latest data is collected is an instantaneous value while that the value that appears when the queue size is the greatest is the maximum value.

Transfer Time, AvgTransfer Time, MaxMirror communication time (average value/maximum value)

msec/time

Communication time per mirror communication used during mirror data transmission. The communication time averaged by the number of times of mirror communication used until the latest data is collected is the average value while the communication time per mirror communication which was the greatest is the maximum value.

- Specifying the instance nameThe instance name to be used by the mirror statistics information collection function is "MD,HD ResourceX." X indicates a mirror disk number/hybrid disk number from 1 to 22.For example, if the mirror disk number of mirror disk resource "MD" is set to "2", the mirror statistics information relating to resource "MD" can be collected by specifying instance "MD,HD Resource2."Moreover, if two or more resources are set, specifying instance "_Total" can collect information totalized by mirror statistics information relating to all resources that have been set.

Note

Specify the instance name corresponding to the mirror disk number/hybrid disk number for which a resource is set. An instance for which no resource is set can be specified; however, mirror statistics information cannot be displayed/collected.

Using mirror statistics information

Mirror statistics information that has actually been collected can be used to adjust the mirror-related setting values. If, for example, the communication speed and communication load can be confirmed from the collected mirror statistics information, it may be possible to improve the communication speed by turning the mirror-related setting values.

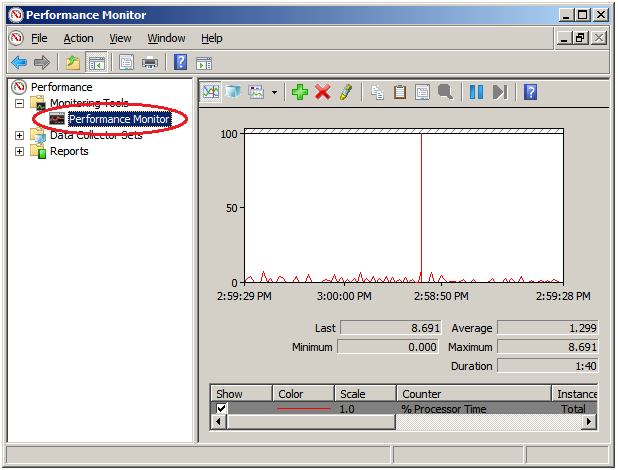

Displaying mirror statistics information with the performance monitor

Procedure for displaying the mirror statistics information to be collected in real time

From the Start menu, start Administrative Tools - Performance Monitor.

Select the performance monitor.

Click the + button or right-click to execute Add Counters from the menu.

Save the counter setting added with File - Save as.

Starting from the saved setting, you can repeatedly use the same counter setting.

The procedure is detailed below.Here, "Mirror Bytes Sent," or one item of mirror statistics information, is collected as an example. The target instance is assumed to be "MD/HD Resource1."From the Start menu, start Administrative Tools - Performance.

- From the left-hand menu tree in the window, select Performance Monitor.

The performance monitor window appears on the right-hand side of the window.

The performance monitor window appears on the right-hand side of the window. - Click the + button or right-click to execute Add Counters from the menu.

If the operation conditions are satisfied, the additional counter/instance is displayed.Select Cluster Disk Resource Performance, select counter Mirror Bytes Sent and instance MD,HD Resource1 and then click Add.

If the operation conditions are satisfied, the additional counter/instance is displayed.Select Cluster Disk Resource Performance, select counter Mirror Bytes Sent and instance MD,HD Resource1 and then click Add.Note

If Cluster Disk Resource Performance is not displayed, the linkage function is disabled. In this case, execute the following command at the command prompt to enable the linkage function, and then retry the procedure from step 1.

>lodctr.exe <EXPRESSCLUSTER installation path>\perf\clpdiskperf.ini

Save the counter setting added with File - Save as.

Starting from the saved setting, you can repeatedly use the same counter setting.

Collecting mirror statistics information from the performance monitor

The following explains the procedure for collecting the log file of mirror statistics information from the performance monitor.

Procedure for collecting the log file

From the Start menu, start Administrative Tools - Performance Monitor.

Create a new data collector set with Data Collector Sets - User Defined.

From Create Data Log, select Performance Counter and then click Add.

Select Cluster Disk Resource Performance and then add the counter and instance to be collected.

Start log collection.

The procedure is detailed below.Here, "Mirror Bytes Sent," or one item of mirror statistics information, is collected as an example. The target instance is assumed to be "MD/HD Resource1."From the Start menu, start Administrative Tools - Performance Monitor.

From Data Collector Sets - User Defined, select Operation - New, or from New of the right-click option, specify Data Collector Set.

Enter any name as the data collector set name.

As the data collector set creation method, select Create manually (Details) (C).

From Create Data Log, select Performance Counter and then click Add.

- Add a counter. Here, after selecting Mirror Bytes Sent from Cluster Disk Resource Performance, select MD,HD Resource1 from Instances of Selected object, and then click Add.MD,HD Resource1 of Mirror Bytes Sent is added to Added Counter.After adding all the counters to be collected, click OK and then select Finish.

Note

If Cluster Disk Resource Performance is not displayed, the linkage function is disabled. In this case, execute the following command at the command prompt to enable the linkage function, and then retry the procedure from step 1.

>lodctr.exe <EXPRESSCLUSTER installation path>\perf\clpdiskperf.ini

Start log collection. Execute Start from the menu with Data Collector Sets - User Defined - (Data Collector Set Name).

Collecting mirror statistics information from the typeperf command

The following explains the procedure for collecting the mirror statistics information from the typeperf command.

From the Start menu, start Programs - Accessories - Command Prompt.

Execute typeperf.exe.

The following explains the use example in detail.

[Use example 1] Collecting the mirror communication time (specifying all instances EXPRESSCLUSTER Resource)

Case in which MD resources: md01 to md04 and HD resources: hd05 to hd08 are already registeredHowever, each resource is set as follows:The md01 mirror disk number is 1. The md02 mirror disk number is 2. : The hd07 hybrid disk number is 7. The hd08 hybrid disk number is 8.

In line 1 below, the typeperf command is executed to collect the mirror communication time.Lines 2 through 11 show the column headers of the results.Here, the column headers are separated into each of the lines for readability, which are actually printed in one line.From line 12, the actually collected statistics data is displayed.Columns separated by "," indicate the following values, starting from left to right:"Sampling time", each communication time of "md01", "md02", "md03", "md04", "hd05", "hd06", "hd07", and "hd08"

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19

C:\>typeperf "\Cluster Disk Resource Performance(*)\Transfer Time, Avg" "(PDH-CSV 4.0)","\\v-ra1w2012\\Cluster Disk Resource Performance(*)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource1)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource2)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource3)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource4)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource5)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource6)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource7)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(MD/HD Resource8)\Transfer Time, Avg", "\\v-ra1w2012\Cluster Disk Resource Performance(_Total)\Transfer Time, Avg" "03/03/2010 15:21:24.546","0.24245658","0.3588965","0.488589","0.24245658","0.3588965","0.488577","0.3588965","0.488589" "03/03/2010 15:21:24.546","0.21236597","0.6465466","0.488589","0.24245658","0.3588965","0.488589","0.2588965","0.288589" "03/03/2010 15:21:24.546","0.24465858","0.7797976","0.488589","0.13123213","0.4654699","0.488544","0.6588965","0.288589" "03/03/2010 15:21:24.546","0.85466658","0.5555565","0.488589","0.24245658","0.3588965","0.485689","0.7588965","0.388589" "03/03/2010 15:21:24.546","0.46564468","0.3123213","0.488589","0.24245658","0.4388965","0.482289","0.8888965","0.338589" "03/03/2010 15:21:24.546","0.85858998","0.3588965","0.488589","0.44245658","0.2288965","0.483289","0.3768965","0.228589" "03/03/2010 15:21:24.546","0.47987964","0.3588965","0.488589","0.64245658","0.1288965","0.488214","0.3488965","0.428589" "03/03/2010 15:21:24.546","0.88588596","0.3588965","0.488589","0.84245658","0.1588965","0.484449","0.3668965","0.422589"

[Use example 2] Collecting the amount of mirror data transmission (specifying the hd05 resource for the instance)

Case in which MD resources: md01 to md04 and HD resources: hd05 to hd08 are already registeredHowever, each resource is set as follows:The md01 mirror disk number is 1. The md02 mirror disk number is 2. : The hd07 hybrid disk number is 7. The hd08 hybrid disk number is 8.

In line 1 below, the typeperf command is executed to collect the amount of mirror data transmission.Line 2 shows the column headers of the results.From line 3, the actually collected statistics data is displayed.Columns separated by "," indicate the following values, starting from left to right:"Sampling time", the amount of data transmission of "hd05"

1 2 3 4 5 6 7 8 9 10

C:\>typeperf "\Cluster Disk Resource Performance(MD/HD Resource5)\Mirror Bytes Sent/sec" "(PDH-CSV 4.0)","\\v-ra1w2012\\Cluster Disk Resource Performance(MD/HD Resource5)\Mirror Bytes Sent/sec" "03/03/2010 15:21:24.546","52362", "03/03/2010 15:21:24.546","45564", "03/03/2010 15:21:24.546","25560", "03/03/2010 15:21:24.546","25450", "03/03/2010 15:21:24.546","22560", "03/03/2010 15:21:24.546","21597", "03/03/2010 15:21:24.546","35999", "03/03/2010 15:21:24.546","25668",

[Use example 3] Outputting the compression ratio to the log (specifying the hd01 resource for the instance)

Case in which MD resources: md01 to md04 and HD resources: hd05 to hd08 are already registeredHowever, each resource is set as follows:The md01 mirror disk number is 1. The md02 mirror disk number is 2. : The hd07 hybrid disk number is 7. The hd08 hybrid disk number is 8.

CSV is specified as the log file format andC:\PerfData\hd01.csvas the file output destination path.C:\>typeperf "\Cluster Disk Resource Performance(MD/HD Resource1)\% Compress Ratio" -f CSV -o C:\PerfData\hd01.csv

Use [Ctrl]+[C] to stop the log output after command execution.[Use example 4] Displaying the counter list (specifying no instance)

Case in which MD resources: md01 to md04 and HD resources: hd05 to hd08 are already registeredHowever, each resource is set as follows:The md01 mirror disk number is 1. The md02 mirror disk number is 2. : The hd07 hybrid disk number is 7. The hd08 hybrid disk number is 8.

In line 1 below, the typeperf command is executed to display the counter list.From line 2, the counters are displayed.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18

C:\>typeperf -q "\ Cluster Disk Resource Performance" \Cluster Disk Resource Performance(*)\% Compress Ratio \Cluster Disk Resource Performance(*)\Async Application Queue Bytes \Cluster Disk Resource Performance(*)\Async Application Queue Bytes, Max \Cluster Disk Resource Performance(*)\Async Kernel Queue Bytes \Cluster Disk Resource Performance(*)\Async Kernel Queue Bytes, Max \Cluster Disk Resource Performance(*)\Async Mirror Queue Transfer Time \Cluster Disk Resource Performance(*)\Async Mirror Queue Transfer Time, Max \Cluster Disk Resource Performance(*)\Async Mirror Send Wait History Files Total Bytes \Cluster Disk Resource Performance(*)\Async Mirror Send Wait History Files Total Bytes, Max \Cluster Disk Resource Performance(*)\Async Mirror Send Wait Total Bytes \Cluster Disk Resource Performance(*)\Async Mirror Send Wait Total Bytes, Max \Cluster Disk Resource Performance(*)\Mirror Bytes Sent \Cluster Disk Resource Performance(*)\Mirror Bytes Sent/sec \Cluster Disk Resource Performance(*)\Request Queue Bytes \Cluster Disk Resource Performance(*)\Request Queue Bytes, Max \Cluster Disk Resource Performance(*)\Transfer Time, Avg \Cluster Disk Resource Performance(*)\Transfer Time, Max

In addition, sampling interval change, command issuance to a remote server, and the like can all be specified as options.Use "Typeperf -?" to confirm the details of the options.

2.3.3. Operation of the mirror statistics information collection function¶

Mirror statistics information log output (automatic) during operation

The mirror statistics information collection function continuously collects statistics information in the environment in which the operation condition is satisfied and then outputs it to the statistic log file. Mirror statistics information collection and log output are performed automatically. Statistic log output is detailed below.

Item

Operation

Description

Output file name

nmp_<n>.curnmp_<n>.pre<x>nmp_total.curnmp_total.pre<x><n> indicates the mirror disk No. or hybrid disk No.cur is the newest, followed by pre, pre1, pre2, ..., in the newest to oldest order. The larger the number, the older.When the prescribed number of log files is exceeded, existing logs are deleted, starting with the oldest.total indicates the total data of all mirror disk resources/hybrid disk resources.Output file format

Text file

Data is output to the file in the comma-separated (CSV) text format.One-line data is output for each information collection.Output destination folder

EXPRESSCLUSTER installation folder\perf\diskData is output within the work folder immediately under the EXPRESSCLUSTER installation folder.

Resource to be output

For each resource+ totalLog is output to one file for each mirror disk resource or hybrid disk resource that was set.If no resource is set, no log file is created.If one or more log files are created, the Total log file indicating the total value of all the resources is also created.Output timing

Per minute

Information is output every minute.No log output occurs if the mirror statistics information output function is disabled.If the mirror statistics information log output operation is disabled, no log output occurs even though the mirror statistics information collection function is operating.Output file size

About 16 MB

The maximum size of one file is about 16 MB.If the upper size limit is exceeded, the log file is automatically rotated and the previous log file is saved.Even if the upper size limit is not exceeded, the log file may be rotated automatically when the output data is changed.Number of log rotations

12 generations

Up to 12 generations of log files are saved through log file rotations.If the upper rotation limit is exceeded, the oldest generation log file is automatically deleted.

2.3.4. Operation conditions of the mirror statistics information collection function¶

The mirror statistics information collection function runs when the following conditions are satisfied:

The EXPRESSCLUSTER Disk Agent service is active normally.

One or more mirror disk resources or hybrid disk resources are set.

The mirror statistic information collection function is enabled in cluster properties.

Confirm the EXPRESSCLUSTER Disk Agent service status.

From the Start menu, start Server Management - Service.Confirm that the EXPRESSCLUSTER Disk Agent service status is Start.Confirm that Startup Type is Auto.The server is required to be restarted if the service status is not Start.

Confirm the mirror setting.

Start Cluster WebUI.Confirm that the mirror disk resource or hybrid disk resource is set.

Confirm the setting of the mirror statistics information collection function.

Start Cluster WebUI.Change the mode to Config mode.Check the setting of Mirror Statistics in the Statistics tab in Cluster Properties.

For details of Cluster WebUI, see the online manual of Cluster WebUI.

2.3.5. Notes on the mirror statistics information collection function¶

To operate the mirror statistics information collection function, the free space (up to about 8.9 GB) is required on disk to record the statistic log file of the mirror statistics information.

Up to 32 processes can be started for a single server with both the performance monitor and typeperf commands combined. No mirror statistics information can be collected if more than 32 performance monitors or typeperf commands are executed for a single server.

- More than one of statistical information acquisition can't be done in 1 process.For example the computer which is a target from more than one performance monitor on the other computers, and the occasion from which more than one data collect is extracted by 1 performance monitor, etc.

- The extracted mirror statistics information is included in the logs collected by the clplogcc command or Cluster WebUI.Specify type5 to collect the log by the clplogcc command; specify Pattern 5 to collect the log by the Cluster WebUI. For details about log collection, see "Collecting logs (clplogcc command)" in "EXPRESSCLUSTER command reference" in the "Reference Guide" or the online manual.

2.4. System resource statistics information collection function¶

If the System Resource Statistics check box is checked on the Statistics tab of Cluster Properties in the Cluster WebUI config mode, or if system monitor resources or process resource monitor resources are added to the cluster, information on the system resource is collected and saved under <installation path>/perf/system according to the following file naming rules. The file format is CSV (text). In the following explanations, this file is referred to as the system resource statistics information file.

system.cur

system.pre

|

|

|---|---|

cur |

Indicates the latest information output destination. |

pre |

Indicates the previous, rotated, information output destination. |

The collected information is saved to the system resource statistics information file. The output interval (sampling interval) of statistics information is 60 seconds. If the size of current log file reached 16MB, log rotation occurs and the information is saved to a new log file (two generation log files can be used). Information saved to the system resource statistics information file can be used as a reference for analyzing the system performance. The collected statistics information contains the following items.

Statistic value name |

Unit |

Description |

|---|---|---|

CPUCount |

Quantity |

The number of CPUs |

CPUUtilization |

% |

Utilization of CPU |

MemoryTotalSize |

KByte |

Total memory size |

MemoryCurrentSize |

KByte |

Utilization of memory |

SwapTotalSize |

KByte |

Total swap size |

SwapCurrentSize |

KByte |

Utilization of swap |

ThreadCurrentSize |

Quantity |

The number of threads |

FileCurrentSize |

Quantity |

The number of opened files |

ProcessCurrentCount |

Quantity |

The number of processes |

AvgDiskReadQueueLength__Total |

Quantity |

The number of read requests queued in disk |

AvgDiskWriteQueueLength__Total |

Quantity |

The number of write requests queued in disk |

DiskReadBytesPersec__Total |

Byte |

The number of bytes transferred from disk by read operation |

DiskWriteBytesPersec__Total |

Byte |

The number of bytes transferred to disk by write operation |

PercentDiskReadTime__Total |

tick |

Busy time occurred while disk handles read requests |

PercentDiskWriteTime__Total |

tick |

Busy time occurred while disk handles write requests |

PercentIdleTime__Total |

tick |

Disk idle time |

CurrentDiskQueueLength__Total |

Quantity |

The number of requests remained in disk when performance data are collected |

The following output is an example of system resource statistics information file.

system.cur

"Date","CPUCount","CPUUtilization","MemoryTotalSize","MemoryCurrentSize","SwapTotalSize","SwapCurrentSize","ThreadCurrentSize","FileCurrentSize","ProcessCurrentCount","AvgDiskReadQueueLength__Total","AvgDiskWriteQueueLength__Total","DiskReadBytesPersec__Total","DiskWriteBytesPersec__Total","PercentDiskReadTime__Total","PercentDiskWriteTime__Total","PercentIdleTime__Total","CurrentDiskQueueLength__Total"

"2019/11/14 17:18:57.751","2","11","2096744","1241876","393216","0","1042","32672","79","623078737","241067820","95590912","5116928","623078737","241067820","305886514","0"

"2019/11/14 17:19:57.689","2","3","2096744","1234892","393216","0","926","31767","77","14688814","138463292","3898368","7112192","14688814","138463292","530778498","0"

"2019/11/14 17:20:57.782","2","2","2096744","1194400","393216","26012","890","30947","74","8535798","189735393","3802624","34398208","8535798","189735393","523400261","0"

:

2.5. Process resource statistics information collection function¶

If the System Resource Statistics check box is checked on the Statistics tab of Cluster Properties in the Cluster WebUI config mode, or if system monitor resources or process resource monitor resources are added to the cluster, information on the process resource is collected and saved under <installation path>/perf/system according to the following file naming rules. The file format is CSV (text). In the following explanations, this file is referred to as the process resource statistics information file.

process.cur

process.pre

|

|

|---|---|

cur |

Indicates the latest information output destination. |

pre |

Indicates the previous, rotated, information output destination. |

The collected information is saved to the process resource statistics information file. The output interval (sampling interval) of statistics information is 60 seconds. If the size of current log file reached 32MB, log rotation occurs and the information is saved to a new log file (two generation log files can be used). Information saved to the process resource statistics information file can be used as a reference for analyzing the process performance. The collected statistics information contains the following items.

Statistic value name |

Unit |

Description |

|---|---|---|

PID |

- |

Process ID |

CPUUtilization |

% |

CPU utilization |

MemoryPhysicalSize |

Byte |

Physical memory usage |

ThreadCurrentCount |

Quantity |

Number of running threads |

FileCurrentCount |

Quantity |

Number of opening files |

ProcessName |

- |

Process name

* Outputted not in double quotes.

|

The following output is an example of process resource statistics information file.

process.cur

"Date","PID","CPUUtilization","MemoryPhysicalSize","ThreadCurrentCount","FileCurrentCount","ProcessName"

"2022/09/26 11:39:19.099","676","0","10149888","8","641",services.exe

"2022/09/26 11:39:19.114","688","0","13660160","7","940",C:\Windows\system32\lsass.exe

"2022/09/26 11:39:19.130","808","0","3674112","2","85",C:\Windows\system32\svchost.exe -k DcomLaunch -p -s PlugPlay

:

2.6. Cluster statistics information collection function¶

In the Config mode of Cluster WebUI, with the Cluster Statistics check box (open Cluster Properties -> the Statistics tab) checked, CSV text files are created containing information on the processing results and time of, for example, reception interval for heartbeat resources, group failovers, starting group resources, and monitoring processes by monitor resources. These files are hereinafter called cluster statistics information files.

For heartbeat resources

Information is outputted to the file for each heartbeat resource type. This function is supported by kernel mode LAN heartbeat resources.

[Heartbeat resource type].cur[Heartbeat resource type].precur

Indicates the latest information output destination.

pre

Indicates the previous, rotated, information output destination.

File location

<installation path>/perf/cluster/heartbeat/

For groups

group.curgroup.precur

Indicates the latest information output destination.

pre

Indicates the previous, rotated, information output destination.

File location

<installation path>/perf/cluster/group/

For group resources

The information for each type of group resource is output to the same file.

[Group resource type].cur[Group resource type].precur

Indicates the latest information output destination.

pre

Indicates the previous, rotated, information output destination.

File location

<installation path>/perf/cluster/group/

For monitor resources

The information for each type of monitor resources is output to the same file.

[Monitor resource type].cur[Monitor resource type].precur

Indicates the latest information output destination.

pre

Indicates the previous, rotated, information output destination.

File location

<installation path>/perf/cluster/monitor/

Note

Listed below are the timing to output the statistics information to the cluster statistics information file:

For heartbeat resources

Periodical output

For groups 1

When the group startup processing is completed

When the group stop processing is completed

When the group move processing is completed 2

When the failover processing is completed 2

For group resources

When the group resource startup processing is completed

When the group resource stop processing is completed

For monitor resources

When the monitor processing is completed

When the monitor status change processing is completed

The statistics information to be collected includes the following items:

For heartbeat resources

Statistic value name

Description

Date

Time when the statistics information is output.This is output in the form below (000 indicates millisecond):YYYY/MM/DD HH:MM:SS.000Name

Name of a heartbeat resource.

Type

Type of the heartbeat resource.

Local

Host name of the local server.

Remote

Host name of the other server.

RecvCount

Heartbeat reception count during the log output interval.

RecvError

Error reception count during the log output interval.

RecvTime(Min)

Minimum interval (in milliseconds) of heartbeat reception during the log output interval.

RecvTime(Max)

Maximum interval (in milliseconds) of heartbeat reception during the log output interval.

RecvTime(Avg)

Average interval (in milliseconds) of heartbeat reception during the log output interval.

SendCount

Heartbeat transmission count during the log output interval.

SendError

Error transmission count during the log output interval.

SendTime(Min)

Minimum time (in milliseconds) for heartbeat transmission during the log output interval.

SendTime(Max)

Maximum time (in milliseconds) for heartbeat transmission during the log output interval.

SendTime(Avg)

Average time (in milliseconds) for heartbeat transmission during the log output interval.

For others (except heartbeat resources)

Statistic value name |

Description |

|---|---|

Date |

Time when the statistics information is output.

This is output in the form below (000 indicates millisecond):

YYYY/MM/DD HH:MM:SS.000

|

Name |

Name of group, group resource or monitor resource. |

Action |

Name of the executed processing.

The following strings are output:

For groups: Start (at start), Stop (at stop), Move (at move), Failover (at failover)

For group resources: Start (at activation), Stop (at deactivation)

For monitor resources: Monitor (at monitor execution)

|

Result |

Name of the results of the executed processing.

The following strings are output:

When the processing was successful: Success (no errors detected in monitoring or activation/deactivation)

When the processing failed: Failure (errors detected in monitoring or activation/deactivation)

When a warning occurred: Warning (only for monitoring, in case of warning)

When a timeout occurred: Timeout (monitoring timeout)

When the processing was canceled: Cancel (canceling processings such as cluster shutdown during group startup)

|

ReturnCode |

Return value of the executed processing. |

StartTime |

Start time of the executed processing.

This is output in the form below (000 indicates millisecond):

YYYY/MM/DD HH:MM:SS.000

|

EndTime |

End time of the executed processing.

This is output in the form below (000 indicates millisecond):

YYYY/MM/DD HH:MM:SS.000

|

ResponseTime(ms) |

Time taken for executing the processing (in millisecond).

This is output in millisecond.

|

Here is an example of the statistics information file to be output when a group with the following configuration is started up:

Server - Host name: server1, server2

Heartbeat resource

- Kernel mode LAN heartbeat resourceResource name: lankhb1, lankhb2

Group

Group name: failoverA

Group resource which belongs to the group (failoverA)

- script resourceResource name: script01, script02, script03

lankhb.cur

"Date","Name","Type","Local","Remote","RecvCount","RecvError","RecvTime(Min)","RecvTime(Max)","RecvTime(Avg)","SendCount","SendError","SendTime(Min)","SendTime(Max)","SendTime(Avg)" "2018/12/18 09:35:36.237","lankhb1","lankhb","server1","server1","20","0","3000","3000","3000","20","0","0","0","0" "2018/12/18 09:35:36.237","lankhb1","lankhb","server1","server2","20","0","3000","3000","3000","20","0","0","0","0" "2018/12/18 09:35:36.237","lankhb2","lankhb","server1","server1","20","0","3000","3000","3000","20","0","0","0","0" "2018/12/18 09:35:36.237","lankhb2","lankhb","server1","server2","20","0","3000","3000","3000","20","0","0","0","0" :

group.cur

"Date","Name","Action","Result","ReturnCode","StartTime","EndTime","ResponseTime(ms)" "2018/12/19 09:44:16.925","failoverA","Start","Success",,"2018/12/19 09:44:09.785","2018/12/19 09:44:16.925","7140" :

script.cur

"Date","Name","Action","Result","ReturnCode","StartTime","EndTime","ResponseTime(ms)" "2018/12/19 09:44:14.845","script01","Start","Success",,"2018/12/19 09:44:09.807","2018/12/19 09:44:14.845","5040" "2018/12/19 09:44:15.877","script02","Start","Success",,"2018/12/19 09:44:14.847","2018/12/19 09:44:15.877","1030" "2018/12/19 09:44:16.920","script03","Start","Success",,"2018/12/19 09:44:15.880","2018/12/19 09:44:16.920","1040" :

2.6.1. Notes on the size of the cluster statistics information file¶

The number of cluster statistics information files to be generated differs depending on their configurations.Some configurations may cause a large number of files to be generated. Therefore, consider setting the size of the cluster statistics information file according to the configuration. The maximum size of the cluster statistics information file is calculated with the following formula:

The size of the cluster statistics information file =([Heartbeat resource file size] x [number of types of heartbeat resources which are set]) x (number of generations (2)) +([Group file size]) x (number of generations (2)) +([Group resource file size] x [number of types of group resources which are set]) x (number of generations (2)) +([Monitor resource file size] x [number of types of monitor resources which are set]) x (number of generations (2))Example: For the following configuration, the total maximum size of the cluster statistics information files to be saved is 332 MB with this calculation. ((((50MB) x 1) x 2) + (((1MB) x 2) + ((3MB x 5) x 2) + ((10MB x 10) x 2) = 332MB)

Number of heartbeat resource types: 1 (file size: 50 MB)

Group (file size: 1 MB)

Number of group resource types: 5 (file size: 3 MB)

Number of monitor resource types: 10 (file size: 10 MB)

2.7. Function for outputting the operation log of Cluster WebUI¶

If the Output Cluster WebUI Operation Log check box is already checked on the WebManager tab of Cluster Properties in the config mode of Cluster WebUI, the information on the operation of Cluster WebUI is outputted to the log file. This file is in CSV format, which is hereinafter called "the operation log file of Cluster WebUI.

cur

Indicates the last outputted log file.

pre<x>

Where to save

Directory as Log output path in the config mode of Cluster WebUI

The operation information to be outputted includes the following items:

Item name |

Description |

|---|---|

Date |

Time when the operation information is outputted.

This is outputted in the form below (000 in milliseconds):

YYYY/MM/DD HH:MM:SS.000

|

Operation |

Name of the executed operation in Cluster WebUI. |

Request |

Request URL issued from Cluster WebUI to the WebManager server. |

IP |

IP address of a client that operated Cluster WebUI. |

UserName |

Name of a user who executed the operation.

When a user logged in to Cluster WebUI by using the OS authentication method, the user name is output.

|

HTTP-Status |

HTTP status code.

200: Success

Other than 200: Failure

|

ErrorCode |

Return value of the executed operation. |

ResponseTime(ms) |

Time taken for executing the operation (in milliseconds).

This is outputted in milliseconds.

|

ServerName |

Name of a server to be operated.

Its server name or IP address is outputted.

It is outputted when the name of a server to be operated is specified.

|

GroupName |

Name of a group to be operated.

It is outputted when the name of a group to be operated is specified.

|

ResourceName |

Name of a resource to be operated.

Outputted is the heartbeat resource name, network partition resolution resource name, group resource name, or monitor resource name.

It is outputted when the name of a resource to be operated is specified.

|

ResourceType |

Type of a resource to be operated.

It is output when the type of a resource to be operated is specified.

|

Parameters... |

Operation-specific parameters. |

The following output is an example of the operation log file of Cluster WebUI:

"Date","Operation","Request","IP","UserName","HTTP-Status","ErrorCode","ResponseTime(ms)","ServerName","GroupName","ResourceName","ResourceType","Parameters..." "2020/08/14 17:08:39.902","Cluster properties","/GetClusterproInfo.js","10.0.0.15","user1",200,0,141,,,, "2020/08/14 17:08:46.659","Monitor properties","/GetMonitorResourceProperty.js","10.0.0.15","user1",200,0,47,,,"fipw1","fipw" "2020/08/14 17:15:31.093","Resource properties","/GetGroupResourceProperty.js","10.0.0.15","user1",200,0,47,,"failoverA","fip1","fip" "2020/08/14 17:15:45.309","Start group","/GroupStart.js","10.0.0.15","user1",200,0,0,"server1","failoverA",, "2020/08/14 17:16:23.862","Suspend all monitors","/AllMonitorSuspend.js","10.0.0.15","user1",200,0,453,"server1",,,,"server2" :

The following is an example of the operation log file of Cluster WebUI outputted when the authentication fails:

When the cluster password method is used

"Date","Operation","Request","IP","UserName","HTTP-Status","ErrorCode","ResponseTime(ms)","ServerName","GroupName","ResourceName","ResourceType","Parameters..." "2020/11/20 09:29:59.710","Login","/Login.js","10.0.0.15","",403,,0,,,,

When the OS authentication method is used

"Date","Operation","Request","IP","UserName","HTTP-Status","ErrorCode","ResponseTime(ms)","ServerName","GroupName","ResourceName","ResourceType","Parameters..." "2020/11/20 09:29:59.710","Login User","/LoginUser.js","10.0.0.15","user1",401,,0,,,,

2.8. Function for outputting an API service operation log file¶

With the Output API Service Operation Log checkbox checked in the API tab of Cluster Properties in the config mode of Cluster WebUI, a log file is outputted containing information handled by the RESTful API. This CSV-format file is hereinafter called an API service operation log file.

cur

Indicates the last outputted log file.

pre<x>

Where to save

Directory as Log output path in the config mode of Cluster WebUI

The operation information to be outputted includes the following items:

Item name |

Description |

|---|---|

Date |

Time when the operation information is outputted.

This is outputted in the form below (000 in milliseconds):

YYYY/MM/DD HH:MM:SS.000

|

Method |

Either of the following HTTP request methods: GET or POST. |

Request |

Issued request-URI. |

IP |

IP address of the client which issued the request. |

UserName |

Name of a user who executed the operation. |

HTTP-Status |

HTTP status code.

200: Success

Other than 200: Failure

|

ErrorCode |

Return value of the executed operation. |

ResponseTime(ms) |

Time taken for executing the operation (in milliseconds).

This is outputted in milliseconds.

|

Here is an example of the contents of an outputted API service operation log file:

"Date","Method","Request","IP","UserName","HTTP-Status","ErrorCode","ResponseTime(ms)" "2023/05/28 16:34:08.007","GET","https://10.0.0.1:29009/api/v1/cluster","10.0.0.15","user1",200,0,84 "2023/05/28 16:34:08.007","GET","https://10.0.0.1:29009/api/v1/servers/servers?select=name","10.0.0.15","user1",200,0,84 "2023/05/28 16:35:03.283","POST","https://10.0.0.1:29009/api/v1/cluster/start","10.0.0.15","user1",200,0,142 "2023/05/28 16:35:03.283","POST","https://10.0.0.1:29009/api/v1/groups/failoverA/start -d '{ "target" : "server1" }'","10.0.0.15","user1",200,0,142 "2023/05/28 16:35:03.283","POST","https://10.0.0.1:29009/api/v1/resources/fip1/start -d '{ "target" : "server1" }'","10.0.0.15","user1",200,0,142 "2023/05/28 16:35:03.283","POST","https://10.0.0.1:29009/api/v1/monitors/fipw1/suspend -d '{ "target" : "server1" }'","10.0.0.15","root",200,0,142 :

2.9. Function for obtaining a log file for investigation¶

If an activation failure occurred in a group/monitor resource or a forced-stop resource failed in a forced stop, such information is collected and saved as a compressed file to the following directory: <installation path>\log\ecap. The format of the file name is <date and time when the event occurred>_<module name>_<event ID>.zip.

You can obtain this log file through Cluster WebUI. To do so, in the config mode of Cluster WebUI, go to Cluster Properties -> the Alert Log tab, then check the Enable a log file for investigation to be downloaded.

The compressed file contains the output of an executed command shared by resource types and that of one specific to a resource type.

Output of an executed command shared by resource types

The output is stored as a text file in the common folder.

Information to be collected is as follows:

The same information as that described in "Reference Guide" -> "EXPRESSCLUSTER command reference" -> "Collecting logs (clplogcc command)" -> "Collecting information when a failure occurs" -> "Information created by running a command"

The dynamic port range for TCP/IP

Volume information

CPU usage (Win32_PerfFormattedData_PerfOS_Processor class)

Memory usage (Win32_OperatingSystem class)

Output of an executed command specific to a resource type

The output is stored as a text file in Markdown format: <resource type>.ecap.md.

This is outputted by executing the following command specific to a resource type (even if this command does not exist, the command shared by resource types is run):

Resource type

Command name

Necessary package

Floating IP resource

arp -a

None

ping -w 3 <the IP address>

None

Dynamic DNS resource

nslookup -timeout=3 <the virtual host name>

None

dig any +time=3 <the virtual host name>

BIND

ipconfig /displaydns

None

NIC Link Up/Down monitor resource

ping -w 3 <the IP address>

None

Floating IP monitor resource

arp -a

None

ping -w 3 <the IP address>

None

Dynamic DNS monitor resource

nslookup -timeout=3 <the virtual host name>

None

dig any +time=3 <the virtual host name>

BIND

ipconfig /displaydns

None

Note

The log file for investigation may not be appropriately obtained, if the same event and the same module occurred more than once at the same period of time.

2.10. Communication ports¶

EXPRESSCLUSTER uses the following port numbers by default. You can change these port numbers by using the Cluster WebUI. Make sure that the programs other than EXPRESSCLUSTER do not access these port numbers.

To set up a firewall for the server, make sure that the following port numbers can be accessed.

For a cloud environment, allow access to ports numbered as below, not only in a firewall configuration at the instance side but also in a security configuration at the cloud infrastructure side.

For port numbers EXPRESSCLUSTER uses, refer to "Getting Started Guide" > "Notes and Restrictions" > "Before installing EXPRESSCLUSTER" > "Communication port number".

2.11. Limit on the band for mirror disk connect communication¶

You can set a limit on the communication band used for mirror disk connect communication by using the standard Windows Local Group Policy Editor (Policy-based QoS). A limit is set for each mirror disk connect. This method is useful for setting a limit on the communication band for all mirror disk resources or hybrid disk resources using the specified mirror disk connect.

2.11.1. Procedure for setting a limit on the band for mirror disk connect communication¶

To set a limit on the band for mirror disk connect communication, follow the procedure described below.

Setting the properties of a network adapter

Click Start, Control Panel, then Network and Sharing Center. Then, open Properties for a mirror disk connect.

Check the Qos Packet Scheduler check box when it is in Properties.

Click Install, Services, and then Add buttons to select QoS Packet Scheduler when it is not in Properties.

- Starting the Local Group Policy EditorTo set a limit on the band, use the Local Group Policy Editor. From the Start menu, click Run, and then execute the following command:

gpedit.msc

Creating a policy

Create a policy for a limit on the band. In the left pane, click Local Computer Policy, Computer Configuration, then Windows Settings, and then right-click Policy-based QoS and select Create New Policy.

Policy-based QoS - Create a QoS policy window

Set items as follows.

Policy name

Enter a policy name for identification.

Specify DSCP value

Set the IP priority. This setting is optional. For details, see Learn more about QoS Policies.

Specify Outbound Throttle Rate

Check the Specify Outbound Throttle Rate check box. Specify an upper limit on the communication band used for the mirror disk connect in units of KBps (kilobytes per second) or MBps (megabytes per second).

After setting the required items, click the Next button.

Policy-based QoS - This QoS policy applies to: window

Set this item as follows.

This QoS policy applies to: (application specification)

Select All applications.

After setting the required items, click the Next button.

Policy-based QoS - Specify the source and destination IP addresses. window

Set these items as follows.

This QoS policy applies to: (source IP address specification)

Select Only for the following source IP address or prefix and then enter the source IP address used for the mirror disk connect.

This QoS policy applies to: (destination IP address specification)

Select Only for the following destination IP address or prefix and then enter the destination IP address used for the mirror disk connect.

After setting the required items, click the Next button.

Policy-based QoS - Specify the protocol and port numbers. window

Set these items as follows.

Select the protocol this QoS policy applies to (S)

Select TCP.

Specify the source port number:

Select From any source port.

Specify the destination port number:

Select To this destination port number or range and then specify the mirror driver port number (default: 29005).

- Reflecting the policyClick the Finish button to apply the settings. The set policy is not immediately reflected, but according to the automatic policy update interval (default: within 90 minutes). To reflect the set policy immediately, update the policy manually. From the Start menu, click Run, and then execute the following command:

gpupdate /force

This completes the setting of a policy.

2.11.2. Procedure for suspending or releasing the limit on the band for mirror disk connect communication¶

To suspend or release the limit on the band for mirror disk connect communication, follow the procedure described below.

- Starting the Local Group Policy EditorTo suspend or release the limit on a band, use the Local Group Policy Editor. From the Start menu, click Run, and then execute the following command:

gpedit.msc

Suspending a policy by changing its setting or deleting the policy

- To suspend a limit on the bandTo suspend a limit on the band, change the setting for the policy for the limit on the band. Right-click the target QoS policy and then choose Edit Existing Policy. Then, uncheck the Specify Outbound Throttle Rate check box.After making this setting, click the OK button.

- To release a limit on the bandTo release a limit on the band, delete the policy for the limit on the band. Right-click the target QoS policy and then choose Delete Policy. The pop-up message "Are you sure you want to delete the policy?" appears. Click Yes.

- Reflecting the policyThe modification or deletion of a policy is not immediately reflected, but according to the automatic policy update interval (default: within 90 minutes). To reflect the deletion or modification immediately, update the policy manually. From the Start menu, click Run, and then execute the following command:

gpupdate /force

This completes the setting of a policy.

2.12. What causes EXPRESSCLUSTER to shut down servers¶

When any one of the following errors occurs, EXPRESSCLUSTER shuts down or resets servers to protect resources.

2.12.1. Final action for an error in group resource activation or deactivation¶

When one of the following is specified as the final action to be taken for errors in resource activation/deactivation:

Final action |

Result |

|---|---|

The cluster service stops and the OS shuts down. |

Causes normal shutdown after the group resources stop. |

The cluster service stops and the OS reboots. |

Causes normal reboot after the group resources stop. |

An intentional stop error is generated |

Causes a stop error (Panic) intentionally upon group resource activation/deactivation error. |

2.12.2. Action for a stall of resource activation or deactivation¶

When one of the following is specified as the action to be taken for a stall of resource activation or deactivation, and resource activation or deactivation took longer time than expected:

Action for a stall |

Result |

|---|---|

Emergency shutdown |

Causes the OS to shut down upon the stall of group resource activation or deactivation. |

Intended generation of a stop error |

Causes a stop error (Panic) upon the stall of group resource activation or deactivation. |

The OS shuts down if the resource activation or deactivation takes an unexpectedly long time. The OS shuts down, regardless of the setting of recovery in the event of a resource activation or deactivation error.

If a resource activation stall occurs, the following message is output to the event log and as an alert message.

Module type: rc

Event ID: 1032

Message: Failed to start the resource %1. (99 : command is timeout)

Description: Resource start failure

If a resource deactivation stall occurs, the following message is output to the event log and as an alert message.

Module type: rc

Event ID: 1042

Message: Failed to stop the resource %1. (99 : command is timeout)

Description: Resource stop failure

2.12.3. Final action at detection of an error in monitor resource¶

When the final action for errors in monitor resource monitoring is specified as one of the following:

Final action |

Result |

|---|---|

Stop cluster service and shut down the OS |

Causes normal shutdown after the group resources stop. |

Stop cluster service and reboot the OS |

Causes normal reboot after the group resources stop. |

An intentional stop error is generated |

Causes a stop error (Panic) intentionally upon monitor resource error detection. |

2.12.4. Forced stop action¶

When the type of forced stop is configured as BMC:

Forced stop action

Result

BMC reset

Causes reset in the failing server where the failover group existed.

BMC power off

Causes power off in the failing server where the failover group existed.

BMC power cycle

Causes power cycle in the failing server where the failover group existed.

BMC NMI

Causes NMI in the failing server where the failover group existed.

When the type of forced stop is configured as vCenter:

Forced stop action

Result

Power off

Causes power off in the failing server where the failover group existed.

Reset

Causes reset in the failing server where the failover group existed.

When the type of forced stop is configured as AWS or OCI:

Forced stop action

Result

stop

Stops the instance of the failing server where the failover group existed.

reboot

Reboots the instance of the failing server where the failover group existed.

When the type of forced stop is configured as Azure:

2.12.5. Emergency server shutdown¶

When the following processes terminated abnormally, clustering can not work properly. Then EXPRESSCLUSTER shuts down the server on which those processes terminated. This action is called emergency server shutdown.

clprc.exe

Server shut down method can be configured in Action When the Cluster Service Process is Abnormal of Cluster Properties from the config mode of Cluster WebUI. Following method can be set.

2.12.6. Resource deactivation error in stopping the EXPRESSCLUSTER Server service¶

If there is a failure to deactivate the resource during the EXPRESSCLUSTER Server service stop process, the action set in [Action When the Cluster Service Process Is Abnormal] is executed.

2.12.7. Recovery from network partitioning¶

If all heartbeats are disrupted, network partitioning resolution takes place which results in one or all of the servers to shut down. Unless the automatic recovery mode is set in Cluster Properties, the server is in the Suspension (Isolated) status and is not clustered after reboot.

When you resolve the problem that caused the disruption of heartbeats, recover the cluster.

For details on network partitioning, see "Details on network partition resolution resources" in the "Reference Guide".

For information on the suspended status (restart following a shutdown) and cluster recovery, see the online manual "Functions of the WebManager" in this guide.

2.12.8. Emergency server restart¶

When an abnormal termination is detected in the following processes, EXPRESSCLUSTER reboots the OS. This action is called Emergency server restart.

EXPRESSCLUSTER Disk Agent (clpdiskagent.exe)

EXPRESSCLUSTER Server (clppmsvc.exe)

EXPRESSCLUSTER Transaction (clptrnsv.exe)

2.12.9. Failure in suspending or resuming the cluster¶

If suspending or resuming the cluster fails, the server is shutdown.

2.13. Configuring the settings to temporarily prevent execution of failover¶

Follow the steps below to temporarily prevent failover caused by a failed server from occurring.

(Example) To (temporarily) extend the heartbeat time-out to 3600 seconds (one hour) from the current time when the heartbeat time-out is set to 90 seconds:

clptoratio -r 40 -t 1h

Follow the steps below to temporarily prevent failover caused by a monitor error by temporarily stopping monitor resource monitoring.

(Example) To suspend all monitoring operations on the server in which the command is run:

clpmonctrl -s(Example) To suspend all monitoring operations on the server with -h option specified

clpmonctrl -s -h <server name>(Example) Resuming all monitoring operations on the server in which the command is run:

clpmonctrl -r(Example) To resume all monitoring operations on the server with -h option specified

clpmonctrl -r -h <server name>

Follow the steps below to temporarily prevent failover caused by a monitor error by disabling recovery action for a monitor resource error.

Follow the steps below to temporarily prevent failover caused by an activation error by disabling recovery action for a group resource activation error.

2.14. How to execute chkdsk/defrag¶

2.14.2. How to execute chkdsk/defrag on a mirror/hybrid disk¶

When executing chkdsk or defrag on a partition configured as a mirror disk resource, the procedure differs depending on whether the server is an active server or a standby server.

How to execute chkdsk/defrag on an active server (mirror/hybrid disk)

Refer to "How to execute chkdsk/defrag on a shared disk"

How to execute chkdsk/defrag on a standby server (mirror disk)

If you perform a chkdsk or defragmentation in restoration mode on the standby server, mirror copy overwrites partitions established as mirror disks on the active disk image, and the file system fails to be restored or optimized. This section describes the procedure for chkdsk in order to check media errors.

Suspend the mdw monitor resources temporarily by using the Cluster WebUI or the clpmonctrl command.

(Example)

clpmonctrl -s -m <mdw monitor name>Isolate the target mirror disk resource.

(Example)

clpmdctrl --break <md resource name>Enable access to the mirror disk .

(Example)

clpmdctrl --active <md resource name> -fExecute chkdsk or defrag on the target partition from the command prompt.

Important

If the message "chkdsk cannot run because the volume is being used by another process. Would you like to schedule this volume to be checked the next time the system restarts? (Y/N)" appears, select "N".

Disable access to the mirror disk.

(Example)

clpmdctrl --deactive <md resource name>Resume the mdw monitor resources by using the Cluster WebUI or the clpmonctrl command.

(Example)

clpmonctrl -r -m <mdw monitor name>If automatic mirror recovery is disabled, perform mirror recovery manually from Mirror Disks.

How to execute chkdsk/defrag on a standby server (hybrid disk)

If you perform a chkdsk or defragmentation in restoration mode on the standby server, mirror copy overwrites partitions established as hybrid disks on the active disk image, and the file system fails to be restored or optimized. This section describes the procedure for chkdsk in order to check media errors.

Suspend the hdw monitor resources temporarily by using the Cluster WebUI or the clpmonctrl command.

(Example)

clpmonctrl -s -m <hdw monitor name>Isolate and enable access to the target hybrid disk resource.

(Example)

clphdsnapshot --open <hd resource name>Execute chkdsk or defrag on the target partition from the command prompt.

Important

If the message "chkdsk cannot run because the volume is being used by another process. Would you like to schedule this volume to be checked the next time the system restarts? (Y/N)" appears, select "N".

Disable access to the mirror disk ..

(Example)

clphdsnapshot --closeResume the mdw monitor resources by using the Cluster WebUI or the clpmonctrl command.

(Example)

clpmonctrl -r -m <hdw monitor name>If automatic mirror recovery is disabled, perform mirror recovery manually from Mirror Disks.

2.15. How to replace a server with a new one¶

When you replace a server in a cluster environment, follow the instructions below:

Set up a new server in the same way as the failed server.

When using a shared disk, do not connect the new server to the shared disk yet.

Set the same computer name and IP address as the failed server.

Register the EXPRESSCLUSTER license and apply updates as they have been registered and applied before.

If there were cluster partition and/or data partition of a mirror disk or hybrid disk on the local disk of the failing server, allocate these partitions and assign drive letters for them as they were configured in the failing server. When you use the disk of the failing server, configure drive letters for the partitions, though allocating partitions is not necessary.

When using a shared disk, set the SCSI controller or the HBA that is connected to the shared disk to be filtered in Filter Settings of Shared Disk upon installing the EXPRESSCLUSTER Server.

After the setup, shut it down and power it off.

Important

In Filter Settings of Shared Disk, set the SCSI controller or the HBA that is connected to the shared disk to be filtered. If the new server is connected to the shared disk when it has not been set to be filtered, data on the shared disk may be corrupted.

If the failed server is still running, shut it down and remove it from the shared disk and the LAN, and make sure other servers in the cluster are working properly. (Ignore errors caused by the failed server being stopped.)

Start the new server while it is connected to the LAN. When using a shared disk, start the server while it is also connected to the shared disk.

When using the shared disk, on the new server, use Disk Management (On the Start menu, point to Settings, and click Control Panel. Double-click Administrative Tools and then Computer Management, and click Disk Management.) to confirm that the shared disk is visible, and set the same drive letter as the failed server.

At this point, access to the shared disk is controlled, so the disk data cannot be referred.

Connect to a server in normal operation in the cluster by using the Web browser to start the config mode of Cluster WebUI. When using a shared disk, click Properties, HBA tab and Connect on the new server to check or modify the information on HBA and partitions.

Important

On the HBA tab of Properties of the new server, set the SCSI controller or the HBA that is connected to the shared disk to be filtered. If the shared disk is connected when it has not been set to be filtered, data on the shared disk may be corrupted.

When there is any mirror disk resource or hybrid disk resource in the resources used in the new server, stop the failover group containing these resources from the operation mode of Cluster WebUI.

Run "clpcl --suspend --force" from the command prompt on the server in normal operation in the cluster and suspend the cluster.

A server is recognized to have stopped, so the cluster cannot be suspended from the Cluster WebUI.

Select Apply the settings from the File menu in the Builder to apply the cluster configuration data .on the cluster.

When the message "The disk information in the cluster configuration data differs from that in the server. Do you want the inconsistency to be automatically corrected?" appears, select Yes.

If you use a fixed term license, run the following command:

clplcnsc --reregister <a folder path for saved license files>

Resume the cluster from the operation mode of Cluster WebUI. If you stopped any group in step 6, start it.

Note

If you resume the cluster from the Cluster WebUI, the error message "Failed to resume the cluster. Click the Refresh data button, or try again later." is displayed, but ignore it. This is displayed because the new server has not been suspended.

Reboot the OS on the new server.

When Off is selected for Auto Return in Extension tab of Cluster Properties, click Recover Server of the server where EXPRESSCLUSTER has been reinstalled in the operation mode of Cluster WebUI.

When a mirror disk resource or hybrid disk resource exists in the resources used in the new server and the Auto mirror recovery check box is not selected in Mirror Disk tab of Properties of the cluster, copy the mirror disk or hybrid disk fully from Mirror Disks.

Important

If the server that operates in another mirror disk type cluster is replaced with a new server, differential copy is executed automatically. After differential copy is completed, perform full copy manually. If you do not perform full copy, a mirror disk data inconsistency will occur.

Move group as necessary. When mirror disk or hybrid disk is being fully copied, complete copying before moving.

2.16. Wait time for synchronized cluster startup¶

Even all servers in a cluster are powered on simultaneously, it does not always mean that EXPRESSCLUSTER will start up simultaneously on all servers. EXPRESSCLUSTER may not start up simultaneously after rebooting the cluster following shutdown. Because of this, one server waits for other servers in the cluster to start.

By default, 5 minutes is set to the startup synchronization time. To change the default value, click Cluster Properties in the Cluster WebUI, click the Timeout tab, and select Synchronize Wait Time.

For more information, see "Timeout tab" in "Parameter details" in the "Reference Guide".

2.17. Changing the server configuration (add/delete)¶

2.17.1. Adding a server (mirror disk or hybrid disk is not used)¶

To add a server, follow the steps below:

Important

When adding a server in changing the cluster configuration, do not make any other changes such as adding a group resource.

- The additional server license must be registered.To register licenses, refer to "Installation and Configuration Guide" > "Registering the license".

Make sure that the cluster is working properly.

Start the server to add. For using the shared disk, make sure the server to add is not connected to the shared disk and then start the server to add.

Important

To use the shared disk, do not connect the server to the shared disk before setting it up and powering it off. Data on the shared disk may be corrupted.

Configure the settings that should be done before setting up the EXPRESSCLUSTER Server on the server to add. However, to use the shared disk, do not configure the settings for the disk in this step.

See also

As for the settings to be configured before the setup, see "Settings after configuring hardware" of "Determining a system configuration" in "Installation and Configuration Guide".

Set up the EXPRESSCLUSTER Server to the server to add. Enter the port numbers of the Cluster WebUI and the disk agent. Configure the same settings for the port number as the server that has been already set up. To use the shared disk, set the HBA that is connected to the shared disk to be filtered. Register the license as necessary. After the setup, shut down the server to add and power it off.

Important

If the shared disk is not set to be filtered in Filter Settings of Shared Disk when setting up the EXPRESSCLUSTER Server, do not connect to the shared disk even after the setup completes. Data on the shared disk may be corrupted. Reinstall EXPRESSCLUSTER and set the shared disk to be filtered.

Start the server to add. To use the shared disk, connect the disk to the server to add at first, and then start the server.

To use the shared disk, configure the settings for the disk on the server to add.

Use Disk Management (On the start menu, point to Settings, and click Control Panel. Double-click Administrative Tools and then Computer Management, and click Disk Management.) to confirm that the shared disk is visible.