8. EXPRESSCLUSTER command reference

This chapter describes commands that are used on EXPRESSCLUSTER.

This chapter covers:

8.9. Changing, backing up, and checking cluster configuration data (clpcfctrl command)

8.15.1. Displaying the hybrid disk status (clphdstat command)

8.22. Controlling chassis identify lamp (clpledctrl command)

8.24. Requesting processing to cluster servers (clprexec command)

8.26. Controlling cluster activation synchronization wait processing (clpbwctrl command)

8.27. Estimating the amount of resource usage (clpprer command)

8.29. Controlling the rest point of DB2 (clpdb2still command)

8.30. Controlling the rest point of MySQL (clpmysqlstill command)

8.31. Controlling the rest point of Oracle (clporclstill command)

8.32. Controlling the rest point of PostgreSQL (clppsqlstill command)

8.33. Controlling the rest point of SQL Server (clpmssqlstill command)

8.34. Controlling the rest point of Sybase (clpsybasestill command)

8.35. Displaying the cluster statistics information (clpperfc command)

8.36. Checking the cluster configuration information (clpcfchk command)

8.1. Operating the cluster from the command line

EXPRESSCLUSTER provides various commands for operating a cluster from the command line. These commands are useful when, for example, you construct a cluster or cannot use the Cluster WebUI. The command line also supports more operations than the Cluster WebUI.

Note

Suppose a group resource (for example, a disk resource or an exec resource) is configured as a recovery target in a monitor resource's error detection settings, and that monitor resource detects an error. In that case, do not perform the following actions while recovery (reactivation -> failover -> final action) is in progress. This applies both to the corresponding commands and to the Cluster WebUI.

- Terminating or suspending the cluster
- Starting, terminating, or migrating a group

If you perform any of these actions while recovery triggered by a monitor resource's error detection is in progress, other group resources in that group may fail to terminate. However, once the final action has been executed, you can perform these actions even if a monitor resource has detected an error.

Important

The installation directory contains executable files and script files that are not listed in this guide. Do not execute these files from any program or application other than EXPRESSCLUSTER. Problems caused by executing them outside EXPRESSCLUSTER are not supported.

8.2. EXPRESSCLUSTER commands

Commands for configuring a cluster

| Command | Description |
|---|---|
| clpcfctrl | Distributes configuration data created by the Cluster WebUI to servers. Backs up the cluster configuration data to be used by the Cluster WebUI. |
| clplcnsc | Manages the product or trial version license of this product. |
| clpcfchk | Checks the cluster configuration data. |

Commands for displaying status

| Command | Description |
|---|---|
| clpstat | Displays the cluster status and configuration information. |
| clphealthchk | Checks the process health. |

Commands for cluster operation

| Command | Description |
|---|---|
| clpcl | Starts, stops, suspends, or resumes the EXPRESSCLUSTER daemon. |
| clpdown | Stops the EXPRESSCLUSTER daemon and shuts down the server. |
| clpstdn | Stops the EXPRESSCLUSTER daemon across the whole cluster and shuts down all servers. |
| clpgrp | Starts, stops, or moves groups. This command also migrates the virtual machine. |
| clptoratio | Extends or displays the various time-out values of all servers in the cluster. |
| clproset | Modifies and displays the I/O permission of a shared disk partition device. |
| clpmonctrl | Controls monitor resources. |
| clpregctrl | Displays or initializes the reboot count on a single server. |
| clprsc | Stops or resumes group resources. |
| clpcpufreq | Controls CPU frequency. |
| clpledctrl | Controls the chassis identify function. |
| clptrnreq | Requests a server to execute a process. |
| clprexec | Requests that an EXPRESSCLUSTER server execute a process from external monitoring. |
| clpbmccnf | Changes the BMC user name and password information. |
| clpbwctrl | Controls the cluster activation synchronization wait processing. |

Log-related commands

| Command | Description |
|---|---|
| clplogcc | Collects logs and OS information. |
| clplogcf | Modifies and displays the log level and log output file size settings. |
| clpperfc | Displays the cluster statistics data about groups and monitor resources. |

Script-related commands

| Command | Description |
|---|---|
| clplogcmd | Writes text from an exec resource script to output a desired message to the output destination. |

Mirror-related commands (when the Replicator is used)

| Command | Description |
|---|---|
| clpmdstat | Displays a mirroring status and configuration information. |
| clpmdctrl | Activates/deactivates a mirror disk resource, or recovers a mirror. Displays or modifies the maximum number of request queues. |
| clpmdinit | Initializes the cluster partition of a mirror disk resource. Creates a file system on the data partition of a mirror disk resource. |

Hybrid disk-related commands (when the Replicator DR is used)

| Command | Description |
|---|---|
| clphdstat | Displays the hybrid disk status and configuration information. |
| clphdctrl | Activates/deactivates a hybrid disk resource, or recovers a mirror. Displays or modifies the maximum number of request queues. |
| clphdinit | Initializes the cluster partition of a hybrid disk resource. |

System monitor-related commands (when the System Resource Agent is used)

| Command | Description |
|---|---|
| clpprer | Estimates future values from the trend in the given resource usage data. |

DB rest point-related commands

| Command | Description |
|---|---|
| clpdb2still | Controls the securing/release of the rest point of DB2. |
| clpmysqlstill | Controls the securing/release of the rest point of MySQL. |
| clporclstill | Controls the securing/release of the rest point of Oracle. |
| clppsqlstill | Controls the securing/release of the rest point of PostgreSQL. |
| clpmssqlstill | Controls the securing/release of the rest point of SQL Server. |
| clpsybasestill | Controls the securing/release of the rest point of Sybase. |

Other commands

| Command | Description |
|---|---|
| clplamp | Turns off the warning light of the specified server. |

8.3. Displaying the cluster status (clpstat command)

The clpstat command displays cluster status and configuration information.

Command line

    clpstat -s [--long] [-h hostname]
    clpstat -g [-h hostname]
    clpstat -m [-h hostname]
    clpstat -n [-h hostname]
    clpstat -p [-h hostname]
    clpstat -i [--detail] [-h hostname]
    clpstat --cl [--detail] [-h hostname]
    clpstat --sv [server_name] [--detail] [-h hostname]
    clpstat --hb [hb_name] [--detail] [-h hostname]
    clpstat --np [np_name] [--detail] [-h hostname]
    clpstat --svg [servergroup_name] [--detail] [-h hostname]
    clpstat --grp [group_name] [--detail] [-h hostname]
    clpstat --rsc [resource_name] [--detail] [-h hostname]
    clpstat --mon [monitor_name] [--detail] [-h hostname]
    clpstat --xcl [xclname] [--detail] [-h hostname]
    clpstat --local

Description

This command displays the cluster status and configuration data.

Option

| Option | Description |
|---|---|
| -s, or no option | Displays the cluster status. |
| --long | Displays cluster names and resource names in full, without truncating them. |
| -g | Displays a cluster group map. |
| -m | Displays the status of each monitor resource on each server. |
| -n | Displays the status of each heartbeat resource on each server. |
| -p | Displays the status of the network partition resolution resources on each server. |
| -i | Displays the configuration information of the whole cluster. |
| --cl | Displays the cluster configuration data. When the Replicator or Replicator DR is used, Mirror Agent information is displayed as well. |
| --sv [server_name] | Displays server configuration information. By specifying the name of a server, you can display only the information on the specified server. |
| --hb [hb_name] | Displays heartbeat resource configuration information. By specifying the name of a heartbeat resource, you can display only the information on the specified heartbeat resource. |
| --np [np_name] | Displays network partition resolution resource configuration information. By specifying the name of a network partition resolution resource, you can display only the information on the specified network partition resolution resource. |
| --svg [servergroup_name] | Displays server group configuration information. By specifying the name of a server group, you can display only the information on the specified server group. |
| --grp [group_name] | Displays group configuration information. By specifying the name of a group, you can display only the information on the specified group. |
| --rsc [resource_name] | Displays group resource configuration information. By specifying the name of a group resource, you can display only the information on the specified group resource. |
| --mon [monitor_name] | Displays monitor resource configuration information. By specifying the name of a monitor resource, you can display only the information on the specified monitor resource. |
| --xcl [xclname] | Displays exclusion rule configuration information. By specifying the name of an exclusion rule, you can display only the information on the specified exclusion rule. |
| --detail | Displays more detailed information on the settings. |
| -h hostname | Acquires information from the server specified by hostname. When the -h option is omitted, information is acquired from the server on which the command is run (the local server). |
| --local | Displays the cluster status. This option displays the same information as the -s option (or no option), but only for the server on which the command is executed, without communicating with other servers. |

Return Value

When the -s option is not specified:

| Value | Description |
|---|---|
| 0 | Success |
| 9 | The command was run in duplicate. |
| Other than the above | Failure |
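Scripts that call clpstat without -s can check these return values directly. The following is a minimal POSIX shell sketch that relies only on the documented values: 0, 9, and anything else. The function name clpstat_checked and the CLPSTAT override variable are hypothetical. They exist only so the wrapper can be exercised without a cluster.

```shell
# Hypothetical wrapper around clpstat for invocations WITHOUT -s,
# for which the documented return values are:
#   0 = success, 9 = the command was run in duplicate, other = failure.
# CLPSTAT (hypothetical) lets a test substitute another command.
clpstat_checked() {
    "${CLPSTAT:-clpstat}" "$@"
    rc=$?
    case $rc in
        0) ;;                                            # success: output already printed
        9) echo "clpstat: command was run in duplicate (rc=9)" >&2 ;;
        *) echo "clpstat: failed (rc=$rc)" >&2 ;;
    esac
    return "$rc"                                         # pass the original code through
}
```

For example, `clpstat_checked -i --detail > cluster-info.txt` saves the listing and reports a duplicate run or a failure on standard error.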

Remarks

Configuration information is displayed in various forms, depending on the combination of options.

Notes

Run this command as the root user.

The cluster daemon must be running on the server where you run this command.

When you specify a server name for the -h option, that server must belong to the cluster.

For the language used for command output, see "Cluster properties - Info tab" in "2. Parameter details" in this guide.

When you run the clpstat command with the -s option or without any option, long names such as cluster or resource names may be truncated. To display them in full, use the --long option.

Example of Execution

Examples of the information displayed by these commands are provided in the following topics.

Error Messages

| Message | Cause/Solution |
|---|---|
| Log in as root. | Log in as the root user. |
| Invalid configuration file. Create valid cluster configuration data. | Create valid cluster configuration data by using the Cluster WebUI. |
| Invalid option. | Specify a valid option. |
| Could not connect to the server. Check if the cluster daemon is active. | Check whether the cluster daemon is running. |
| Invalid server status. | Check whether the cluster daemon is running. |
| Server is not active. Check if the cluster daemon is active. | Check whether the cluster daemon is running. |
| Invalid server name. Specify a valid server name in the cluster. | Specify the name of a valid server in the cluster. |
| Invalid heartbeat resource name. Specify a valid heartbeat resource name in the cluster. | Specify the name of a valid heartbeat resource in the cluster. |
| Invalid network partition resource name. Specify a valid network partition resource name in the cluster. | Specify the name of a valid network partition resolution resource in the cluster. |
| Invalid group name. Specify a valid group name in the cluster. | Specify the name of a valid group in the cluster. |
| Invalid group resource name. Specify a valid group resource name in the cluster. | Specify the name of a valid group resource in the cluster. |
| Invalid monitor resource name. Specify a valid monitor resource name in the cluster. | Specify the name of a valid monitor resource in the cluster. |
| Connection was lost. Check if there is a server where the cluster daemon is stopped in the cluster. | Check whether the cluster daemon has stopped on any server in the cluster. |
| Invalid parameter. | The value specified as a command parameter may be invalid. |
| Internal communication timeout has occurred in the cluster server. If it occurs frequently, set a longer timeout. | A time-out occurred in EXPRESSCLUSTER internal communication. If time-outs keep occurring, set a longer internal communication time-out. |
| Internal error. Check if memory or OS resources are sufficient. | Check whether memory and OS resources are sufficient. |
| Invalid server group name. Specify a valid server group name in the cluster. | Specify the name of a valid server group in the cluster. |
| The cluster is not created. | Create and apply the cluster configuration data. |
| Could not connect to the server. Internal error. Check if memory or OS resources are sufficient. | Check whether memory and OS resources are sufficient. |
| Cluster is stopped. Check if the cluster daemon is active. | Check whether the cluster daemon is running. |
| Cluster is suspended. To display the cluster status, use --local option. | The cluster is suspended. Use the --local option to display the cluster status. |
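A script that wants the cluster status can react to the last message in the table by retrying with --local. This is a sketch that assumes clpstat prints the English message text shown above. That text depends on the configured output language. The sketch captures both standard output and standard error, because this guide does not say which stream carries the message. It keys on text rather than on the exit code, because return values are documented only for invocations without -s. The function name clpstat_status and the CLPSTAT variable are hypothetical.

```shell
# Hypothetical helper: show the cluster status, falling back to the
# --local view when clpstat reports that the cluster is suspended.
# CLPSTAT (hypothetical) lets a test substitute another command.
clpstat_status() {
    out=$("${CLPSTAT:-clpstat}" -s 2>&1)   # capture both streams
    rc=$?
    case $out in
        *"Cluster is suspended"*)
            # Suspended: show only this server's view, without contacting others.
            "${CLPSTAT:-clpstat}" --local ;;
        *)
            printf '%s\n' "$out"
            return "$rc" ;;
    esac
}
```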

8.3.1. Common entry examples

8.3.2. Displaying the status of the cluster (-s option)

The following is an example of the display when you run the clpstat command with the -s option or without any option:

Example of a command entry:

    # clpstat -s

Example of the display after running the command:

    ===================== CLUSTER STATUS ======================
     Cluster : cluster
     <server>
      *server1............: Online    server1
         lanhb1           : Normal    LAN Heartbeat
         lanhb2           : Normal    LAN Heartbeat
         diskhb1          : Normal    Disk Heartbeat
         comhb1           : Normal    COM Heartbeat
         witnesshb1       : Normal    Witness Heartbeat
         pingnp1          : Normal    ping resolution
         pingnp2          : Normal    ping resolution
         httpnp1          : Normal    http resolution
      server2.............: Online    server2
         lanhb1           : Normal    LAN Heartbeat
         lanhb2           : Normal    LAN Heartbeat
         diskhb1          : Normal    Disk Heartbeat
         comhb1           : Normal    COM Heartbeat
         witnesshb1       : Normal    Witness Heartbeat
         pingnp1          : Normal    ping resolution
         pingnp2          : Normal    ping resolution
         httpnp1          : Normal    http resolution
     <group>
       failover1..........: Online    failover group1
         current          : server1
         disk1            : Online    /dev/sdb5
         exec1            : Online    exec resource1
         fip1             : Online    10.0.0.11
       failover2..........: Online    failover group2
         current          : server2
         disk2            : Online    /dev/sdb6
         exec2            : Online    exec resource2
         fip2             : Online    10.0.0.12
     <monitor>
       diskw1             : Normal    disk monitor1
       diskw2             : Normal    disk monitor2
       ipw1               : Normal    ip monitor1
       pidw1              : Normal    pidw1
       userw              : Normal    usermode monitor
       sraw               : Normal    sra monitor
    =============================================================

Information on each status is provided in "Status Descriptions".
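The -s display is intended for people, so a script that needs the server states has to parse its text. The following awk-based filter is a sketch built on several assumptions. It expects the layout of the example above: server lines appear under <server>, names are padded with dots, and "*" marks the local server. It also assumes that server names contain no dots and that output is in English. The layout may differ between versions or output languages. The function name servers_not_online is hypothetical. It prints only the first word of each status.

```shell
# Hypothetical filter: read "clpstat -s" output on stdin and print
# "<server> <status>" for every server whose status is not Online.
servers_not_online() {
    awk '
        /^[ \t]*<server>/ { in_srv = 1; next }    # start of the server section
        /^[ \t]*<[a-z]+>/ { in_srv = 0; next }    # <group>, <monitor>, ...
        in_srv && /\.\.\.+[ \t]*:/ {              # dotted server line, not a heartbeat line
            line = $0
            sub(/^[ \t]*\*?/, "", line)           # drop indent and "*" marker
            name = line
            sub(/\.+.*/, "", name)                # name = text before the dots
            status = line
            sub(/^[^:]*:[ \t]*/, "", status)      # text after the first colon
            split(status, f, " ")
            if (f[1] != "Online") print name, f[1]
        }'
}
```

For example: `clpstat -s | servers_not_online`.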

8.3.3. Displaying a group map (-g option)

To display a group map, run the clpstat command with the -g option.

Example of a command entry:

    # clpstat -g

Example of the display after running the command:

    ===================== GROUPMAP INFORMATION =================
     Cluster : cluster
      *server0 : server1
       server1 : server2
     -------------------------------------------------------------
      server0 [o] : failover1[o]  failover2[o]
      server1 [o] : failover3[o]
    =============================================================

Groups that are not running are not displayed.

Information on each status is provided in "Status Descriptions".
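To turn the group map into a "server group" list for a script, the rows can be picked out by their "[x] :" shape. This is a sketch that assumes the row layout of the example above, with one-character status marks such as "[o]". The server column uses the index names (server0, server1) that the header maps to actual server names. The function name group_placement is hypothetical.

```shell
# Hypothetical filter: read "clpstat -g" output on stdin and print one
# "<server index name> <group>" line per running group.
group_placement() {
    awk '
        $2 ~ /^\[.\]$/ && $3 == ":" {           # row: "server0 [o] : failover1[o] ..."
            for (i = 4; i <= NF; i++) {
                g = $i
                sub(/\[.*$/, "", g)             # strip the "[o]" status mark
                print $1, g
            }
        }'
}
```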

8.3.4. Displaying the status of monitor resources (-m option)

To display the status of monitor resources, run the clpstat command with the -m option.

Example of a command entry:

    # clpstat -m

Example of the display after running the command:

    =================== MONITOR RESOURCE STATUS =================
     Cluster : cluster
      *server0 : server1
       server1 : server2
     Monitor0 [diskw1 : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Online
     Monitor1 [diskw2 : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Online
     Monitor2 [ipw1 : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Online
     Monitor3 [pidw1 : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Offline
     Monitor4 [userw : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Online
     Monitor5 [sraw : Normal]
     -------------------------------------------------------------
      server0 [o] : Online
      server1 [o] : Online
    =============================================================

Information on each status is provided in "Status Descriptions".
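The overall status of each monitor resource appears in its "MonitorN [name : status]" header line, so a health check can scan only those lines. This is a sketch that assumes the header layout of the example above. The function name monitors_not_normal is hypothetical.

```shell
# Hypothetical filter: read "clpstat -m" output on stdin and print
# "<monitor> <status>" for each monitor whose overall status is not Normal.
monitors_not_normal() {
    awk '
        /^[ \t]*Monitor[0-9]+[ \t]*\[/ {
            s = substr($0, index($0, "[") + 1)  # text after "["
            s = substr(s, 1, index(s, "]") - 1) # text before "]"
            split(s, f, ":")
            name = f[1]; st = f[2]
            gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim both ends
            gsub(/^[ \t]+|[ \t]+$/, "", st)
            if (st != "Normal") print name, st
        }'
}
```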

8.3.5. Displaying the status of heartbeat resources (-n option)

To display the status of heartbeat resources, run the clpstat command with the -n option.

Example of a command entry:

    # clpstat -n

Example of the display after running the command:

    ================== HEARTBEAT RESOURCE STATUS ====================
     Cluster : cluster
      *server0 : server1
       server1 : server2
     HB0 : lanhb1
     HB1 : lanhb2
     HB2 : diskhb1
     HB3 : comhb1
     HB4 : witnesshb1
     [on server0 : Online]
      HB       0  1  2  3  4
     -----------------------------------------------------------------
      server0 : o  o  o  o  o
      server1 : o  o  o  x  o
     [on server1 : Online]
      HB       0  1  2  3  4
     -----------------------------------------------------------------
      server0 : o  o  o  x  o
      server1 : o  o  o  o  o
    =================================================================

Detailed information on each status is provided in "Status Descriptions".

The status of the example shown above: The example shows the status of all heartbeat resources as seen from server0 and server1 when the COM heartbeat resource is disconnected.

Because comhb1, a COM heartbeat resource, cannot communicate on either server, server0 cannot reach server1 over comhb1, and server1 cannot reach server0 over comhb1.

All other heartbeat resources on both servers can communicate.
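Reading the matrices by eye works for two servers, but a script can list every "x" mark together with the heartbeat resource name from the legend. This is a sketch that assumes the layout of the example above: "HBn : name" legend lines, "[on server : status]" view headers, and "server : o o x ..." rows. It also treats "x" as the only mark worth reporting. The function name hb_failures is hypothetical.

```shell
# Hypothetical filter: read "clpstat -n" output on stdin and print one
# line per "x" mark, as "on <viewing server>: <peer server> <hb resource>".
hb_failures() {
    awk '
        /^[ \t]*HB[0-9]+[ \t]*:/ {              # legend, e.g. "HB3 : comhb1"
            split($0, kv, ":")
            idx = kv[1]; res = kv[2]
            gsub(/[^0-9]/, "", idx)             # "HB3 " -> "3"
            gsub(/[ \t]/, "", res)
            name[idx] = res
            next
        }
        /^[ \t]*\[on / { viewer = $2; next }    # "[on server0 : Online]"
        viewer != "" && $2 == ":" {             # row: "server1 : o o o x o"
            for (i = 3; i <= NF; i++)
                if ($i == "x") print "on " viewer ": " $1 " " name[i - 3]
        }'
}
```

Applied to the example above, it reports comhb1 as unreachable in both directions.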

8.3.6. Displaying the status of network partition resolution resources (-p option)

To display the status of network partition resolution resources, run the clpstat command with the -p option.

Example of a command entry:

    # clpstat -p

Example of the display after running the command:

    =============== NETWORK PARTITION RESOURCE STATUS ================
     Cluster : cluster
      *server0 : server1
       server1 : server2
     NP0 : pingnp1
     NP1 : pingnp2
     NP2 : httpnp1
     [on server0 : Caution]
      NP       0  1  2
     -----------------------------------------------------------------
      server0 : o  x  o
      server1 : o  x  o
     [on server1 : Caution]
      NP       0  1  2
     -----------------------------------------------------------------
      server0 : o  x  o
      server1 : o  x  o
    =================================================================

Detailed information on each status is provided in "Status Descriptions".

The status of the example shown above: The example shows the status of all network partition resolution resources as seen from server0 and server1 when the device that the network partition resolution resource pingnp2 sends ping to is down.
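Each per-server view begins with a "[on server : status]" line, such as "Caution" in the example. A monitoring script can collect those view statuses before looking at individual columns. This is a sketch that assumes that header layout. The function name np_view_status is hypothetical.

```shell
# Hypothetical filter: read "clpstat -p" output on stdin and print the
# overall status of each per-server view, e.g. "server0 Caution".
np_view_status() {
    awk '
        /^[ \t]*\[on / {
            s = $0
            sub(/^[ \t]*\[on[ \t]+/, "", s)     # drop leading "[on "
            sub(/\][ \t]*$/, "", s)             # drop trailing "]"
            split(s, f, ":")
            srv = f[1]; st = f[2]
            gsub(/^[ \t]+|[ \t]+$/, "", srv)    # trim both ends
            gsub(/^[ \t]+|[ \t]+$/, "", st)
            print srv, st
        }'
}
```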

8.3.7. Displaying the cluster configuration data (--cl option)

To display the configuration data of a cluster, run the clpstat command with the -i, --cl, --sv, --hb, --np, --svg, --grp, --rsc, --mon, or --xcl option. To see more detailed information, also specify the --detail option.

For details of each item in the list, see "Cluster properties" in "2. Parameter details" in this guide.

To display the cluster configuration data, run the clpstat command with the --cl option.

Example of a command entry:

    # clpstat --cl

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [Cluster : cluster]
       Comment                  : failover cluster
    =============================================================

8.3.8. Displaying only the configuration data of certain servers (--sv option)

To display only the configuration data of a specified server, specify the server name after the --sv option of the clpstat command. To see the details, also specify the --detail option. When no server name is specified, the configuration data of all servers is displayed.

Example of a command entry:

    # clpstat --sv server1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [Server0 : server1]
       Comment                  : server1
       Virtual Infrastructure   : vSphere
       Product                  : EXPRESSCLUSTER X 4.2 for Linux
       Internal Version         : 4.2.0-1
       Edition                  : X
       Platform                 : Linux
    =============================================================

8.3.9. Displaying only the resource information of certain heartbeats (--hb option)

To display only the configuration data of a specified heartbeat resource, specify the name of the heartbeat resource after the --hb option of the clpstat command. To see the details, also specify the --detail option.

Example of a command entry for a LAN heartbeat resource:

    # clpstat --hb lanhb1

Example of the display after running the command:

    ==================== CLUSTER INFORMATION ===================
     [HB0 : lanhb1]
       Type                     : lanhb
       Comment                  : LAN Heartbeat
    =============================================================

Example of a command entry for a disk heartbeat resource:

    # clpstat --hb diskhb1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [HB2 : diskhb1]
       Type                     : diskhb
       Comment                  : Disk Heartbeat
    =============================================================

Example of a command entry for a COM heartbeat resource:

    # clpstat --hb comhb1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [HB3 : comhb1]
       Type                     : comhb
       Comment                  : COM Heartbeat
    =============================================================

Example of a command entry for a kernel mode LAN heartbeat resource:

    # clpstat --hb lankhb1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [HB4 : lankhb1]
       Type                     : lankhb
       Comment                  : Kernel Mode LAN Heartbeat
    =============================================================

Example of a command entry for a BMC heartbeat resource:

    # clpstat --hb bmchb1

Example of the display after running the command:

    ==================== CLUSTER INFORMATION =======================
     [HB0 : bmchb1]
       Type                     : bmchb
       Comment                  : BMC Heartbeat
    =================================================================

Tips: By using the --sv option and the --hb option together, you can display the information as follows.

Example of a command entry:

    # clpstat --sv --hb

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =================
     [Server0 : server1]
       Comment                  : server1
       Virtual Infrastructure   :
       Product                  : EXPRESSCLUSTER X 4.2 for Linux
       Internal Version         : 4.2.0-1
       Edition                  : X
       Platform                 : Linux
       [HB0 : lanhb1]
         Type                   : lanhb
         Comment                : LAN Heartbeat
       [HB1 : lanhb2]
         Type                   : lanhb
         Comment                : LAN Heartbeat
       [HB2 : diskhb1]
         Type                   : diskhb
         Comment                : Disk Heartbeat
       [HB3 : comhb1]
         Type                   : comhb
         Comment                : COM Heartbeat
       [HB4 : witnesshb]
         Type                   : witnesshb
         Comment                : Witness Heartbeat
     [Server1 : server2]
       Comment                  : server2
       Virtual Infrastructure   :
       Product                  : EXPRESSCLUSTER X 4.2 for Linux
       Internal Version         : 4.2.0-1
       Edition                  : X
       Platform                 : Linux
       [HB0 : lanhb1]
         Type                   : lanhb
         Comment                : LAN Heartbeat
       [HB1 : lanhb2]
         Type                   : lanhb
         Comment                : LAN Heartbeat
       [HB2 : diskhb1]
         Type                   : diskhb
         Comment                : Disk Heartbeat
       [HB3 : comhb1]
         Type                   : comhb
         Comment                : COM Heartbeat
       [HB4 : witnesshb]
         Type                   : witnesshb
         Comment                : Witness Heartbeat
    ============================================================

8.3.10. Displaying only the configuration data of certain network partition resolution resources (--np option)

To display only the configuration data of a specified network partition resolution resource, specify its name after the --np option of the clpstat command. To see the details, also specify the --detail option. When no network partition resolution resource name is specified, the configuration data of all network partition resolution resources is displayed.

Example of a command entry for a PING network partition resolution resource:

    # clpstat --np pingnp1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [NP0 : pingnp1]
       Type                     : pingnp
       Comment                  : ping resolution
    =================================================================

Example of a command entry for an HTTP network partition resolution resource:

    # clpstat --np httpnp1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [NP0 : httpnp1]
       Type                     : httpnp
       Comment                  : http resolution
    =================================================================

8.3.11. Displaying only the configuration data of certain server groups (--svg option)

To display only the configuration data of a specified server group, specify the name of the server group after the --svg option of the clpstat command. When no server group name is specified, the configuration data of all server groups is displayed.

Example of a command entry:

    # clpstat --svg servergroup1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [ServerGroup0 : servergroup1]
       server0                  : server1
       server1                  : server2
       server2                  : server3
    =================================================================

8.3.12. Displaying only the configuration data of certain groups (--grp option)

To display only the configuration data of a specified group, specify the name of the group after the --grp option of the clpstat command. To see the details, also specify the --detail option. When no group name is specified, the configuration data of all groups is displayed.

Example of a command entry:

    # clpstat --grp failover1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [Group0 : failover1]
       Type                     : failover
       Comment                  : failover group1
    ============================================================

8.3.13. Displaying only the configuration data of a certain group resource (--rsc option)

To display only the configuration data of a specified group resource, specify the name of the group resource after the --rsc option of the clpstat command. To see the details, also specify the --detail option. When no group resource name is specified, the configuration data of all group resources is displayed.

Example of a command entry for a floating IP resource:

    # clpstat --rsc fip1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [Resource2 : fip1]
       Type                     : fip
       Comment                  : 10.0.0.11
       IP Address               : 10.0.0.11
    ================================================================

Tips: By using the --grp option and the --rsc option together, you can display the information as follows.

Example of a command entry:

    # clpstat --grp --rsc

Example of the display after running the command:

    ===================== CLUSTER INFORMATION ==================
     [Group0 : failover1]
       Type                     : failover
       Comment                  : failover group1
       [Resource0 : disk1]
         Type                   : disk
         Comment                : /dev/sdb5
         Disk Type              : disk
         File System            : ext2
         Device Name            : /dev/sdb5
         Raw Device Name        :
         Mount Point            : /mnt/sdb5
       [Resource1 : exec1]
         Type                   : exec
         Comment                : exec resource1
         Start Script Path      : /opt/userpp/start1.sh
         Stop Script Path       : /opt/userpp/stop1.sh
       [Resource2 : fip1]
         Type                   : fip
         Comment                : 10.0.0.11
         IP Address             : 10.0.0.11
     [Group1 : failover2]
       Type                     : failover
       Comment                  : failover group2
       [Resource0 : disk2]
         Type                   : disk
         Comment                : /dev/sdb6
         Disk Type              : disk
         File System            : ext2
         Device Name            : /dev/sdb6
         Raw Device Name        :
         Mount Point            : /mnt/sdb6
       [Resource1 : exec2]
         Type                   : exec
         Comment                : exec resource2
         Start Script Path      : /opt/userpp/start2.sh
         Stop Script Path       : /opt/userpp/stop2.sh
       [Resource2 : fip2]
         Type                   : fip
         Comment                : 10.0.0.12
         IP Address             : 10.0.0.12
    =============================================================

8.3.14. Displaying only the configuration data of a certain monitor resource (--mon option)

To display only the configuration data of a specified monitor resource, specify the name of the monitor resource after the --mon option of the clpstat command. To see the details, also specify the --detail option. When no monitor resource name is specified, the configuration data of all monitor resources is displayed.

Example of a command entry for a floating IP monitor resource:

    # clpstat --mon fipw1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [Monitor2 : fipw1]
       Type                     : fipw
       Comment                  : fip monitor1
    =================================================================

8.3.15. Displaying the configuration data of a resource specified for an individual server (--rsc option or --mon option)

To display the configuration data of a resource that has settings for individual servers, specify the name of the resource after the --rsc or --mon option of the clpstat command.

Example of a command entry, when the monitor target IP addresses of the IP monitor resource are set individually for each server:

    # clpstat --mon ipw1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [Monitor2 : ipw1]
       Type                     : ipw
       Comment                  : ip monitor1
       IP Addresses             : Refer to server's setting
       <server1>
         IP Addresses           : 10.0.0.253
                                : 10.0.0.254
       <server2>
         IP Addresses           : 10.0.1.253
                                : 10.0.1.254
    =================================================================

8.3.16. Displaying only the configuration data of specific exclusion rules (--xcl option)

To display only the configuration data of a specified exclusion rule, specify the exclusion rule name after the --xcl option of the clpstat command.

Example of a command entry:

    # clpstat --xcl excl1

Example of the display after running the command:

    ===================== CLUSTER INFORMATION =====================
     [Exclusive Rule0 : excl1]
       Exclusive Attribute      : Normal
       group0                   : failover1
       group1                   : failover2
    =================================================================

8.3.17. Displaying all configuration data (-i option)

By specifying the -i option, you can display all the configuration information that is shown when the --cl, --sv, --hb, --svg, --grp, --rsc, --mon, and --xcl options are all specified.

If you run the command with both the -i option and the --detail option, all the detailed cluster configuration data is displayed. Because this produces a large amount of output at once, pipe it to a pager such as less, or redirect it to a file.
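As an alternative to paging `clpstat -i --detail | less`, a script can keep the full listing in a file for later comparison. This is a minimal sketch. The function name save_cluster_config, its directory argument, the timestamped file name, and the CLPSTAT override variable are all hypothetical choices made for illustration.

```shell
# Hypothetical helper: save "clpstat -i --detail" output to a timestamped
# file in the given directory (default: current directory) and print its path.
# CLPSTAT (hypothetical) lets a test substitute another command.
save_cluster_config() {
    dir=${1:-.}
    file="$dir/clpstat-i-detail-$(date +%Y%m%d-%H%M%S).txt"
    if "${CLPSTAT:-clpstat}" -i --detail > "$file"; then
        echo "$file"                          # report where the listing was written
    else
        rc=$?                                 # exit status of the failed clpstat
        rm -f "$file"                         # do not leave a partial listing behind
        echo "clpstat -i --detail failed (rc=$rc)" >&2
        return "$rc"
    fi
}
```

For example, `less "$(save_cluster_config /var/tmp)"` saves the listing and then opens it.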

Tips: Specifying the -i option displays all the information on the console. To display only part of it, combine the --cl, --sv, --hb, --svg, --grp, --rsc, and --mon options as needed. For example:

Example of a command entry: To display detailed information on the server named "server0", the group named "failover1", and the group resources of that group, enter:

    # clpstat --sv server0 --grp failover1 --rsc --detail
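The same option combination can be repeated for several groups from a script. This is a minimal sketch that runs `clpstat --grp <name> --rsc --detail` once per group name given. It stops at the first failing call and returns that call's exit status. The function name show_groups_detail and the CLPSTAT override variable are hypothetical.

```shell
# Hypothetical helper: print detailed configuration for each named group
# together with its group resources, one clpstat call per group.
# CLPSTAT (hypothetical) lets a test substitute another command.
show_groups_detail() {
    for grp in "$@"; do
        # "return" with no argument passes on the failed call's exit status.
        "${CLPSTAT:-clpstat}" --grp "$grp" --rsc --detail || return
    done
}
```

For example: `show_groups_detail failover1 failover2 | less`.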

8.3.18. Displaying the status of the cluster (--local option)

By specifying the --local option, you can display only the information of the server on which you run the clpstat command, without communicating with other servers.

Example of a command entry:

# clpstat --local

Example of the display after running the command ===================== CLUSTER STATUS ====================== Cluster : cluster cluster..........: Start cluster <server> *server1..........: Online server1 lanhb1 : Normal LAN Heartbeat lanhb2 : Normal LAN Heartbeat diskhb1 : Normal DISK Heartbeat comhb1 : Normal COM Heartbeat witnesshb1 : Normal Witness Heartbeat pingnp1 : Normal ping resolution pingnp2 : Normal ping resolution httpnp1 : Normal http resolution server2...........: Online server2 lanhb1 : - LAN Heartbeat lanhb2 : - LAN Heartbeat diskhb1 : - DISK Heartbeat comhb1 : - COM Heartbeat witnesshb1 : - Witness Heartbeat pingnp1 : - ping resolution pingnp2 : - ping resolution httpnp1 : - http resolution <group> failover1.........: Online failover group1 current : server1 disk1 : Online /dev/sdb5 exec1 : Online exec resource1 fip1 : Online 10.0.0.11 failover2.........: - failover group2 current : server2 disk2 : - /dev/sdb6 exec2 : - exec resource2 fip2 : - 10.0.0.12 <monitor> diskw1 : Online disk monitor1 diskw2 : Online disk monitor2 ipw1 : Online ip monitor1 pidw1 : Online pidw1 userw : Online usermode monitor sraw : Online sra monitor =============================================================

Information on each status is provided in "Status Descriptions".

8.3.19. Status Descriptions

Cluster

| Function | Status | Description |
|---|---|---|
| Status display (--local) | Start | Starting |
| | Suspend | Being suspended |
| | Stop | Offline Pending |
| | Unknown | Status unknown |

Server

| Function | Status | Description |
|---|---|---|
| Status display, Heartbeat resource status display | Online | Starting |
| | Offline | Offline Pending |
| | Online Pending | Now being started |
| | Offline Pending | Now being stopped |
| | Caution | Heartbeat resource failure |
| | Unknown | Status unknown |
| | - | Status unknown |
| Group map display, Monitor resource status display | o | Starting |
| | x | Offline Pending |
| | - | Status unknown |

Heartbeat Resource

| Function | Status | Description |
|---|---|---|
| Status display | Normal | Normal |
| | Caution | Failure (Some) |
| | Error | Failure (All) |
| | Unused | Not used |
| | Unknown | Unknown |
| | - | Status unknown |
| Heartbeat resource status display | o | Able to communicate |
| | x | Unable to communicate |
| | - | Not used or status unknown |

Network Partition Resolution Resource

| Function | Status | Description |
|---|---|---|
| Status display | Normal | Normal |
| | Error | Failure |
| | Unused | Not used |
| | Unknown | Status unknown |
| | - | Status unknown |
| Network partition resolution status display | o | Able to communicate |
| | x | Unable to communicate |
| | - | Not used or status unknown |

Group

| Function | Status | Description |
|---|---|---|
| Status display | Online | Started |
| | Offline | Stopped |
| | Online Pending | Now being started |
| | Offline Pending | Now being stopped |
| | Error | Error |
| | Unknown | Status unknown |
| | - | Status unknown |
| Group map display | o | Started |
| | e | Error |
| | p | Now being started/stopped |

Group Resource

| Function | Status | Description |
|---|---|---|
| Status display | Online | Started |
| | Offline | Stopped |
| | Online Pending | Now being started |
| | Offline Pending | Now being stopped |
| | Online Failure | Starting failed |
| | Offline Failure | Stopping failed |
| | Unknown | Status unknown |
| | - | Status unknown |

Monitor Resource

| Function | Status | Description |
|---|---|---|
| Status display | Normal | Normal |
| | Caution | Error (Some) |
| | Error | Error (All) |
| | Not Used | Not used |
| | Unknown | Status unknown |
| Status display (--local), Monitor resource status display | Online | Started |
| | Offline | Stopped |
| | Caution | Caution |
| | Suspend | Temporarily stopped |
| | Online Pending | Now being started |
| | Offline Pending | Now being stopped |
| | Online Failure | Starting failed |
| | Offline Failure | Stopping failed |
| | Not used | Not used |
| | Unknown | Status unknown |
| | - | Status unknown |
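When scripting checks against these statuses, it can help to extract the group lines from the clpstat --local output. The sketch below is a hypothetical helper, not part of EXPRESSCLUSTER. It assumes the output is laid out over multiple lines as in the --local example earlier in this section: a "<group>" section header, group lines of the form "failover1.........: Online   failover group1" (name, dot leader, colon, status), and resource lines without a dot leader.

```shell
# Hypothetical helper: read "clpstat --local" output on stdin and print
# "<group> <status>" for each group in the <group> section.
# Assumes the layout of the example output (dot leader before the colon).
list_group_status() {
    awk '
        /^ *<group>/  { in_group = 1; next }
        /^ *<[a-z]+>/ { in_group = 0 }      # any other section header ends it
        in_group && $1 ~ /\.\.+:$/ {
            name = $1
            sub(/\.+:$/, "", name)          # strip the dot leader and colon
            print name, $2                  # $2 is the status column
        }
    '
}
```

For example, clpstat --local | list_group_status prints each group with its status (such as Online, or - for a group whose status cannot be determined from the local server), which can then be compared with the Group table above.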

8.4. Operating the cluster (clpcl command)

The clpcl command operates a cluster.

Command line

clpcl -s [-a] [-h hostname]

clpcl -t [-a] [-h hostname] [-w timeout] [--apito timeout]

clpcl -r [-a] [-h hostname] [-w timeout] [--apito timeout]

clpcl --suspend [--force] [-w timeout] [--apito timeout]

clpcl --resume

Description

This command starts, stops, restarts, suspends, or resumes the cluster daemon.

Option

-s: Starts the cluster daemon.

-t: Stops the cluster daemon.

-r: Restarts the cluster daemon.

--suspend: Suspends the entire cluster.

-w timeout: When the -t, -r, or --suspend option is used, specifies how long (in seconds) the clpcl command waits for the cluster daemon to finish stopping or suspending.

When -w is given without a time-out value, the command waits without limit.

When 0 (zero) is specified, the command does not wait.

When the -w option is omitted, the command waits for (heartbeat time-out x 2) seconds.

--resume: Resumes the entire cluster. The status of the group resources at the time the cluster was suspended is retained.

-a: Executes the command on all servers.

-h hostname: Sends the processing request to the server specified by hostname. If the -h option is omitted, the request is sent to the server on which this command runs (the local server).

--force: When used with the --suspend option, forcibly suspends the cluster regardless of the status of the servers in the cluster.

--apito timeout: Specifies, in seconds, how long to wait (internal communication timeout) for the EXPRESSCLUSTER daemon to start or stop. A value from 1 to 9999 can be specified. If the --apito option is omitted, the wait follows the internal communication timeout set in the cluster properties.
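The three -w cases can be summarized as a small decision function. This is a sketch for illustration only: the function name is hypothetical, the heartbeat time-out is passed in by the caller (assumed to be the value configured in the cluster properties, not read from the cluster), and the "no value means unlimited" branch follows this text's reading of the -w description.

```shell
# Hypothetical helper: report how long clpcl -t/-r/--suspend waits.
#   $1: heartbeat time-out in seconds (assumed known to the caller)
#   $2: value passed to -w; omit the argument entirely if -w is not used,
#       pass "" if -w is given without a value
clpcl_wait_seconds() {
    hb_timeout=$1
    if [ "$#" -lt 2 ]; then
        echo $(( hb_timeout * 2 ))   # -w omitted: heartbeat time-out x 2
    elif [ -z "$2" ]; then
        echo unlimited               # -w given without a time-out value
    else
        echo "$2"                    # explicit value; 0 means "do not wait"
    fi
}
```

For example, with a heartbeat time-out of 90 seconds, omitting -w gives a wait of 180 seconds.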

Return Value

0: Success

Other than 0: Failure

Remarks

When this command is executed with the -s or --resume option, it returns control as soon as processing starts on the target server.

When this command is executed with the -t or --suspend option, it returns control after waiting for the processing to complete.

When this command is executed with the -r option, it returns control when the EXPRESSCLUSTER daemon restarts on the target server after stopping once.

Run the clpstat command to check whether the EXPRESSCLUSTER daemon has started or resumed.

Notes

Run this command as the root user.

This command cannot be executed while a group is being started or stopped.

For the -h option, specify the name of a server in the cluster.

When you suspend the cluster, the cluster daemon must be running on all servers in the cluster. When the --force option is used, the cluster is forcibly suspended even if some servers in the cluster are stopped.

When you start or resume the cluster, the servers in the cluster are accessed via the paths below, in this order, and a path through which access succeeded is used:

Via the IP address on the interconnect LAN

Via the IP address on the public LAN

When you resume the cluster, use the clpstat command to confirm that there is no active server in the cluster.

This command starts, stops, restarts, suspends, or resumes only the EXPRESSCLUSTER daemon. The mirror agent and similar components are not started, stopped, restarted, suspended, or resumed along with it.

Example of a command entry:

Example 1: Starting the cluster daemon on the local server

# clpcl -s

Example 2: Starting the cluster daemon on server1 from server0

# clpcl -s -h server1

Start server1 : Command succeeded.

When a server name is specified, the display after running the command looks similar to the above, in the following format:

Start hostname : Execution result

(If startup fails, the cause of the failure is displayed.)

Example 3: Starting the cluster daemon on all servers

# clpcl -s -a

Start server0 : Command succeeded.

Start server1 : Performed startup processing to the active cluster daemon.

When the command is run for all servers, the display after running the command looks similar to the above, in the following format:

Start hostname : Execution result

(If startup fails, the cause of the failure is displayed.)

Example 4: Stopping the cluster daemon on all servers

# clpcl -t -a

When stopping the cluster daemon on all servers, the command waits until the EXPRESSCLUSTER daemons have stopped on all servers.

If stopping fails, an error message is displayed.
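Because clpcl -t returns control only after the stop processing completes and reports the result through its return value (0 for success, other than 0 for failure), a maintenance script can branch on it directly. The wrapper below is a hypothetical sketch; its name and the 600-second default wait are assumptions, not values from this guide.

```shell
# Hypothetical wrapper: stop the cluster daemon on all servers and act on
# the return value of clpcl (0 = success, other than 0 = failure).
#   $1: wait time for -w in seconds (assumed default: 600)
stop_cluster_all() {
    wait_sec=${1:-600}
    rc=0
    clpcl -t -a -w "$wait_sec" || rc=$?
    if [ "$rc" -ne 0 ]; then
        echo "clpcl -t -a failed (exit status $rc); check the error message and clpstat" >&2
        return "$rc"
    fi
    echo "cluster daemon stopped on all servers"
}
```

Passing an explicit wait time keeps the script's behavior independent of the heartbeat time-out configured for the cluster.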

Error Messages

| Message | Cause/Solution |
|---|---|
| Log in as root. | Log on as the root user. |
| Invalid configuration file. Create valid cluster configuration data. | Create valid cluster configuration data using the Cluster WebUI. |
| Invalid option. | Specify a valid option. |
| Performed stop processing to the stopped cluster daemon. | The stopping process has been executed on a cluster daemon that is already stopped. |
| Performed startup processing to the active cluster daemon. | The startup process has been executed on a cluster daemon that is already active. |
| Could not connect to the server. Check if the cluster daemon is active. | Check if the cluster daemon is activated. |
| Could not connect to the data transfer server. Check if the server has started up. | Check if the server is running. |
| Failed to obtain the list of nodes. Specify a valid server name in the cluster. | Specify the valid name of a server in the cluster. |
| Failed to obtain the daemon name. | Failed to obtain the cluster name. |
| Failed to operate the daemon. | Failed to control the cluster. |
| Resumed the daemon that is not suspended. | The resume process was performed for the HA Cluster daemon, which is not suspended. |
| Invalid server status. | Check that the cluster daemon is activated. |
| Server is busy. Check if this command is already run. | This command may have already been run. |
| Server is not active. Check if the cluster daemon is active. | Check if the cluster daemon is activated. |
| There is one or more servers of which cluster daemon is active. If you want to perform resume, check if there is any server whose cluster daemon is active in the cluster. | When you execute the command to resume, check that there is no server in the cluster on which the cluster daemon is active. |
| All servers must be activated. When suspending the server, the cluster daemon need to be active on all servers in the cluster. | When you execute the command to suspend, the cluster daemon must be active on all servers in the cluster. |
| Resume the server because there is one or more suspended servers in the cluster. | Execute the command to resume because one or more servers in the cluster are suspended. |
| Invalid server name. Specify a valid server name in the cluster. | Specify the valid name of a server in the cluster. |
| Connection was lost. Check if there is a server where the cluster daemon is stopped in the cluster. | Check if there is any server in the cluster on which the cluster daemon is stopped. |
| Invalid parameter. | The value specified as a command parameter may be invalid. |
| Internal communication timeout has occurred in the cluster server. If it occurs frequently, set the longer timeout. | A time-out occurred in the HA Cluster internal communication. If time-outs keep occurring, set a longer internal communication time-out. |
| Processing failed on some servers. Check the status of failed servers. | If stopping was executed with all servers specified, the stopping process failed on one or more servers. Check the status of the server(s) on which the stopping process failed. |
| Internal error. Check if memory or OS resources are sufficient. | Check to see if the memory or OS resources are sufficient. |
| There is a server that is not suspended in cluster. Check the status of each server. | There is a server that is not suspended in the cluster. Check the status of each server. |
| Suspend %s : Could not suspend in time. | The server failed to complete the suspending process of the cluster daemon within the time-out period. Check the status of the server. |
| Stop %s : Could not stop in time. | The server failed to complete the stopping process of the cluster daemon within the time-out period. Check the status of the server. |
| Stop %s : Server was suspended. Could not connect to the server. Check if the cluster daemon is active. | The request to stop the cluster daemon was made. However, the server was suspended. |
| Could not connect to the server. Check if the cluster daemon is active. | The request to stop the cluster daemon was made. However, connecting to the server failed. Check the status of the server. |
| Suspend %s : Server already suspended. Could not connect to the server. Check if the cluster daemon is active. | The request to suspend the cluster daemon was made. However, the server was already suspended. |
| Event service is not started. | Event service is not started. Check it. |
| Mirror Agent is not started. | Mirror Agent is not started. Check it. |
| Event service and Mirror Agent are not started. | Event service and Mirror Agent are not started. Check them. |
| Some invalid status. Check the status of cluster. | The status of a group may be changing. Try again after the status change of the group is complete. |
| Failed to shut down the server. | Failed to shut down or reboot the server. |

8.5. Shutting down a specified server (clpdown command)

The clpdown command shuts down a specified server.

Command line

clpdown [-r] [-h hostname]

Description

This command stops the cluster daemon and shuts down the server.

Option

None: Shuts down the server.

-r: Reboots the server.

-h hostname: Sends the processing request to the server specified by hostname. If the -h option is omitted, the request is sent to the server on which this command runs (the local server).

Return Value

0: Success

Other than 0: Failure

Remarks

After stopping the cluster daemon, this command internally runs the following command:

Without any option specified: shutdown

With the -r option specified: reboot

This command returns control when the group stop processing is completed.

This command shuts down the server even when the EXPRESSCLUSTER daemon is stopped.

Notes

Run this command as the root user.

This command cannot be executed while a group is being started or stopped.

For the -h option, specify the name of a server in the cluster.

Example of a command entry:

Example 1: Stopping the cluster daemon and shutting down the local server

# clpdown

Example 2: Shutting down and rebooting server1 from server0

# clpdown -r -h server1

Error Message

8.6. Shutting down the entire cluster (clpstdn command)

The clpstdn command shuts down the entire cluster.

Command line

clpstdn [-r] [-h hostname]

Description

This command stops the cluster daemon in the entire cluster and shuts down all servers.

Option

None: Executes a cluster shutdown.

-r: Executes a cluster shutdown followed by a reboot.

-h hostname: Sends the processing request to the server specified by hostname. If the -h option is omitted, the request is sent to the server on which this command runs (the local server).

Return Value

0: Success

Other than 0: Failure

Remarks

This command returns control when the group stop processing is completed.

Notes

Run this command as the root user.

This command cannot be executed while a group is being started or stopped.

For the -h option, specify the name of a server in the cluster.

A server that cannot be accessed from the server running the command (for example, a server whose LAN heartbeat resources are all offline) is not shut down.

Example of a command entry:

Example 1: Shutting down the cluster

# clpstdn

Example 2: Shutting down and rebooting the cluster

# clpstdn -r

Error Message

8.7. Operating groups (clpgrp command)

The clpgrp command operates groups.

Command line

clpgrp -s [group_name] [-h hostname] [-f] [--apito timeout]

clpgrp -t [group_name] [-h hostname] [-f] [--apito timeout]

clpgrp -m [group_name] [-h hostname] [-a hostname] [--apito timeout]

clpgrp -l [group_name] [-h hostname] [-a hostname] [--apito timeout]

Description

This command starts, stops, or moves groups. It also migrates groups.

Option

-s [group_name]: Starts groups. If a group name is specified, only that group starts. If no group name is specified, all groups start.

-t [group_name]: Stops groups. If a group name is specified, only that group stops. If no group name is specified, all groups stop.

-m [group_name]: Moves the specified group. If no group name is specified, all groups are moved. The status of the group resources of the moved group is retained.

-l [group_name]: Migrates the specified group. The group type must be the migration type. If no group name is specified, all active migration groups on the server are migrated.

-h hostname: Sends the processing request to the server specified by hostname. If the -h option is omitted, the request is sent to the server on which this command runs (the local server).

-a hostname: Specifies the server given by hostname as the destination to which the group is moved. If the -a option is omitted, the group is moved according to the failover policy.

-f: When used with the -s option on a group that is active on a remote server, forcibly starts the group on the server that requested the process. When used with the -t option, forcibly stops the group.

-n group_name: Displays the name of the server on which the group has been started.

--apito timeout: Specifies, in seconds, how long to wait (internal communication timeout) for group resources to start or stop. A value from 1 to 9999 can be specified. If the --apito option is omitted, the wait follows the internal communication timeout set in the cluster properties.

Return Value

0: Success

Other than 0: Failure

Notes

Run this command as the root user.

The cluster daemon must be running on the server that runs this command.

For the -h and -a options, specify the name of a server in the cluster.

Make sure to specify a group name when you use the -m option.

When a group is moved with the -m option, the move is judged successful once the group start process is performed on the destination server. Be aware that even if this command succeeds, activation of a resource may still fail on the destination server. To check by the return value whether the group has started, execute the following:

# clpgrp -s [group_name] [-h hostname] -f

To move a group that belongs to an exclusion rule whose exclusion attribute is set to "Normal" by using the -m option, explicitly specify the destination server with the -a option.

If the -a option is omitted, moving the group fails when a group belonging to an exclusion rule whose exclusion attribute is set to "Normal" is already active on every server to which the group can be moved.
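The note on -m suggests a two-step pattern: move the group, then issue clpgrp -s ... -f toward the destination so that the return value reflects whether the group actually started. The helper below is a hypothetical sketch of that pattern; the function name and the return codes 1 and 2 are assumptions chosen for illustration.

```shell
# Hypothetical helper: move a group, then confirm by return value that it
# started on the destination server, as described in the note above.
#   $1: group name   $2: destination server name
move_group_and_check() {
    group=$1 dest=$2
    if ! clpgrp -m "$group" -a "$dest"; then
        echo "move request for $group failed" >&2
        return 1
    fi
    # -m succeeds once start processing begins on the destination, so
    # re-issue a start request there to check the actual result.
    if ! clpgrp -s "$group" -h "$dest" -f; then
        echo "$group did not start on $dest; check clpstat" >&2
        return 2
    fi
}
```

Specifying the destination with -a also covers the exclusion-rule case above, where omitting -a can make the move fail.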

-

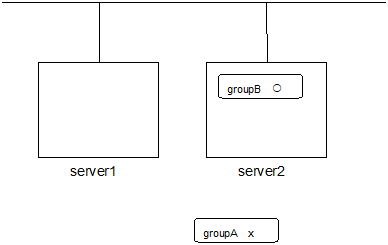

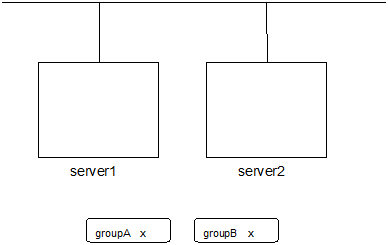

Example of Execution The following is an example of status transition when operating the groups.

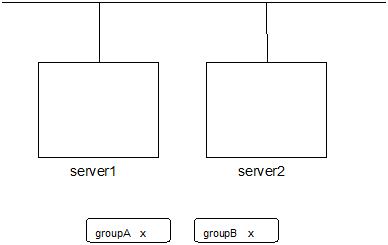

Example: The cluster has two servers and two groups.

Failover policy of group

groupA server1 -> server2groupB server2 -> server1Both groups are stopped.

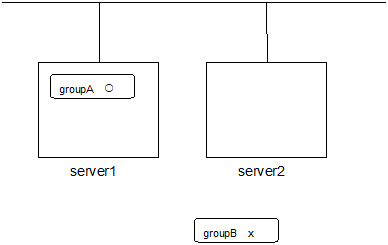

Run the following command on server1.

# clpgrp -s groupAGroupA starts in server1.

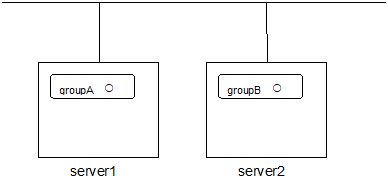

Run the following command in server2.

# clpgrp -sAll groups that are currently stopped but can be started start in server2.

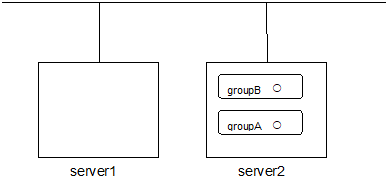

Run the following command in server1

# clpgrp -m groupAGroupA moves to server2.

Run the following command in server1

# clpgrp -t groupA -h server2GroupA stops.

Run the following command in server1.

# clpgrp -t Command Succeeded.

When the command is executed, there is no group running on server1. So, "Command Succeeded." appears.

Add -f to the command you have run in Step 6 and execute it on server1.

# clpgrp -t -fGroups which were started in server2 can be forcefully deactivated from server1.

Error message

| Message | Cause/Solution |
|---|---|
| Log in as root. | Log on as the root user. |
| Invalid configuration file. Create valid cluster configuration data. | Create valid cluster configuration data using the Cluster WebUI. |
| Invalid option. | Specify a valid option. |
| Could not connect to the server. Check if the cluster daemon is active. | Check if the cluster daemon is activated. |
| Invalid server status. | Check if the cluster daemon is activated. |
| Server is not active. Check if the cluster daemon is active. | Check if the cluster daemon is activated. |
| Invalid server name. Specify a valid server name in the cluster. | Specify the valid name of a server in the cluster. |
| Connection was lost. Check if there is a server where the cluster daemon is stopped in the cluster. | Check if there is any server in the cluster on which the cluster daemon has stopped. |
| Invalid parameter. | The value specified as a command parameter may be invalid. |
| Internal communication timeout has occurred in the cluster server. If it occurs frequently, set a longer timeout. | A time-out occurred in the EXPRESSCLUSTER internal communication. If time-outs keep occurring, set a longer internal communication time-out. |
| Invalid server. Specify a server that can run and stop the group, or a server that can be a target when you move the group. | The server that starts/stops the group, or to which the group is moved, is invalid. Specify a valid server. |
| Could not start the group. Try it again after the other server is started, or after the Wait Synchronization time is timed out. | Start the group after the remote server has started, or after the start-up wait time has timed out. |
| No operable group exists in the server. | Check if there is any group that can be operated on the server that requested the process. |
| The group has already been started on the local server. | Check the status of the group by using the Cluster WebUI or the clpstat command. |
| The group has already been started on the other server. To start/stop the group on the local server, use -f option. | Check the status of the group by using the Cluster WebUI or the clpstat command. To start or stop, from the local server, a group that was started on a remote server, move the group or run the command with the -f option. |
| The group has already been started on the other server. To move the group, use "-h <hostname>" option. | Check the status of the group by using the Cluster WebUI or the clpstat command. To move a group that was started on a remote server, run the command with the -h hostname option. |
| The group has already been stopped. | Check the status of the group by using the Cluster WebUI or the clpstat command. |
| Failed to start one or more group resources. Check the status of group | Check the status of the group by using the Cluster WebUI or the clpstat command. |
| Failed to stop one or more group resources. Check the status of group | Check the status of the group by using the Cluster WebUI or the clpstat command. |
| The group is busy. Try again later. | The group is being started or stopped. Wait for a while and then try again. |
| An error occurred on one or more groups. Check the status of group | Check the status of the group by using the Cluster WebUI or the clpstat command. |
| Invalid group name. Specify a valid group name in the cluster. | Specify the valid name of a group in the cluster. |
| Server is not in a condition to start group or any critical monitor error is detected. | Check the status of the server by using the Cluster WebUI or the clpstat command. An error is detected in a critical monitor on the server on which an attempt was made to start a group. |
| There is no appropriate destination for the group. Other servers are not in a condition to start group or any critical monitor error is detected. | Check the status of the server by using the Cluster WebUI or the clpstat command. An error is detected in a critical monitor on all other servers. |
| The group has been started on the other server. To migrate the group, use "-h <hostname>" option. | Check the status of the group by using the Cluster WebUI or the clpstat command. To migrate a group that was started on a remote server, run the command with the -h hostname option. |
| The specified group cannot be migrated. | The specified group cannot be migrated. |
| The specified group is not vm group. | The specified group is not a virtual machine group. |
| Migration resource does not exist. | Check the status of the group by using the Cluster WebUI or the clpstat command. The resource to be migrated is not found. |
| Migration resource is not started. | Check the status of the group by using the Cluster WebUI or the clpstat command. The resource to be migrated is not started. |
| Some invalid status. Check the status of cluster. | The status is invalid for some reason. Check the status of the cluster. |
| Internal error. Check if memory or OS resources are sufficient. | Check to see if the memory or OS resources are sufficient. |

8.8. Collecting logs (clplogcc command)

The clplogcc command collects logs.

Command line

clplogcc [ [-h hostname] | [-n targetnode1 -n targetnode2 ......] ] [-t collect_type] [-r syslog_rotate_number] [-o path] [-l]

Description

This command collects information, including logs and OS information, by accessing the data transfer server.

Option

None: Collects logs from the entire cluster.

-h hostname: Specifies the name of the server to access for collecting cluster node information.

-t collect_type: Specifies a log collection pattern. If this option is omitted, type1 is used. Information on log collection types is provided in "Collecting logs by specifying a type (-t option)".

-r syslog_rotate_number: Specifies how many generations of syslog to collect. If this option is omitted, only one generation is collected.

-o path: Specifies the output destination of the collected files. If this option is omitted, logs are output under tmp in the installation path.

-n targetnode: Specifies the name of a server from which logs are collected. With this option, logs are collected from the specified server rather than from the entire cluster.

-l: Collects logs on the local server without going through the data transfer server. The -h option and the -n option cannot be specified at the same time.

Return Value

0: Success

Other than 0: Failure

Remarks

The collected log files are compressed in tar.gz format. To decompress them, run the tar command with the xzf options.
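When several archives have been collected into one output directory, each can be extracted into its own subdirectory with tar xzf and -C. The function below is a hypothetical sketch; it assumes the collected archives end in .tar.gz and sit directly in the given directory (the actual archive names are not specified here).

```shell
# Hypothetical helper: extract every *.tar.gz in a directory into a
# subdirectory named after the archive (server1.tar.gz -> server1/).
#   $1: directory holding the collected archives (e.g. the -o path)
extract_collected_logs() {
    src_dir=$1
    for archive in "$src_dir"/*.tar.gz; do
        [ -e "$archive" ] || continue      # no match: glob left unexpanded
        name=${archive##*/}
        dest="$src_dir/${name%.tar.gz}"
        mkdir -p "$dest" && tar xzf "$archive" -C "$dest" || return 1
    done
}
```

Extracting into separate directories keeps files from different servers apart when the archives contain the same relative paths.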

Notes

Run this command as the root user.

For the -h option, specify the name of a server in the cluster that can be resolved by name resolution.

For the -n option, specify a server name that can be resolved by name resolution. If name resolution is not possible, specify the interconnect or public LAN address.

When you run this command, the servers in the cluster are accessed via the paths below, in this order, and a path through which access succeeded is used:

Via the IP address on the interconnect LAN

Via the IP address on the public LAN

Via the IP address resolved from the server name in the cluster configuration data

If log files collected on Linux (compressed by the tar command in pax format) are decompressed with the tar command in gnutar format, a PaxHeaders.X folder is generated. This does not affect operation.

Example of command execution:

Example 1: Collecting logs from all servers in the cluster

# clplogcc

Collect Log server1 : Success

Collect Log server2 : Success

The log collection result (server status) is displayed for each server on which log collection was executed, in the following format:

Process hostname : Result of log collection (server status)

Execution Result

For this command, the following processes are displayed.

| Steps in Process | Meaning |
|---|---|
| Connect | Displayed when the access fails. |
| Get File size | Displayed when acquiring the file size fails. |
| Collect Log | Displayed with the file acquisition result. |

The following results (server status) are displayed:

| Result (server status) | Meaning |
|---|---|
| Success | Success |
| Timeout | Time-out occurred. |
| Busy | The server is busy. |
| Not Exist File | The file does not exist. |
| No Free space | No free space on the disk. |
| Failed | Failure caused by other errors. |
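To spot servers whose log collection did not succeed, the result lines can be filtered for anything other than Success. The sketch below is a hypothetical filter; it assumes each line has the form "Process hostname : Result" as in Example 1, with the host name as the last word before " : ".

```shell
# Hypothetical filter: read clplogcc output on stdin and print
# "<hostname> <result>" for every line whose result is not Success.
failed_log_collections() {
    awk -F' : ' 'NF >= 2 {
        result = $2
        sub(/[ \t]+$/, "", result)        # tolerate trailing blanks
        if (result != "Success") {
            n = split($1, words, " ")     # last word before " : " is the host
            print words[n], result
        }
    }'
}
```

For example, clplogcc | tee clplogcc.out | failed_log_collections keeps the full output in a file and lists only the servers that need attention.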

Error Message

| Message | Cause/Solution |
|---|---|
| Log in as root. | Log on as the root user. |
| Invalid configuration file. Create valid cluster configuration data. | Create valid cluster configuration data using the Cluster WebUI. |
| Invalid option. | Specify a valid option. |
| Specify a number in a valid range. | Specify a number within a valid range. |
| Specify a correct number. | Specify a valid number. |
| Specify correct generation number of syslog. | Specify a valid number for the syslog generation. |
| Collect type must be specified 'type1' or 'type2' or 'type3' or 'type4' or 'type5' or 'type6'. Incorrect collection type is specified. | An invalid collection type has been specified. |
| Specify an absolute path as the destination of the files to be collected. | Specify an absolute path for the output destination of collected files. |
| Specifiable number of servers are the max number of servers that can constitute a cluster. | The number of servers you specify must not exceed the maximum number of servers in a cluster configuration. |
| Could not connect to the server. Check if the cluster daemon is active. | Check if the cluster daemon is activated. |
| Failed to obtain the list of nodes. Specify a valid server name in the cluster. | Specify the valid name of a server in the cluster. |
| Invalid server status. | Check if the cluster daemon is activated. |
| Server is busy. Check if this command is already run. | This command may have already been run. Check the status. |
| Internal error. Check if memory or OS resources are sufficient. | Check to see if the memory or OS resources are sufficient. |

8.8.1. Collecting logs by specifying a type (-t option)

To collect only the specified types of logs, run the clplogcc command with the -t option.

Specify a type from 1 through 6 for the log collection.

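| | type1 | type2 | type3 | type4 | type5 | type6 |
|---|---|---|---|---|---|---|
| (1) Information collected by default | y | y | y | y | n | n |
| (2) syslog | y | y | y | n | n | n |
| (3) core file | y | y | n | y | n | n |
| (4) OS information | y | y | y | y | n | n |
| (5) script | y | y | n | n | n | n |
| (6) ESMPRO/AC related logs | y | y | n | n | n | n |
| (7) HA logs | n | y | n | n | n | n |
| (8) Mirror statistics information | n | n | n | n | y | n |
| (9) Cluster statistics information | n | n | n | n | n | y |
| (10) System resource statistics information | y | y | y | y | n | y |

(y=yes, n=no)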

Run this command from the command line as follows.

Example: When collecting logs using type2

# clplogcc -t type2

When the -t option is not specified, type1 is used.

Information to be collected by default

Information on the following is collected by default:

Logs of each module in the EXPRESSCLUSTER Server

Alert logs

Attribute of each module (ls -l) in the EXPRESSCLUSTER Server

In bin, lib

In cloud

In alert/bin, webmgr/bin

In ha/jra/bin, ha/sra/bin, ha/jra/lib, ha/sra/lib

In drivers/md

In drivers/khb

In drivers/ka

All installed packages (rpm -qa expresscls execution result)

EXPRESSCLUSTER version

Distribution (/etc/*-release)

License information

Cluster configuration data file

Policy file

Cloud environment configuration directory

Dump of shared memory used by EXPRESSCLUSTER

Local node status of EXPRESSCLUSTER (clpstat --local execution results)

Process and thread information (ps execution result)

PCI device information (lspci execution result)

Service information (execution results of the commands such as systemctl, chkconfig, and ls)

Output result of kernel parameter (result of running sysctl -a)

glibc version (rpm -qi glibc execution result)

Kernel loadable module configuration (/etc/modules.conf, /etc/modprobe.conf)

File system (/etc/fstab)

IPC resource (ipcs execution result)

System (uname -a execution result)

Network statistics (netstat, ss execution result IPv4/IPv6)

ip (execution results of the command ip addr, link, maddr, route or -s l)

All network interfaces (ethtool execution result)

Information collected at an emergency OS shutdown (See "Collecting information when a failure occurs".)

libxml2 version (rpm -qi libxml2 execution result)

Static host table (/etc/hosts)

File system export table (exportfs -v execution result)

User resource limitations (ulimit -a execution result)

File system exported by kernel-based NFS (/etc/exports)

OS locale

Terminal session environment variables (export execution result)

Language locale (/etc/sysconfig/i18n)

Time zone (env - date execution result)

Work area of EXPRESSCLUSTER server

Monitoring options (this information is collected only if the options are installed):

Dump information collected when a monitor resource timeout occurred

Detailed Oracle information collected when the Oracle monitor resource detected an error

syslog

syslog (/var/log/messages)

syslog (/var/log/syslog)

Syslogs for the number of generations specified (/var/log/messages.x)

journal log (such as files in /var/run/log/journal/)

core file

Core files of EXPRESSCLUSTER modules, stored in /opt/nec/clusterpro/log under the following archive names:

Alert related:

altyyyymmdd_x.tar

WebManager server related:

wmyyyymmdd_x.tar

EXPRESSCLUSTER core related:

clsyyyymmdd_x.tar

srayyyymmdd_x.tar

jrayyyymmdd_x.tar

yyyymmdd indicates the date when the logs are collected. x is a sequence number.

OS information

The following OS information is collected by default:

Kernel mode LAN heartbeat, keep alive

/proc/khb_moninfo

/proc/ka_moninfo

/proc/devices

/proc/mdstat

/proc/modules

/proc/mounts

/proc/meminfo

/proc/cpuinfo

/proc/partitions

/proc/pci

/proc/version

/proc/ksyms

/proc/net/bond*

All files in the /proc/scsi/ directory

All files in the /proc/ide/ directory

/etc/fstab

/etc/rc*.d

/etc/syslog.conf

/etc/syslog-ng/syslog-ng.conf

/etc/snmp/snmpd.conf

Kernel ring buffer (dmesg execution result)

ifconfig (the result of running ifconfig)

iptables (the result of running iptables -L)

ipchains (the result of running ipchains -L)

df (the result of running df)

Raw device information (the result of running raw -qa)

Kernel module load information (the result of running lsmod)

Host name and domain name information (the result of running hostname and domainname)

dmidecode (the result of running dmidecode)

LVM device information (the result of running vgdisplay -v)

snmpd version information (snmpd -v execution result)

Virtual Infrastructure information (the result of running virt-what)

blockdev (the result of running blockdev --report)

When you collect logs, the following messages may appear on the console. They do not indicate a failure; the logs are collected normally.

hd#: bad special flag: 0x03
ip_tables: (C) 2000-2002 Netfilter core team

(where hd# is the name of an IDE device on the server)

Script

Start/stop scripts of groups created with the Cluster WebUI

User-defined scripts stored outside the above location (/opt/nec/clusterpro/scripts) are not included in the collected logs and must be collected separately.

ESMPRO/AC related logs

Files that are collected by running the acupslog command.

HA logs

System resource information

JVM monitor log

System monitor log

Mirror statistics information

Mirror statistics information in perf/disk

Cluster statistics information

Cluster statistics information in perf/cluster

System resource statistics information

System resource statistics information in perf/system

8.8.2. Syslog generations (-r option)

To collect syslogs for a specified number of generations, run the command as follows.

Example: Collecting syslogs for 3 generations

# clplogcc -r 3

With -r 3, the collected logs include the current syslog and generations 1 to 2, as shown in the table below.

When the -r option is not specified, only /var/log/messages is collected.

You can specify from 0 to 99 generations.

When 0 is specified, all syslog generations are collected.

| Number of generations specified | Generations to be acquired |
|---|---|
| 0 | All generations |
| 1 | Current |
| 2 | Current + generation 1 |
| 3 | Current + generations 1 to 2 |
| : | : |
| x | Current + generations 1 to (x-1) |
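The mapping in the table can be expressed as a small function. This Python sketch assumes that rotated syslogs are named /var/log/messages.1, /var/log/messages.2, and so on, matching the /var/log/messages.x notation used earlier; actual rotation naming depends on the distribution. The rotated_count parameter is a hypothetical input standing in for the number of rotated files present, which determines what "all generations" means.

```python
def syslog_files(generations, rotated_count=0, base="/var/log/messages"):
    """List the syslog files covered by clplogcc -r <generations>.

    Assumes rotated files are named <base>.1, <base>.2, ... (hypothetical;
    rotation naming depends on the distribution). rotated_count is the number
    of rotated files present and is used only when generations is 0 (all).
    """
    if not 0 <= generations <= 99:
        raise ValueError("generations must be between 0 and 99")
    # 0 means all generations; x means the current file plus generations 1..x-1.
    last = rotated_count if generations == 0 else generations - 1
    return [base] + [f"{base}.{n}" for n in range(1, last + 1)]
```

For example, syslog_files(3) returns the current file plus /var/log/messages.1 and /var/log/messages.2.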

8.8.3. Output paths of log files (-o option)

The log file is saved with the name "server name-log.tar.gz".

If an IP address is specified with the -n option, the log file is saved as "IP address-log.tar.gz".

Log files are compressed in tar.gz format. To decompress them, run the tar command with the xzf options (for example, tar xzf hostname-log.tar.gz).

When the -o option is not specified:

Logs are output to the tmp directory under the installation path.

# clplogcc
Collect Log hostname : Success
# ls /opt/nec/clusterpro/tmp
hostname-log.tar.gz

When the -o option is specified:

If you run the command as follows, the log file is saved in the specified /home/log directory.

# clplogcc -o /home/log
Collect Log hostname: Success
# ls /home/log
hostname-log.tar.gz
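The naming rule and the decompression step can be scripted. The following Python sketch is hypothetical: log_archive_path only reproduces the naming rule described above, using the default /opt/nec/clusterpro/tmp directory shown in the example, and extract_log_archive uses the standard tarfile module as an equivalent of tar xzf. When the running Python supports extraction filters, the "data" filter is applied, which among other checks refuses to extract members that would end up outside the destination directory.

```python
import tarfile
from pathlib import Path

# Default output directory shown in the example above (tmp under the installation path).
DEFAULT_DIR = Path("/opt/nec/clusterpro/tmp")


def log_archive_path(server, output_dir=None):
    """Expected archive path: '<server>-log.tar.gz' in the -o directory or the default.

    `server` is the server name, or the IP address when -n was given an IP address.
    """
    directory = Path(output_dir) if output_dir else DEFAULT_DIR
    return directory / f"{server}-log.tar.gz"


def extract_log_archive(archive, dest):
    """Python equivalent of `tar xzf <archive> -C <dest>`; returns the member names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Safer extraction on Python versions that support filters.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return names
```

For example, log_archive_path("10.0.0.1", "/home/log") gives /home/log/10.0.0.1-log.tar.gz; the address is a placeholder.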

8.8.4. Specifying log collector server (-n option)

By using the -n option, you can collect logs only from the specified servers.

Example: Collecting logs from Server1 and Server3 in the cluster.

# clplogcc -n Server1 -n Server3

Specify servers in the same cluster.

You can specify up to the maximum number of servers allowed in the cluster configuration.
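The clplogcc options described in this section can be assembled into a command line programmatically. A minimal Python sketch, assuming -t, -r, -o, and -n may be used together in one invocation (this section describes them separately); clplogcc_args and run_clplogcc are hypothetical helper names. The validation mirrors the documented ranges: type1 to type6 for -t and 0 to 99 for -r.

```python
import subprocess

VALID_TYPES = {f"type{i}" for i in range(1, 7)}


def clplogcc_args(log_type=None, generations=None, output_dir=None, servers=()):
    """Build a clplogcc argument list from the -t, -r, -o, and -n options."""
    args = ["clplogcc"]
    if log_type is not None:
        if log_type not in VALID_TYPES:
            raise ValueError("log type must be type1 to type6")
        args += ["-t", log_type]
    if generations is not None:
        if not 0 <= generations <= 99:
            raise ValueError("generations must be between 0 and 99")
        args += ["-r", str(generations)]
    if output_dir is not None:
        args += ["-o", output_dir]
    # Each server gets its own -n option, as in "-n Server1 -n Server3".
    for server in servers:
        args += ["-n", server]
    return args


def run_clplogcc(**options):
    """Run clplogcc; check=True raises CalledProcessError on a non-zero exit status."""
    return subprocess.run(clplogcc_args(**options), check=True)
```

On a cluster server, run_clplogcc(log_type="type2", servers=["Server1", "Server3"]) would run clplogcc -t type2 -n Server1 -n Server3.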

8.8.5. Collecting information when a failure occurs

When any of the following failures occurs, information for analyzing the failure is collected.

When a cluster daemon terminates abnormally because of a signal (core dump), an internal status error, or a similar cause

When a group resource activation error or deactivation error occurs

When a monitor resource detects a monitoring error

Information to be collected is as follows:

Cluster information

Some module logs in EXPRESSCLUSTER servers

Dump files of the shared memory used by EXPRESSCLUSTER

Cluster configuration information files

Core files of EXPRESSCLUSTER modules

OS information (/proc/*)

/proc/devices

/proc/partitions

/proc/mdstat

/proc/modules

/proc/mounts

/proc/meminfo

/proc/net/bond*

Information created by running a command

Results of sysctl -a

Results of ps

Results of top

Results of ipcs

Results of netstat -in

Results of netstat -apn

Results of netstat -gn

Results of netstat -rn

Results of ifconfig

Results of ip addr

Results of ip -s l

Results of df

Results of raw -qa

Results of journalctl -e

This information is also included by default when you collect logs, so you do not need to collect it separately.

8.9. Changing, backing up, and checking cluster configuration data (clpcfctrl command)

8.9.1. Creating a cluster and changing the cluster configuration data

The clpcfctrl --push command delivers cluster configuration data to servers.

Command line

clpcfctrl --push -l|-w [-c hostname|IP] [-h hostname|IP] [-p portnumber] [-x directory] [--force] [--nocheck]

Description

This command delivers the configuration data created by the Cluster WebUI to servers.

Option

--push
Specify this option when delivering the data. This option cannot be omitted.

-l
Specify this option when using the configuration data saved by the Cluster WebUI on Linux.

-w
Specify this option when using the configuration data saved by the Cluster WebUI on Windows. You cannot specify -l and -w together.

-c hostname | IP
Specifies the server to access to acquire the list of servers. Specify a host name or IP address. When this option is omitted, the address in the configuration data is used.

-h hostname | IP
Specifies the server to which the configuration data is delivered. Specify a host name or IP address. When this option is omitted, the configuration data is delivered to all servers.

-p portnumber
Specifies the port number of the data transfer port. When this option is omitted, the default value is used. In general, you do not need to specify this option.

-x directory
Specify this option to deliver the configuration data stored in the specified directory. Use this option together with -l or -w. When -l is specified, the configuration data saved on the file system by the Cluster WebUI on Linux is used. When -w is specified, the configuration data saved by the Cluster WebUI on Windows is used.

--force
Delivers the configuration data forcibly, even if some servers have not started.

--nocheck
When this option is specified, the cluster configuration data is not checked. Use this option only when deleting a server.
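A script that delivers configuration data can build the clpcfctrl --push command line from the options above. In this hypothetical Python helper, the required choice between -l and -w is a single source parameter, so the two options can never be specified together and -x is always accompanied by one of them; /mnt/config in the usage example is a placeholder directory.

```python
def clpcfctrl_push_args(source, directory=None, server_list_host=None,
                        target_host=None, port=None, force=False, nocheck=False):
    """Build a clpcfctrl --push argument list (hypothetical helper).

    source: "linux" for -l or "windows" for -w; exactly one is required.
    """
    flags = {"linux": "-l", "windows": "-w"}
    if source not in flags:
        raise ValueError("source must be 'linux' (-l) or 'windows' (-w)")
    args = ["clpcfctrl", "--push", flags[source]]
    if server_list_host:
        args += ["-c", server_list_host]   # server queried for the server list
    if target_host:
        args += ["-h", target_host]        # deliver to this server only
    if port is not None:
        args += ["-p", str(port)]          # data transfer port
    if directory:
        args += ["-x", directory]          # directory holding the configuration data
    if force:
        args.append("--force")
    if nocheck:
        args.append("--nocheck")
    return args
```

For example, clpcfctrl_push_args("linux", directory="/mnt/config") returns ["clpcfctrl", "--push", "-l", "-x", "/mnt/config"].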

Return Value

| Value | Meaning |
|---|---|
| 0 | Success |
| Other than 0 | Failure |
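Because the command reports success only through a return value of 0, a wrapper can treat any other value as a failure. A minimal Python sketch; run_and_check is a hypothetical helper name, and the command list passed to it is up to the caller.

```python
import subprocess


def run_and_check(args):
    """Run a command and return (succeeded, return_code, stderr_text).

    Follows the documented convention: 0 means success, any other value means failure.
    """
    completed = subprocess.run(args, capture_output=True, text=True)
    return completed.returncode == 0, completed.returncode, completed.stderr
```

Usage, with a placeholder directory: ok, code, err = run_and_check(["clpcfctrl", "--push", "-l", "-x", "/mnt/config"]).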

Notes

Run this command as the root user.

When you run this command, it tries to access the servers via the following paths in the order listed, and uses a path through which access succeeds:

via the IP address on the interconnect LAN

via the IP address on the public LAN
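The fallback order described above (interconnect LAN first, then public LAN) can be illustrated as follows. This is a Python sketch of the idea only, not how clpcfctrl is implemented: the TCP probe, the port, and the example addresses are assumptions.

```python
import socket


def tcp_probe(port, timeout=3.0):
    """Return a probe that reports whether a TCP connection to address:port succeeds."""
    def probe(address):
        try:
            with socket.create_connection((address, port), timeout=timeout):
                return True
        except OSError:  # refused, unreachable, or timed out
            return False
    return probe


def first_reachable(addresses, probe):
    """Try addresses in order and return the first one the probe accepts, else None."""
    for address in addresses:
        if probe(address):
            return address
    return None
```

For example, first_reachable(["192.168.0.1", "10.0.0.1"], tcp_probe(port)) tries a hypothetical interconnect address before a hypothetical public address.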

Example of command execution

Example 1: Delivering configuration data that was saved on the file system using the Cluster WebUI on Linux